recitation ds

本文主要记录对分布式系统部分的设计细节和实现部分相关问题的想法。

GFS¶

GFS: machine failures

简单来说,GFS是一个使用拷贝的文件系统,它的结构包含controller, chunk server, client。这些角色的交互来完成拷贝的功能,以防止大集群中某个机器的崩溃。它和unix文件系统的区别在于,GFS更能适用有很大工作量写入写出读入读出的情况(有数百TB计的存储量的数千磁盘在数千机器上的大集群收到数百用户并行的访问)

Mapreduce¶

…

ZFS¶

…

consistency guarantees¶

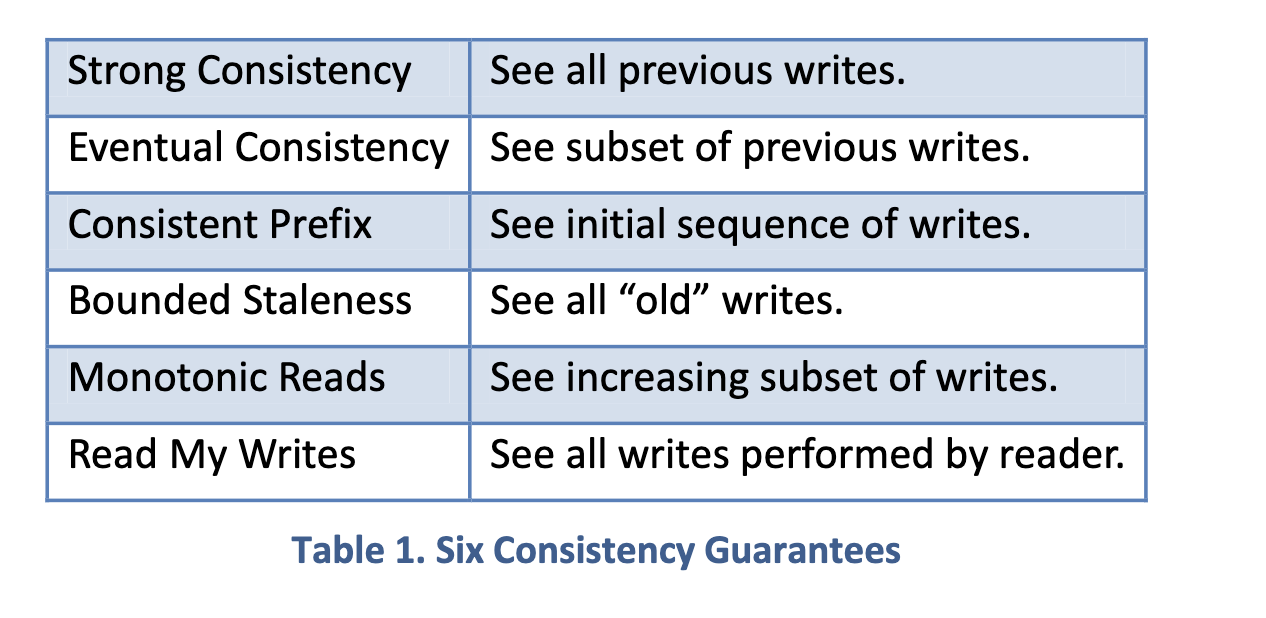

What: Consistency guarantee is a contract between the data provider and clients. It can affect a system in three aspects: consistency, performance and availability.

How: A system designer should understand the particular kind of need of clients and how the system operates to decide which consistency guarantee.

Why: It matters because the system can be the best version for client’s needs, faster without loss of correctness.

Design process¶

Data availability is a term used by computer storage manufacturers and storage service providers to describe how data should be available at a required level of performance in situations ranging from normal through disastrous.

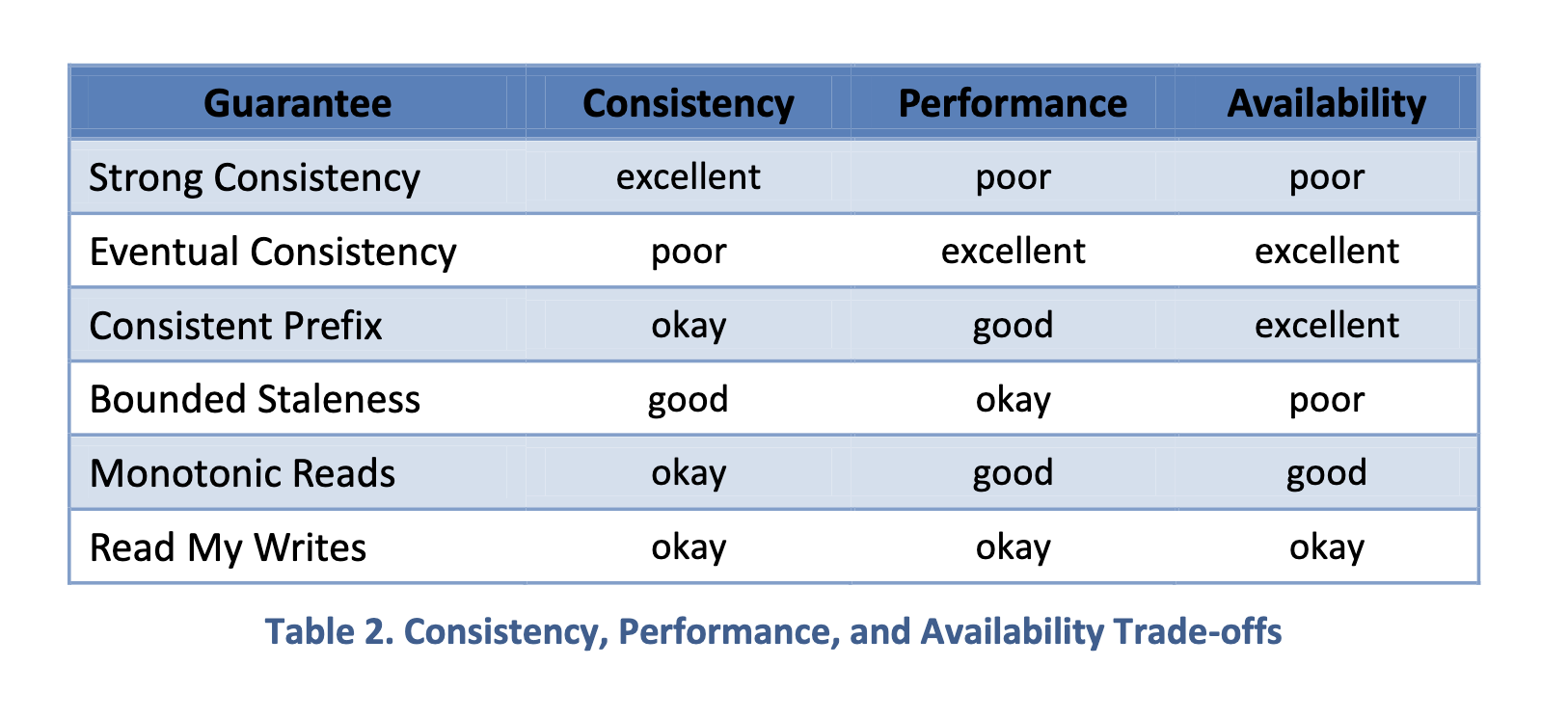

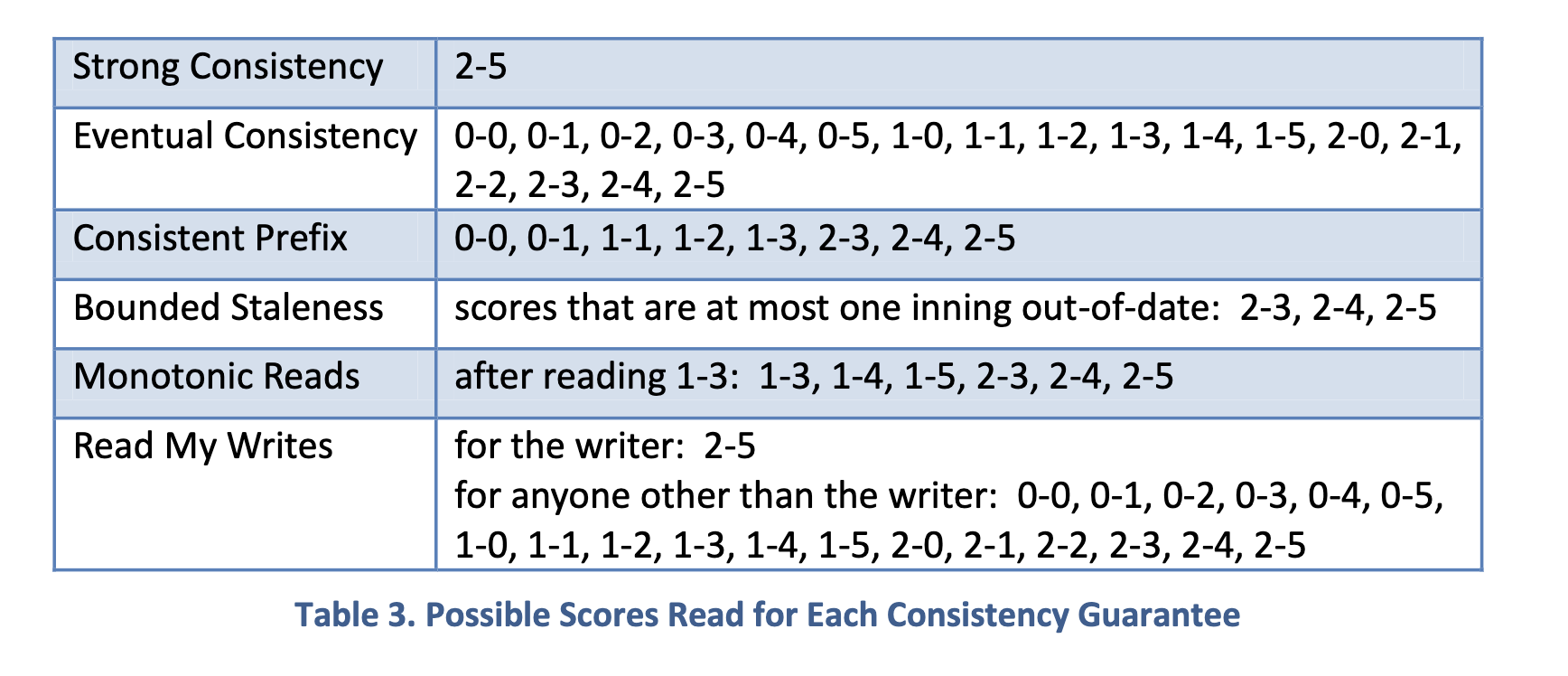

The paper describes four intermediate consistency protocols between strong consistency and eventual consistency, which gives more flexibility while preserving correctness. Giving the example of baseball game, it lists six peoples to use the data store for different purposes. Here, I will give the reasoning process to the table 2.

The consitency and performance have inverse correlation. In other words, if the data is more consistent, it means the server which updates the data needs to pass on the new data to every other servers. One server crashed would cause the wrong data got transferred. Therefore strong consistency model has excellent consistency and poor performance and vice versa.

The four intermediate consistency models can get higher performance than strong consistency due to partial correctness of the data. They don’t need the data in every server is consistent at the same time giving the specific applications providing. Bounded staleness has poor availability as the strong consistency for the same reason of requirement for time. The server can not guarantee the correctness in specific time.

Baseball example¶

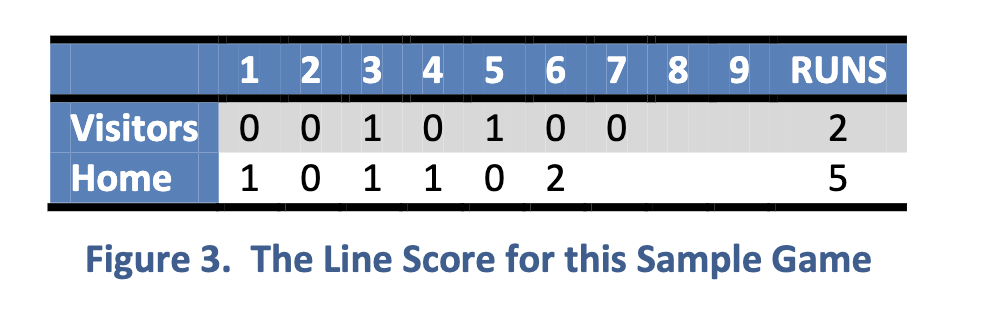

A baseball game example: minimal consistency of read operation in a simple data store(replicated storage systems) containing only two teams’ scores.

-

Official scorekeeper: the scorekeeper is the only person to write the data. They need the most updated scores so that strong consistency seems the appropriate one. However they are the only server to write the data and pass on to others. Therefore read my writes would be sufficient.

-

umpire: strong consistency. They can’t provide wrong scores because it’s a fair game.

-

Radio reporter: They need tell the audience a more updated scores than before. Consistent prefix is not enough because they are using scorekeeper’s data and old scores can emerge later than new ones. Therefore it have to maintain the monotonic reads at the same time. Alternatively, monotonic reads with bounded staleness can achieve the same goal.

-

sportswriter: bounded staleness. They need the most updated scores but not in a hurry. So they can wait for the final score at least it is correct one.

-

statistician: for one team’s score, they need strong consistency; for one season’s score, they need read my writes for the same reason as scorekeeper.

-

stat watcher: eventual consistency. It’s ok to provide out-of-date data.

Some thinking¶

-

What systems need weaker consistency? I think the systems that incline to performance and donot heavily rely on real-time computing results such as bank accounts. For example, social media systems like twitter. Updates of tweets are not critical for users.

-

I think understandability is a worthy design goal. If even the developers can hardly understand the protocol, how can they make improvements based on the customer needs and develop relevent features on top of it.

-

To decide on a particular consistency model, I think it requires a thorough study of current target customers and future costs it may bring giving the current design goal. Different cloud storage providers have made different decisions. Amazon S3 uses eventual consistency to improve the performance and availibility[1]. It gains more availibility with the proliferation of geo-replicated services. However Azure aims for specific organizations which needs strong consistency for correctness. I think they are targeting different customer bases.

Raft¶

What is Raft[2]?

Raft is a protocol for implementing distributed consensus algorithms so that distributed system can reach agreements having same states. Simply speaking, it’s a way that makes client and its many servers have x = 3 when the client sets x to 3.

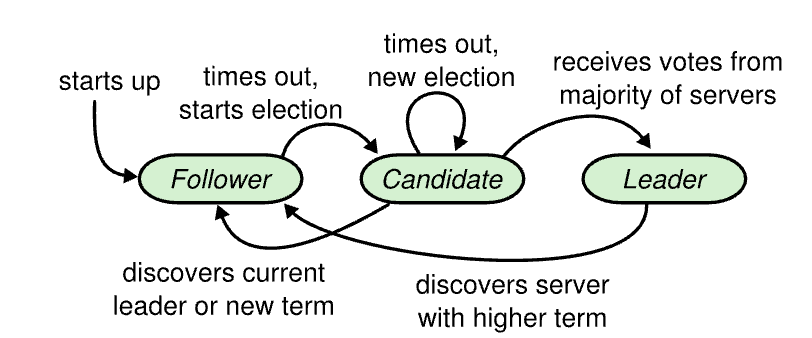

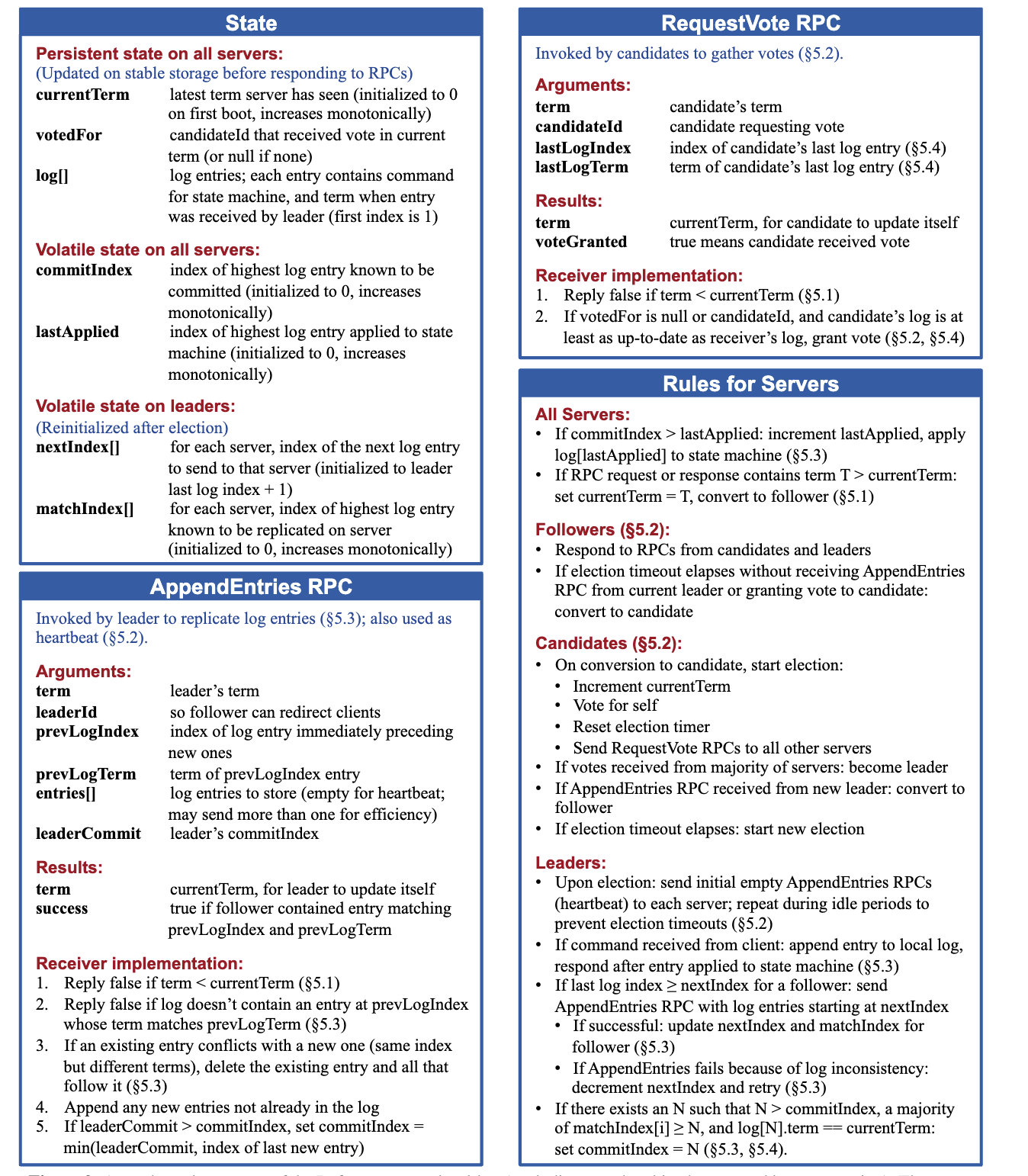

Rules: A Raft cluster has several servers (>=5). A server node has 3 states: follower, candidate, leader. Each node has a log and a state machine.

-

state machine of servers: an election term

-

Time is split into

terms, like logical clocks in Raft. -

Raft servers communicate through RPCs in parallel. Servers retry RPC if they donot receive a response in a timely manner.

- RequestVote RPCs

- AppendEntries RPCs

Problem 1: a split vote if 2 nodes become candidates at the same time in leader election

Re-elect until one node becomes leader (receive a majority of votes. Majority means over a half of members.). Majority != max.

Problem 2: network partition in log replication

The system will reach consensus again when the network partition is healed for following reason. When a node sees the higher election term, it will step down, which means to roll back its uncommitted entries and match the new leader’s log.

Background¶

-

Goal of Raft is understandability without losing performance.

-

Backgroud: Paxos, the previous main-stream consensus algorithms, is hard to understand and complicate to maintain the systems built on top of that.

-

Replicated state machine, a replicated log containing the state machine commands from clients. Consensus algorithms usually have properties as: safety (network delay/partition, packet loss, duplication, reordering), availabilty (if a majority of servers in the system is running, the sys can work. The failed ones can work after recovering from state on storage), time irrelevant consistency, Majority Rules.

-

Why leave Paxos? Error prone, hard to implement/maintain/understand

(lol a section to describe why Paxos is so hard)

-

Single-decree decomposition is hard to maintain replicated log where it separates partial logs of the system and then merges them into one in the end. Consensus algorithms should have constraints on the order of committed logs.

-

Big gap between the theory and real systems in multi-Paxos. And correctness is hard to maintain when the system built on top of Paxos eventually produces its own architecture.

-

-

Raft improves understandability by

- divide and conquer: decompose consensus to independent part: leader election, log replication, safety and membership changes

- simplify state space: reduce #states to consider. Ex: randomization: introduce non-determinism with random timeout settings, which reduces state space

Consensus algorithms¶

First, keep in mind that Raft is a protocol to manage replicated logs.

Problem 1: what is the leader of a Raft cluster? How it works?

- A leader manages the replicated log

- A leader’s tasks

- receives/handles requests from client: client -> leader; client -> follower -> leader

- sends messages to all its followers: replicates its log entries/heartbeat

- A follower that receives no communication over a period of time will become a candidate with

current_term += 1& state transition. - Randomized retry approach: Raft randomly chooses election timeouts from a fixed interval (150-300 ms) to reduce the frequency of mulitple servers having timeouts together. It means each candidate has random election timeout. This approach is to solve the availability issues that a ranking systems can not.

- Eventually, after a leader is elected, the log consistency converges due to consistency check of appendEntries RPCs.

Problem 2: log replication

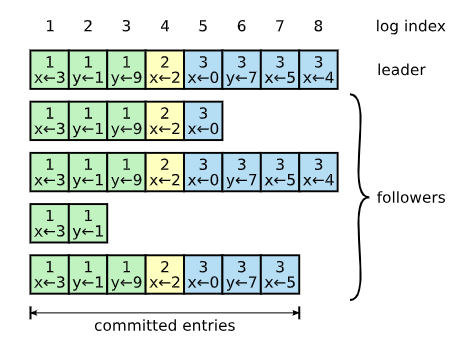

- A log entry is committed when the leader replicates it on a majoritiy of the servers.

- A log entry represents a command not the result of a command, which makes preceding entries trackable.

- Consistency check:

- send (i): [entry(i-1)][entry(i)] to server S_i, S_i checks if entry(i-1) in its log and only replicates entry(i) when the check passed.

- if the follower is inconsistent with the leader, the check will find the latest entries that both servers agree and overwrite the following with the leader’s after that point.

- A

nextIndexapproach. - A leader’s right

- A leader can modify its followers’ log entries.

- A leader can append new entries to its own log (but cannot delete/overwrite its log)

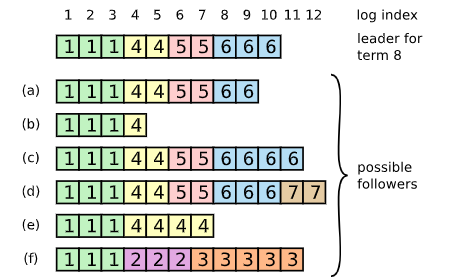

Operation sequences lead to the failure cases:

- has missing entries

(a) server a crashed at term 6

(b) server b crashed at term 4 - has extra uncommitted entries

(c) server c was the leader for term 6, added serveral entries to its log but only committed some of its entries.

(d) server d was the leader for term 7, added serveral entries to its log but committed none of its entries. - has missing entries and extra uncommitted entries

(e) server e was the leader for term 4, added serveral entries to its log but only committed some of its entries. Then it crashed and remained down for several terms.

(f) > Server f was the leader for term 2, added several entries, then crashed before committing any of them. It restarted quickly and became the leader for term 3, behaved the same as term 2. Then it crashed again and never came back for several terms.

-> Implies that a server having more entries than other servers in one term could be the leader for that term.

-> And no entries will get replicated after a server receiving the entries crashed. No entries will get committed after a leader crashed.

Problem 3: Why does Raft need a leader?

Problem 4: Safety measures and restrictions

Problem 5: failures

- leader crashes: consistency guarantees

- follower/candidate crashes: retry RPCs indefinitely. Supported by idempotent property of Raft RPCs.

- network partition

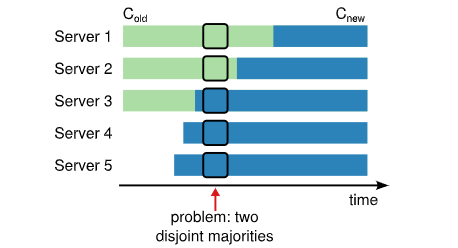

Problem 6: cluster membership changes. Operatiosn like replacing disks/servers, changing the degree of replication.

Problem 7: log compaction

Snapshotting in Raft

Intuition of terms¶

-

Leader Election: When an election term starts, the candidate sends"VOTE FOR ME"messages to others. If the receiving node hasn’t voted yet in this term then it votes for the candidate and the node resets itselection timeout. Once a candidate has a majority of votes it becomes leader and the node resets its election timeout. The leader begins sending outAppend Entriesmessages to its followers. Followers then respond to eachAppend Entriesmessage inheartbeat timeout. This election term will continue until a follower stops receiving heartbeats and becomes a candidate[3]. -

Log Replication: Leader notifies its followers the committed entry by replicating all changes to all nodes from it. An entry is committed once a majority of followers acknowledge it and a response is sent to the client. -

Process: follower => candidate => leader, If followers don’t hear from a leader then they can become a candidate. The candidate becomes the leader if it gets votes from a majority of nodes.

-

2 timeout settings control election:

-

The

election timeoutis the amount of time a follower waits until becoming a candidate, randomized to be between 150ms and 300ms. -

The leader sends messages to its followers in intervals specified by the

heartbeat timeout.

-

-

Byzantine Fault: a metaphor for untrusted components in systems. Every component can go wrong and it’s hard to infer which one is wrong.

-

An operation is idempotent which means performing the same op multiple times has the same effect as performing it once.

-

Availabilty of Raft is the ability of the system to respond to clients in a timely manner.

-

MTBF, mean time between failures. Typical server MTBFs are several months+

olc notes¶

-

In Raft, a leader is a server that manages replicated log of the system. Leader receives all calls from clients and sends requests to its followers in normal condition.

-

Raft handle the following three types of failures:

- leader failures

- candidate or follower failures

- network partitions

A visualization of Raft ↩︎

The explanations of terms come from a visualization of Raft ↩︎