Speeding Up Parallel Programs

Terminology:

-

CPU clock cycle: one tick of the processor core’s clock.

-

Superscalar execution: processor automatically finds independent instructions in an instruction sequence and can execute them in parallel on multiple execution units. A two-way superscalar core can run two indepent scalar instructions per clock.

-

SM = Streaming Multiprocessor, the compute block in NVIDIA GPUs.

-

Transistors increase sophistication of processor logic that accelerates a single instruction stream of a processor. More transistors indicate larger cache, smarter out-of-order logic, smarter branch predictor, etc.

-

One core means one instruction stream.

-

ALU is an execution unit.

-

SIMD, single instruction, multiple data. It is the execution unit inside a single hardware context. Same instruction is broadcast to all ALUs, This operation is executed in parallel on all ALUs.

-

8-wide SIMD means a single SIMD instruction operates on 8 data lanes in parallel. A SIMD vector register holds 8 elements (the lanes). One instruction (e.g. add, multiply) performs the operation on all 8 lanes simultaneously. 8 lanes of 32-bit floats are 8 × 32 = 256 bits wide.

Example: v = a + b. The hardware does v[i] = a[i] + b[i] for i = 0…7 in one instruction cycle depending on throughput/latency.

-

A mask is a bit vector that tells the SIMD processor which lanes should be active for a given instruction. In an 8-wide SIMD unit, you get an 8-bit mask where each bit controls one SIMD lane.

-

Instruction stream coherence (coherent instruction): the same instruction sequence applies to many data elements. It is at the level of one core for SIMD processing.

-

divergent execution: a lack of instruction stream coherence in a program.

-

explicit SIMD: SIMD parallelization is performed at compile time.

-

Implicit SIMD: h/w is responsible for execution.

-

SMT, Simultaneous multi-threading. Each clock, core chooses instructions from multiple threads to run on ALUs.

-

GPU SIMT, single instruction, multiple threads.

-

Memory bandwidth: The rate at which the memory system can provide data to a processor

-

Compute-bound: a task, job or process is said to be CPU-bound (or compute-bound) when the time it takes for it to complete is determined principally by the speed of the central processor.

-

SPMD: single program multiple data

-

ISPC is short for Implicit SPMD Program Compiler

-

Advanced Vector Extensions (AVX) are SIMD extensions to the x86 instruction set architecture for microprocessors from Intel and Advanced Micro Devices (AMD).

-

parallel slack = ratio of independent work to machine’s parallel execution capability (in practice: ~8 is a good ratio)

Parallelism for Efficiency¶

The reason why we need parallelism is that power limits CPU clock frequency. Instruction level parallelism at processors is tapped out.

Big concerns in computing are:

power = heat/battery. It’s common requirements to run a program for a longer time or run with higher performance for a fixed time. If a chip gets too hot, it must be clocked down to cool off.

Parallel programming aims for three aspects:

- design at scale. Communication cost (synchronization) is a big factor at times.

- h/w implementation: the characteristics of the machine matter to efficiency and performance

- h/w efficiency: fast != efficiency. The evaluation is based on performance/cost.

Parallel to scheduling respects program order.

Cache is faster than DRAM. Caches are implemented in SRAM.

Cache operates at the granularity of cache lines. It means if the byte loading is not present in the cache, CPU requests the entire cache line where the byte resides starting at the cache line boundary. Common cache line size is 64 bytes.

Memory access time in another words is latency. A DRAM access is typically ~50–300 times slower in latency than an L1 access.

Data movement has high energy cost.

Rule of thumb in modern system design: always seek to reduce amount of data movement in a computer.

Multi-core Processors¶

A modern multi-core processor increases parallelism in many aspects:

- Superscalar: ILP improvements. Executes up to two instructions per clock from a single instruction stream, which is about instruction throughput.

- Multi-core: Add more cores (processing units). Executes one instruction per clock … on each core.

- SIMD (within a core): Add execution units (ALUs) to increase compute capability. Executes one 8-wide SIMD instruction per clock …

Instructions are generated by the compiler. That means parallelism is inferred by compilers.

SIMD¶

Problem: Think of piece of code that yields the worst case performance on a processor with 8-wide SIMD execution. Hint: use only a single “if” statement

For conditional execution, SIMD processors have to execute both branches when there’s divergence, then mask out the incorrect results. After branch, SIMD processors continue at full performance.

The worst case is 1/8 peak performance. We can use 1/7 split to degrade SIMD utilization to 1/8. Fro example, if i == 0. The key is actually utilization of resources.

Reduce memory access latency by many ways:

- Caches reduce length of stalls.

- Data prefetching reduces stalls (hides latency). Many modern CPUs have logic for guessing what data will be accessed in the future and “pre-fetching” this data into caches. Pre-fetching can also reduce performance if the guess is wrong, which consumes bandwidth and pollutes caches.

- Multi-threading reduces stalls. Interleave processing of multiple threads (Hardware-supported multi-threading) on the same core to hide stalls.

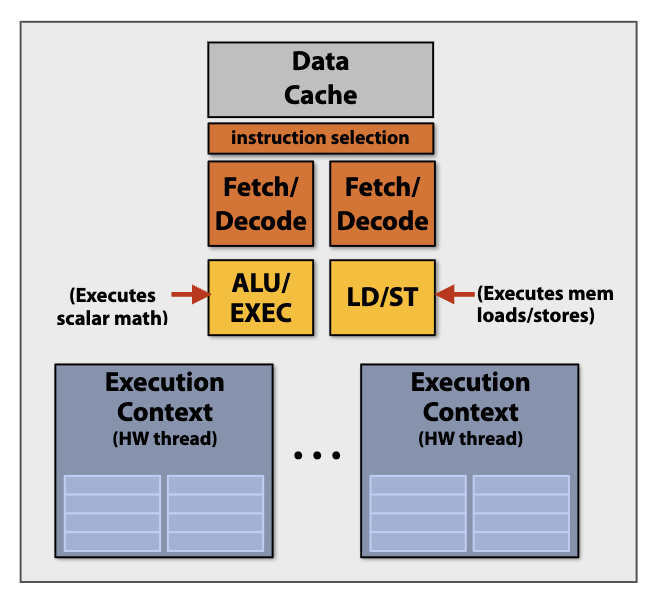

H/W Multi-threading¶

Key idea of throughput-oriented systems: Potentially increase time to complete work by any one thread, in order to increase overall system throughput when running multiple threads.

The cost of storing execution contexts: Assume on-chip storage of execution contexts as a finite resource, how many threads can achieve 100% utilization of one core?

A processor with multiple h/w threads can avoid stalls by performing instructions on another thread when a thread takes a long latency operation to complete. The processor utilization is no longer reduced by the long running thread.

Programs that feature more arithmetic per memory access need fewer threads to hide memory stalls.

Architecture of a modern processor is a multi-core chip with multi-threaded, superscalar cores. It can be:

16 core/ 8 SIMD ALUs per core/4 (h/w) threads per core.

-> 16 simultaneous instruction streams / 64 total concurrent instruction streams / 512 independent pieces of work to run chip with maximal latency hiding ability

For example, one SM unit of a NVIDIA GPU can be:

64 “warp” execution contexts per SM / Wide SIMD: 16-wide SIMD ALUs (carry out 32-wide SIMD execute over 2 clocks)

-> 2048 data items processed concurrently per “SM” core

One GPU can have 80 SM cores.

GPU SIMT: modern GPUs excute hardware threads running only scalar instructions. GPU cores implement simultaneous execution of SIMD-width threads when they are executing the same instruction. A divergent thread would be masked off.

Applications for utilizing modern processors efficiently must:

- Utilize all available execution units across many cores and many execution units (ALUs) on one core

- Groups of parallel work items must require the same sequences of instructions to utilize SIMD execution

- Expose more parallel work than processor ALUs to enable interleaving of work to hide memory stalls

Bandwidth¶

Memory bandwidth is a rate at which data is transferred from memory to a processor.

A bandwidth limited computation is that the bandwidth of the memory system cannot keep up the rate processors request data.

Problem: how to overcome bandwidth limits?

Programs must access memory infrequently to utilize modern processors efficiently.

Reducing memory accesses:

Reuse data loaded by the same thread and share data across threads. Example: perform math to storing values.

Fun fact: modern CPUs may contain up to 20 stages in their instruction pipeline.

GPU may not be used more efficiently than CPU. But it’s faster than CPU for throughput workloads. GPUs are built for data-parallel work and much higher memory bandwidth, so for massively parallel floating-point tasks (matrix multiply, convolutions, large vector operations, dense linear algebra) they can be orders of magnitude faster than a CPU.

Abstraction vs. Implementation¶

Abstraction: semantics of operations provided by a programming model. What are expected from the program to compute?

Implementation: scheduling of parallel programs. How will the program actually compute on a parallel machine?

Our goal: A parallel programming model implementations -> Trace through what each part of parallel computer is doing during each step of program.

SPMD is the programming abstraction for ISPC Programming (parallel programming). And SIMD implementations handle mappings of conditional control flows to vector instructions. Masking vector lanes is one example.

- SPMD programming abstraction: An ISPC function spawns gangs of ISPC programming instances which run ISPC code concurrently. Each instance has its local copy of variables. Upon return, all instances have completed.

- ISPC compiler generates SIMD implementation. Number of instances in a gang is the SIMD width of the hardware (or a small multiple of SIMD width). ISPC compiler generates a C++ function binary (.o) whose body contains SIMD instructions. C++ code links against generated object file as usual.

- An ISPC gang is implemented by SIMD instructions executing within one thread of one CPU core. An ISPC task is executed within multi-core CPU.

Keywords[1]:

programCount: number of simultaneously executing instances in the gang (uniform value)

programIndex: id of the current instance in the gang (a non-uniform value: “varying”)

uniform: A type modifier. All instances have the same value for this variable. Its use is purely an optimization. Not needed for correctness.

foreach declares parallel loop iterations which the entire gang must perform

reduce_add(): A cross-instance communication primitive.

1 | # Compute sum of a variable’s value in all program instances in a gang |

ISPC implementation takes responsibility for assigning iterations to program instances in the gang.

Scheduling: map iterations to program instances

- Blocked assignment of program instances to loop iterations: If the SIMD width is eight, divide iterations to eight groups and assign each program instance sum/eight iterations. For all program instances in the gang, the #programCount values of the same index are not contiguous in memory, which require a costly gather instruction to fetch values.

- Interleaved assignment: assign each program instance one iteration one by one. For all program instances in the gang, the #programCount values are contiguous in memory, which can be implemented by a

packed vector loadinstruction. - Dynamic assignment: assign new work depending on the status of other program instance

- Fixed assignment: for example, always assign one program instance all iterations

It’s a bad scenario where multiple iterations of the loop body write to same memory location. The output of such a program is undefined.

PP Basics¶

Amdahl’s law: the overall performance improvement gained by optimizing a single part of a system is limited by the fraction of time that the improved part is actually used.

Let S be the fraction of work that is inherently sequential. The rest of work that can be parallel is (1-S).

Max speedup on P processors: speedup <= 1 / [(1-S)/P + S] <= 1/S

Dependencies prevent parallel execution. A small serial region can limit speedup on a large parallel machine where the speedup is 1/S as P >> S.

A parallel problem can be divided into four parts: decomposition, assignment, orchestration and mapping to hardware.

- Decomposition is breaking up problem into tasks that can be run in parallel and identifying dependencies of tasks.

- Assignment is assigning tasks to workers (threads) to achieve a good workload balance and reduce communication costs.

- Orchestration involves synchronization, scheduling and communication.

- Mapping to hardware is mapping workers to hardware execution units.

There are two ways to solve a parallel problem. Data-parallel model supports hardware dependent intrinsics, exposing special built-in primitives to synchronize or communicate. For example, the forall loop has an implicit barrier at the end of the body. Shared address space model is generally used not just in parallel problems.

- Data-parallel programming model.

- Shared address space/SPMD. It’s usually used in multi-threaded programs. Locks express mutual exclusion for shared variables. Barriers express dependencies of computation phases they divide into.

Barriers are used for dependent phases of computation. Each processor has its own private memory. Processors need to be synchronized to share data. All computation by all threads before the barrier complete before any computation in any thread after the barrier begins.

Performance Optimization¶

Optimizing performance of parallel programs has misaligned goals:

- Balance workload onto execution resources

- Reduce communication cost

- Reduce overhead of orchestration

Parallel programming rules of thumb: We want at least as much independent work as the parallel execution capability allows, preferably more. But the work should not be too much so that granularity of work is too small.

In general, fine-grained work incurs overhead of management while coarse-grained work incurs load imbalance.

Summary of Tips:

- Implement the simplest solution first, then measure performance to determine room for improvement.

- Establish high watermarks for your program.

- Be aware of scaling issues.

Work Distribution¶

To achieve good workload balance, we want all processors working but low-cost of communication and orchestration (synchronization).

Work assignments can be classified as static, semi-static or dynamic. Choosing between static or dynamic depends on system knowledge of workload so as to reduce load imbalance and synchronization costs.

Static: Assignment of work to threads does not depend on dynamic behavior. The assignment is static when the assignment is determined given the amount of work and number of workers. It may depend on runtime parameters such as input data size. But statistics about execution time are predictable.

Semi-static: Cost of work is predictable for near-term future. Application periodically profiles its execution and re-adjusts assignment.

Dynamic: Assignment of work is determined dynamically at runtime to ensure a well-distributed load.

Scheduling Fork-join Programs¶

Fork-join pattern is natural to express divide-and-conquer algorithms. The examples are in Cilk Plus. A fork is creating new logical thread of control. A join is syncing up with the spawned calls.

cilk_spawn foo(args);

Caller may continue executing asynchronously with execution of foo.

cilk_sync;

An implicit cilk_sync is at the end of every function containing a cilk_spawn.

Main idea is to expose independent work to the system using forks. Scheduling may incur performance problems like heavyweight spawns, context switching overhead, larger working set than necessary.

1 | # spawned child |

Cilk’s implementation of thread pool. The Cilk runtime maintains pool of worker threads. It’s exactly one execution context for one worker thread in the machine. Per-thread work queues store work to do. Thread continually pops continuation from work queue, enqueues new continuation. Idle threads steal work from busy threads.

Calling thread can run the child or the continuation at spawn.

- Run continuation first: queue child for later execution which is available for stealing by other threads (child stealing).

- Caller thread spawns work for all iterations before any execution.

- Breadth-first traversal of call graph. O(N) space (N is problem size)

- Without stealing, different execution order from the same program without spawning children.

- Run child first: enqueue continuation for later execution (continuation stealing).

- Caller thread creates one item of all remaining iterations to steal and executes current iteration.

- Depth-first traversal of call graph. Same execution order when no stealing.

- Space. Work queue storage for system with T threads is no more than T times that of stack storage for single threaded execution

Work stealing with run child first scheme implementation:

- Dequeue per worker: Every worker has a work queue. Work queue is implemented as a dequeue (double ended queue). Local thread pushes/pops from the tail (bottom). Remote threads steal from head (top).

- Choice of victim: Steal work from top of dequeue. The top element of work queue has largest amount of work. In this way, each thread can perform work with maximum locality. Stealing thread and local thread do not contend for same elements of dequeue when dequeue applies lock-free scheme.

Greedy join scheduling. All threads always attempt to steal if there is nothing to do. They go idle only if there is no work to steal. The worker thread executes logic after cilk_sync may be different from thread that initiated spawn.

Sync implementation:

Without stealing, there is nothing to do at sync point.

For stealing case, Cilk uses descriptors to bookkeep steals and sync points. The descriptor tracks the number of outstanding spawns for the section, and the number of those spawns that have completed.

Memory Models¶

There are two memory models. One is shared address space model where threads communicate by reading and writing to variables in shared address space. The other is message passing model where threads operate within their own private address spaces. Shared address space model requires hardware support to implement a single shared address space for all processors. In contrast, message passing model does not have such requirements. It only needs to provide communication mechanisms between nodes, which makes it easier to connect commodity systems to form a large parallel machine for clusters and supercomputers.

Non-uniform memory access (NUMA): The latency of accessing a memory location may be different from different processing cores in the system. Bandwidth from any one location may also be different to different CPU cores. In practice, NUMA behavior can also be found on a single-socket system since different cache slices are a different distance from each core.

Shared address space hardware architecture: any processor can directly reference any memory location. It can be costly to scale to large numbers of processors, increasing communication cost.

Message passing model: sending messages is the only way to exchange data between threads. The communication interface is send(dest, buffer, msg_id) and recv(sender, buffer, msg_id).

Message passing can be synchronous (blocking) and asynchronous (non-blocking).

- Synchronous (blocking): calls return when caller receives acknowledgement that message data resides in address space of callee

- Asynchronous (non-blocking): calls return immediately. Use checksend(), checkrecv() to determine actual status of send/receipt.

Communication¶

A parallel system can be viewed as an extended memory hierarchy. The lower the level, the higher the latency, the lower the bandwidth, and the larger the capacity.

1 | Processor: |

Communication refers to not only messages between machines. It also refers to messages between a processor and its cache, between processor and memory, or between processor and a remote memory. Non-local memory accesses result in communication with other levels. Managing locality to reduce the amount of communication performed is important at all levels.

Arithmetic intensity = amount of computation (e.g., instructions) / amount of communication (e.g., bytes)

1/Arithmetic intensity is communication-to-computation ratio.

High arithmetic intensity is required to utilize modern processors.

There are two reasons for communication:

- Inherent communication: Communication that must occur in a parallel algorithm. Good assignment decisions can reduce inherent communication

- Artifactual communication: all other communication. It results from system implementations such as cache line size. Recall that when a program load a variable, entire cache line must be transferred from memory. For examples, a system may have minimum granularity of data transfer, causing unnecessary communication. System operation might result in unnecessary communication (store, load, overwrite, store). A system may have finite replication capacity (capacity miss).

A resource can perform operations at a given throughput (number of transactions per unit time). Contention occurs when many requests to a resource are made within a small window of time (the resource is a “hot spot”).

Techniques for reducing communication:

- Reduce overhead of communication to sender/receiver

- Send fewer messages, make messages larger (amortize overhead)

- Coalesce small messages into large ones

- Reduce latency of communication

- s/w: restructure code to exploit locality. For example, change array traversal order to fit cache capacity.

- h/w: improve communication architecture

- Reduce contention

- Replicate contended resources: local copies, fine-grained locks

- Stagger access to contended resources.

- For example, distributed work queues reduce contention in access to single shared work queue.

- Increase communication/computation overlap

- s/w: async messages

- h/w: pipelining, multi-threading, pre-fetching, out-of-order exec

Performance Analysis¶

A strategy is to try and establish high watermarks. Try to find out what’s the best performance in practice, which tells you how close your implementation to a best-case scenario. Determine if your performance is limited by computation, memory bandwidth (or memory latency), or synchronization?

Roofline model is a plot whose X axis corresponds to arithmetic intensities (memory bandwidth) and whose Y axis corresponds to maximum obtainable instruction throughput (compute). It depicts optimization regions.

To establish high watermarks, there are some techniques to show how compute, bandwidth or sync affect execution time of a program. Although overall performance is influenced by many factors, the performance change to the program modifications can be a good indication of dominant costs.

- Add math (non-memory instructions)

- Remove almost all math, but load same data

- Change all array accesses to A[0]

- Remove all atomic operations or locks

Use profilers/performance monitoring tools. All modern processors have low-level event “performance counters”. Registers that count important details such as: instructions completed, clock ticks, L2/L3 cache hits/misses, bytes read from memory controller, etc.

Understanding problem size issues

- Speedup should be measured against the performance of the best sequential program, not a parallel version of a program running on single processor.

- Evaluating a machine with a fixed problem size can be problematic. Problem size that is too small for the machine leads to a large communication-to-computation ratio. If problem size is too large for a single machine, working set may not fit in memory, causing thrashing to disk. Super-linear speedups can occur on a bigger parallel machine where the working set can fit in memory.

- Scaling problem size can be desirable as machine sizes grow. Buy a bigger machine to compute more, rather than just compute the same problem faster

ISPC Basics¶

compile a program:

1 | ispc (--target=avx2-x8) -O2 -h simple_ispc.h -o simple_ispc.obj simple.ispc |

(GPT-5t-mini)

--target=avx2-x8— choose the code-generation target.

This picks an x86 AVX2 codepath and a gang/vector width. ISPC historically uses names likeavx2-i32x8to mean “AVX2 target with 32-bit base types and 8 lanes” (i.e. 8 program instances / SIMD lanes). Older short forms likeavx2-x8are still accepted by some ISPC builds, but the explicit formavx2-i32x8is clearer and recommended. The gang width determines how many ISPC program instances run in lockstep (and usually maps to SIMD lanes).

-O2— optimization level (optimize for speed).

-h simple_ispc.h— generate a C header file (simple_ispc.h) with the C-callable function declarations that match your ISPCexportfunctions.

-o simple_ispc.obj— write the compiled object file with this name. On Linux/macOS you’d typically use.o(e.g.simple_ispc.o); on Windows/MSVC use.obj.

simple.ispc— the input ISPC source file.