Utilizing IO Queue Depth for Replicated Storage

I recently looked into IO queue management and worked on utilizing IO queue depth (QD) on the client side for an in-house replicated storage device. A client connecting to several servers tracks in-flight IO requests for servers so that the number of IO requests on each server would not go over a certain limit.

Background¶

QD represents the number of in-flight requests in the moment. A common way to control QD for a device is to combine bitmap with atomic operations, providing a lockless but safe accesses. It’s how null_blk device manages tags as below[1].

1 | static void put_tag(struct nullb_queue *nq, unsigned int tag) |

Design of New IO queues¶

Different from null_blk, the constraints of our task come from two respects. First, the QD of each server needs to be tracked on the client side. Secondly, the IO requests are divided into two groups, reads or writes. A read request consumes one IO unit for one server while a write request consumes IO units for each server. Previously, the client adopted one bitmap whose size is QD of all servers but incurred wild address accesses when IOs are extensive. The original design was also rigid for connecting a new server to the client or shutting down a connection. Then the QD of the client is set to QD of one server, which fixed the issue with concurrent IOs but doesn’t utilize QD of the client entirely. For read IOs, the QD of the client is utilized 1/(# of servers).

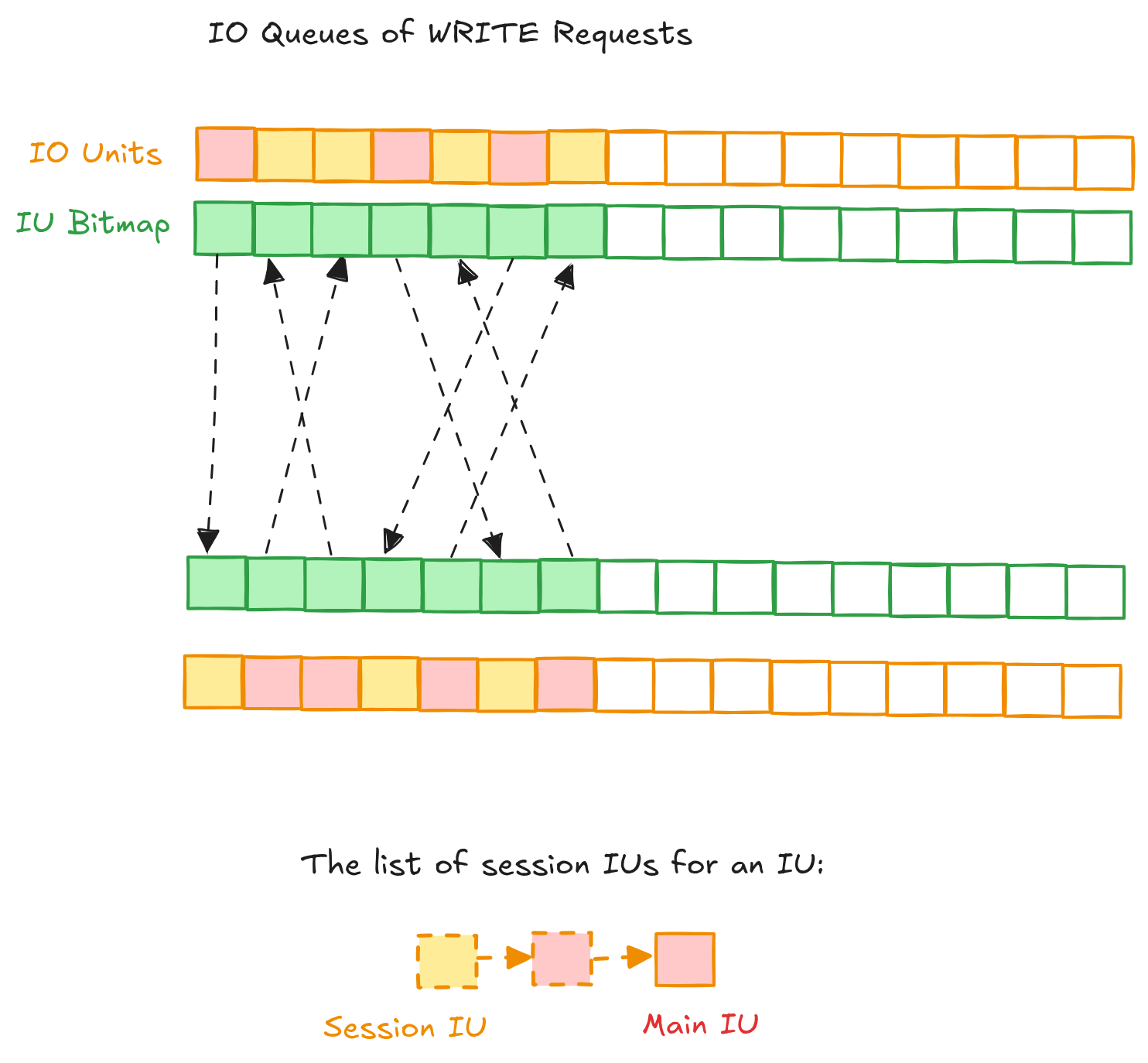

The new design fully utilizes QD on the client side by assigning each server a bitmap and a list of IO units with size of QD of the server. This structure keeps the lockless feature and increases IO bandwidth. An IO unit taken by a request carries a list of server sessions which record the index of occupying bit in the bitmap.

Debugging¶

Even though the design idea seems simple, the implementation is not trivial to get correct. The debugging phase is broken into three parts. The first part is the bugs that are easy to fix. Those are errors you can inspect from stack traces and diagnose by eyes. For example, the reference count is not reduced properly. The second part is race conditions without stack traces or with traces which tell little information. The third part is issues rising when running regression tests, which are mostly about missing logic.

I’ll mainly talk through the race condition with this new QD structure. The difficulty of the second part is implicit errors and no clues shown. How event to debug a case where the server just crashes after some time? Likewise, what’s peculiar at first was that fio failed or stalled after running concurrent IOs, showing error below:

1 | fio: pid=728 disappeared 5 |

Invalid Attempts¶

There were some attempts to find the fault which went in vain. For example, run small IOs with dd, run fio multiple times with different ioqueuedepth and numjobs, or run the same fio command without my code to observe the difference. I also instrumented IO requests a lot but didn’t find a valid fix. KASAN did help to generate a trace on rdma_rxe layer, however, which turned out to be unrelated to my code. It’s not guaranteed that the kernel traces show errors at the right place of faults. And it could happen that a fault triggers a chain of effects, displaying errors elsewhere which are far away from the fault. Irrelevant information are given constantly. It’s up to the engineer to decide the right track to go along with.

It’s possible that your code masks an error instead of fixing the real error, especially in race conditions. The new code may slow down the related process and hide the error which fails only in high concurrency scenarios.

Valid Investigations¶

At last, I found the issue was a race condition on IO requests or missed server responses. It struck me that bitmaps could be dumped to observe in-flight IO usage. It helped me to figure out that IOs got stalled when there were no free slots in the bitmap. Running fio jobs left some dirty bits in the bitmap sometimes, which was either caused by a race, uncompleted IOs on the server side or failed responses from servers for completed IOs.

With a clear direction to investigate, the root cause of io stalls was finally deduced to be at the list of server sessions for an IO unit. First, the possibility of server responses or uncompleted IOs on the server side were eliminated. By instrumenting at the submission and completion path on both the client and the server side, it’s found IOs were completed on the server side. However, the client didn’t receive all responses, meaning some IO units were not released. During releasing IO units, the main IO unit was the first to clear its bit, reinitializing the server session list to empty. That led to uncleared bit of the rest of server sessions in the list.

1 | $ grep "got iu" qdlog_v7 | wc -l |

It’s important to keep mind refreshed. Sleep is usually better than staring at the screen all day long. The third part was easier than the race condition but still exhausting. At the very end, I did spot the fault but created new subtle bug while changing the code…

Performance Evaluation¶

Under given configurations for a many-job fio command, it turned out to be faster than the previous design, around 0-10%. Case closed.

Taken from Linux v6.1.118. ↩︎