Distributed File Systems Notes

This note only briefly covers disk array systems, including some points I think are interesting.

Multi-disk systems

Terms:

- Disk striping: data is interleaved across multiple disks.

- To measure how often one disk fails: MTBF_disk (Mean Time Between Failures) = sum(t_down - t_up) / # of failures

- MTBF in a multi-disk system: MTBF_MDS = mean time to first disk failure = sum(t_down - t_up) / (disks * # of failures per disk) = MTBF_disk / disks

- Recall that RAID 5 distributes the parity over N+1 disks, RAID 6 over N+2, and so on for N+3. Not elaborated here.
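As a quick worked example of the MTBF_MDS formula, the sketch below plugs in a per-disk MTBF of 1,000,000 hours. That figure is a hypothetical value chosen only for illustration, and the function name `mtbf_multi_disk` is made up for this note.

```python
HOURS_PER_YEAR = 24 * 365

def mtbf_multi_disk(mtbf_disk_hours: float, disks: int) -> float:
    """Mean time to first disk failure: MTBF_MDS = MTBF_disk / disks."""
    if disks < 1:
        raise ValueError("need at least one disk")
    return mtbf_disk_hours / disks

# Hypothetical per-disk MTBF, for illustration only.
MTBF_DISK_HOURS = 1_000_000

for n in (1, 10, 100, 1000):
    hours = mtbf_multi_disk(MTBF_DISK_HOURS, n)
    print(f"{n:5d} disks: first failure expected after about {hours / HOURS_PER_YEAR:.2f} years")
```

The point of the arithmetic is that the array as a whole fails far more often than any single disk, which is why redundancy matters as the disk count grows.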

We want a reliable storage system with large capacity and good performance. Multi-disk systems are designed for these reasons.

Solution: RAID, redundant array of independent disks

Problem 1: Load balancing: bandwidth (MB/second) and throughput (IOs/second).

The problem is that some data is more popular than other data, which is described as load imbalance. I/O requests are almost never evenly distributed; they depend on the applications, usage, and time. It’s hard to statically partition data so that I/O requests are evenly distributed, because the hot data changes over time.

Solution 1.1: stream data from multiple disks in parallel. To deal with hot data, find the right data placement policy

- simple approach: fixed data placement: Data x goes to y.

- better approach: dynamic data placement. Label popular files as

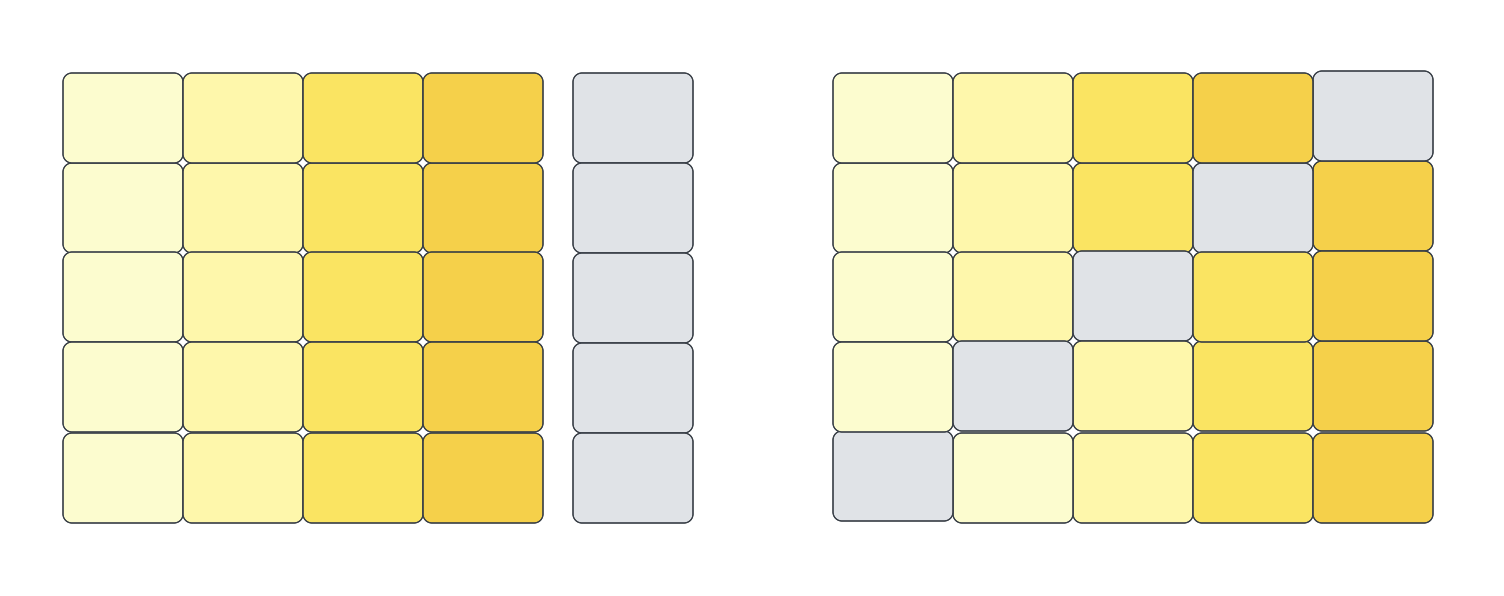

hot, separated across multiple disks. - practical approach: disk striping, to distribute chunks of data across multiple disks for large files (relative to stripe unit), good for high-throughput requests. Hot file blocks get spread uniformly.

The key design decision is picking the stripe unit size. To assist alignment, choose a multiple of the file system block size. If the unit is too small, even small files are spread across all disks: extra effort for little benefit. If it is too large, you pay the cost of striping but get no parallel transfers.
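To make the stripe unit trade-off concrete, here is a minimal sketch of round-robin striping. It assumes the simplest layout (unit i goes to disk i mod N, no parity); the function names `locate` and `disks_touched` are made up for this note.

```python
def locate(byte_offset: int, stripe_unit: int, num_disks: int) -> tuple[int, int]:
    """Map a logical byte offset to (disk index, byte offset on that disk)."""
    unit_index = byte_offset // stripe_unit   # which stripe unit overall
    disk = unit_index % num_disks             # round-robin across disks
    stripe_row = unit_index // num_disks      # full stripes that come before it
    return disk, stripe_row * stripe_unit + byte_offset % stripe_unit

def disks_touched(offset: int, length: int, stripe_unit: int, num_disks: int) -> set[int]:
    """Disks that a request for bytes [offset, offset + length) has to visit."""
    first = offset // stripe_unit
    last = (offset + length - 1) // stripe_unit
    return {unit % num_disks for unit in range(first, last + 1)}

# A 16 KiB read with a 4 KiB stripe unit on 4 disks uses all 4 disks in parallel.
print(disks_touched(0, 16 * 1024, 4 * 1024, 4))
# The same read with a 64 KiB stripe unit stays on one disk: no parallel transfer.
print(disks_touched(0, 16 * 1024, 64 * 1024, 4))
```

With a very small unit, `disks_touched` returns every disk even for tiny requests, which is the "extra effort for little benefit" case.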

Solution 1.2: concurrent requests to multiple disks

Problem 2: Fault tolerance: tolerate partial and full disk failures

Solution 2.1: store data copies across different disks

In an independent-disk system, all file systems on the failed disk are lost; in a striped system, the part of each file system residing on the failed disk is lost. Backups can introduce new problems, such as performance issues and more complicated storage provisioning. Backup scheduling is also difficult.

Disk failures in a multi-disk system happen. HDDs fail often (1-13%); SSDs are more reliable (1-3%).

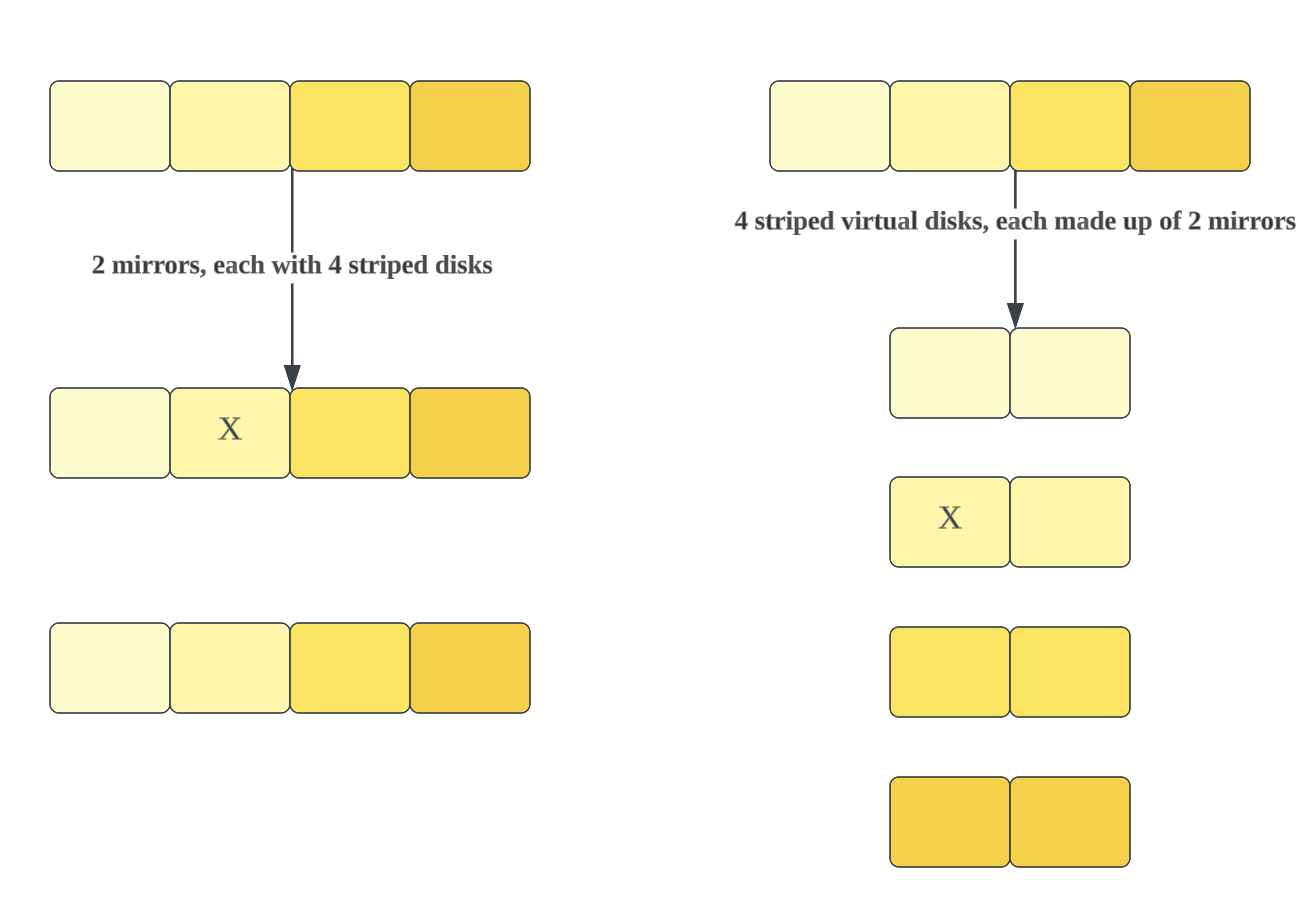

Solution 2.1.1: Replication. Combine mirroring (replicated block device) and striping to find a balance between reliability and performance.

- the ways of combining them differ (for example, mirroring striped sets versus striping mirrored pairs)

Solution 2.1.2: Error-correcting codes via parity disks. Updates rely on read-modify-write of the parity, and the parity is what allows the contents of a failed disk to be rebuilt.

- striping!
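The parity idea can be sketched with plain XOR. This is a toy, assuming a single XOR parity block over three data blocks; the helper names are made up for this note and the block contents are arbitrary.

```python
from functools import reduce

def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def small_write(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """Read-modify-write: new parity = old parity XOR old data XOR new data."""
    return xor_blocks(old_parity, old_data, new_data)

data = [b"AAAA", b"BBBB", b"CCCC"]   # one block per data disk
parity = xor_blocks(*data)           # stored on the parity disk

# Disk 1 fails: rebuild its block from the surviving disks plus parity.
rebuilt = xor_blocks(data[0], data[2], parity)

# Update disk 0 without reading disks 1 and 2.
new_block = b"ZZZZ"
parity = small_write(data[0], parity, new_block)
data[0] = new_block
```

The small write touches only the target disk and the parity disk (two reads, two writes), rather than re-reading the whole stripe.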

Distributed File Systems

A file system or database is just a layer of abstraction on top of block devices that provides services to many applications. A distributed file system shares data among users and among computers, with easier provisioning and management.

A DFS has jobs of its own, such as pathname lookup. The client mounts a file system from the server. When the client looks up a file, it performs the lookups one path component at a time (1 RPC per directory), which can take a long time. The server does not know about the client’s mount points.
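The per-component lookup cost can be illustrated with a toy model. Everything here is hypothetical: `FakeServer` stands in for a remote server, nested dicts stand in for directories, and each `lookup` call stands in for one RPC.

```python
class FakeServer:
    """Stand-in for a remote file server; counts lookup RPCs."""

    def __init__(self, tree: dict):
        self.tree = tree
        self.rpc_count = 0

    def lookup(self, dir_handle: dict, name: str):
        """One RPC: resolve a single name inside one directory."""
        self.rpc_count += 1
        return dir_handle[name]   # raises KeyError if the name does not exist

def resolve(server: FakeServer, root_handle: dict, path: str):
    """Client-side walk: one lookup per path component."""
    handle = root_handle
    for component in path.strip("/").split("/"):
        handle = server.lookup(handle, component)
    return handle

server = FakeServer({"home": {"alice": {"notes.txt": "file-handle-42"}}})
result = resolve(server, server.tree, "/home/alice/notes.txt")
print(result, "after", server.rpc_count, "RPCs")
```

A path with k components costs k round trips in this model, which is why deep directory trees make lookups slow.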

Design:

- server-client structure: a separate server providing base of fs and applications running on client machines use this base.

- how to partition fs functionality? Performance, complexity of system, security, semantics, sharing of data, administration…

request/reply: a procedure call (read/write descriptor, parameters, a pointer to a buffer, implicit info).

- client marshals the parameters into a message

- message is sent to the server, unmarshalled, and processed as a local op

- server marshals the op result and sends it back to the client

- client unmarshals the message and passes the result to the calling process
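The four request/reply steps can be sketched as follows. This is an illustration only: JSON is used as the wire format for readability (real file system RPCs use binary encodings), the network is replaced by a direct function call, and the names `rpc_read`, `server_handle`, and `FILES` are made up for this note.

```python
import json

def marshal(obj: dict) -> bytes:
    return json.dumps(obj).encode("utf-8")

def unmarshal(message: bytes) -> dict:
    return json.loads(message.decode("utf-8"))

# Toy server state: descriptor -> file contents.
FILES = {3: b"hello, distributed world"}

def server_handle(message: bytes) -> bytes:
    """Server side: unmarshal, run as a local op, marshal the result."""
    request = unmarshal(message)
    if request["op"] == "read":
        start = request["offset"]
        data = FILES[request["fd"]][start:start + request["count"]]
        result = {"status": "ok", "data": data.decode("utf-8")}
    else:
        result = {"status": "error", "reason": "unknown op"}
    return marshal(result)

def rpc_read(fd: int, offset: int, count: int, transport=server_handle) -> bytes:
    """Client stub: looks like a local read() to the calling process."""
    request = marshal({"op": "read", "fd": fd, "offset": offset, "count": count})
    reply = unmarshal(transport(request))   # stands in for send + receive
    if reply["status"] != "ok":
        raise OSError(reply.get("reason", "rpc failed"))
    return reply["data"].encode("utf-8")

print(rpc_read(3, 7, 11))
```

The calling process only sees `rpc_read`; the marshalling and the transport are hidden inside the stub.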

Approach 1: server does everything (partition)

- Performance of a server and network can bottleneck clients.

- Memory state must be maintained for each client app session.

Performance tuning (d)

client-side caches

design decision 1: cache coherence, a consistent view across set of client caches

design decision 2: choose consistency model -> in what order to persist data

- Unix (Sprite, a new network file system which made heavy use of local client-side caching), 1984, all reads see most recent write

- Original HTTP: all reads see last read, no consistency

implementation: who does what, when

Approach 2: Sprite, caching + server control

- Server tells client whether caching of the file is allowed.

- Server calls back to clients to disable caching as needed except for mapped files.

- Client tells the server about its operations on files.

Approach 3: AFS v2, caching + callbacks; all reads see the latest closed version.

- clients get a copy of the file and a callback from the server; the server always calls back if the file changes

- the entire file is written back only upon close.

- updates are propagated by the server revoking the callbacks of the other clients (race on close)
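A minimal in-memory sketch of whole-file caching with callbacks follows. It is a simplification, not the AFS protocol: the class names `AfsServer` and `AfsClient` are made up for this note, there is no network, and the close race mentioned above is not modelled.

```python
class AfsServer:
    def __init__(self):
        self.files = {}       # name -> contents
        self.callbacks = {}   # name -> set of clients holding a callback

    def fetch(self, client, name):
        """Hand out a whole-file copy and register a callback promise."""
        self.callbacks.setdefault(name, set()).add(client)
        return self.files.get(name, "")

    def store(self, writer, name, contents):
        """Called on close: save the file, revoke everyone else's callback."""
        self.files[name] = contents
        for client in self.callbacks.get(name, set()) - {writer}:
            client.revoke(name)
        self.callbacks[name] = {writer}

class AfsClient:
    def __init__(self, server):
        self.server = server
        self.cache = {}       # name -> cached contents with a valid callback

    def open(self, name):
        if name not in self.cache:            # no valid callback: fetch again
            self.cache[name] = self.server.fetch(self, name)
        return self.cache[name]

    def close(self, name, new_contents=None):
        if new_contents is not None:          # write back the entire file
            self.cache[name] = new_contents
            self.server.store(self, name, new_contents)

    def revoke(self, name):
        self.cache.pop(name, None)

server = AfsServer()
server.files["f"] = "v1"
a, b = AfsClient(server), AfsClient(server)
a.open("f"); b.open("f")       # both cache v1
a.close("f", "v2")             # b's callback is revoked here
print(b.open("f"))             # b refetches and sees the latest closed version
```

Reads that hit a cached copy with a valid callback never contact the server, which is where the performance win comes from.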

Approach 4: NFS v3, stateless caching; Other clients’ writes visible within 30 seconds.

Stateless here means the server keeps no per-client state and therefore makes no guarantees to clients.
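The 30-second visibility window can be sketched as a client cache with a time-to-live. This is an assumption-laden toy: a version counter stands in for file attributes such as modification time, the clock is injected so the example runs instantly, and the class names are made up for this note.

```python
class StatelessServer:
    """Stores files only; remembers nothing about clients."""

    def __init__(self):
        self.files = {}   # name -> (version, data)

    def write(self, name, data):
        version = self.files.get(name, (0, b""))[0] + 1
        self.files[name] = (version, data)

    def getattr(self, name):
        return self.files[name][0]

    def read(self, name):
        return self.files[name]

class CachingClient:
    def __init__(self, server, clock, ttl=30.0):
        self.server, self.clock, self.ttl = server, clock, ttl
        self.cache = {}   # name -> (version, data, time last validated)

    def read(self, name):
        now = self.clock()
        entry = self.cache.get(name)
        if entry and now - entry[2] < self.ttl:
            return entry[1]                          # trusted, possibly stale
        if entry and self.server.getattr(name) == entry[0]:
            self.cache[name] = (entry[0], entry[1], now)   # revalidated
            return entry[1]
        version, data = self.server.read(name)       # refetch
        self.cache[name] = (version, data, now)
        return data

now = [0.0]                                  # fake clock, in seconds
server = StatelessServer()
server.write("f", b"old")
reader = CachingClient(server, clock=lambda: now[0])
first = reader.read("f")
server.write("f", b"new")                    # another client's write
now[0] = 10.0
stale = reader.read("f")                     # within the TTL: still sees old data
now[0] = 31.0
fresh = reader.read("f")                     # TTL expired: revalidates and refetches
```

The server never tracks who has cached what; staleness is bounded only by the client's own timer.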

design decision 3: Servers keep state about clients or not.

stateless servers: simple -> faster in a way, easy to recover, no problem with running out of state resources

Vs. stateful servers: long-lived connections to clients -> faster in a way, easy for state checking

File handles (fs)

Terms:

- opaque means a structure’s contents are unknown

- NAS, network-attached storage. It’s a dedicated file server.

A file handle, like a file descriptor, is an easier way to represent and handle a file.

In a local fs, open returns a file descriptor. Applications no longer need to use the file name to refer to the file.

In NFS with a stateless server, open creates a file handle and the server passes it back to the client. The whole process is as follows:

- the server creates a file handle and passes it back to the client

- the client passes the file handle (an opaque struct) to the server whenever it wants to access the file
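Here is a minimal sketch of why an opaque handle suits a stateless server: the handle itself carries everything the server needs to find the file again. The layout (file system id, inode number, generation) and the names `HandleServer` and `HANDLE_FORMAT` are assumptions for illustration, not the actual NFS wire format.

```python
import struct

# Hypothetical layout: file system id, inode number, generation number.
HANDLE_FORMAT = "!IQI"

class HandleServer:
    def __init__(self):
        # Toy stand-in for on-disk state.
        self.names = {"notes.txt": (1, 1001, 7)}
        self.by_inode = {(1, 1001, 7): b"file contents"}

    def lookup(self, name: str) -> bytes:
        """Create a handle for a name and return it to the client."""
        return struct.pack(HANDLE_FORMAT, *self.names[name])

    def read(self, handle: bytes) -> bytes:
        """Decode the handle; no per-client table is consulted."""
        key = struct.unpack(HANDLE_FORMAT, handle)
        if key not in self.by_inode:
            raise OSError("stale file handle")
        return self.by_inode[key]

server = HandleServer()
handle = server.lookup("notes.txt")   # the client stores these bytes uninterpreted
data = server.read(handle)            # and presents them on every access
print(len(handle), data)
```

Because the server can decode any handle it is given, it can restart and keep serving requests without rebuilding an open-file table.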

Execution models

Terms:

- IOFSL, I/O forwarding scalability layer

- processes: creating a process requires an entirely new address space. Switching the CPU from one process to another means switching address spaces, with the hard-to-measure cost of TLB misses…

- threads: a thread is a thread of execution. Multiple threads share one address space, which reduces OS time for creation and context switches (10,000/machine). -> gdb level

- events/tasks: lighter than threads (1,000,000/machine). Tasks are hard to code and debug.
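To give a feel for how cheap event-style tasks are, the sketch below starts 10,000 of them in a single thread using Python's `asyncio`. The handler is a placeholder (the `sleep(0)` just yields to the event loop where a real server would wait on I/O), and the names `handle_request` and `serve` are made up for this note.

```python
import asyncio

async def handle_request(request_id: int) -> int:
    await asyncio.sleep(0)     # yield to the event loop, as an I/O wait would
    return request_id * 2      # placeholder for real request processing

async def serve(n: int) -> list[int]:
    # Each task is a small heap object, not an OS thread with its own stack.
    tasks = [asyncio.create_task(handle_request(i)) for i in range(n)]
    return await asyncio.gather(*tasks)

results = asyncio.run(serve(10_000))
print(len(results), "requests handled in one thread")
```

The cost shows up elsewhere: control flow is split across `await` points, which is part of why task-based code is harder to write and debug than threaded code.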

Example:

IOFSL for supercomputers. An I/O forwarding layer is introduced to handle the large number of concurrent client requests. All I/O requests go through dedicated I/O forwarders.

Problem: large requests -> Out-of-order IO pipelining; small requests -> IO request scheduler.

AFS with FUSE.

Multi-server Storage

Terms:

- LAN, local area network; SAN, storage area network; NASD, network attached storage device; pNFS, parallel NFS

Problem 3: how to partition functionality across servers and why

do the same tasks on each server for different data <----> do different tasks on each server for the same data

common issues:

- find the right server for given task/data

- balance load across servers

- excessive inter-server communication

conflicting design issues: we want to keep servers independent, yet still gain something from the multi-server structure and keep it easy to use

Approach 1: same functions, different data

- Good for load distribution and fault tolerance.

Problem 3.1 how is client-side namespace constructed

- NFS v3, each client mounts server file systems wherever it chooses, so there are many different namespaces across the clients.

- AFS, a set of servers provides a single namespace. Each client has a link to the global directory hierarchy.

Problem 3.2 Given file name, how to find the server having the file

- NFS v3, a client traverses its namespace and mount points, then contacts the server that holds the file (the unit is a directory sub-tree)

- AFS, the same, except that the unit is a volume
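The NFS-style client-side resolution can be sketched as a longest-prefix match against the client's mount table. The mount table contents, server names, and the function name `find_server` are hypothetical.

```python
def find_server(path: str, mount_table: dict[str, str]) -> tuple[str, str]:
    """Return (server export, path relative to the mount point).

    Picks the longest mount point that is a prefix of the path, so a mount
    nested inside another mount wins.
    """
    best = None
    for mount_point in mount_table:
        prefix = mount_point.rstrip("/") + "/"
        if (path + "/").startswith(prefix):
            if best is None or len(mount_point) > len(best):
                best = mount_point
    if best is None:
        raise FileNotFoundError(f"{path} is not under any mount point")
    relative = path[len(best.rstrip("/")):].lstrip("/")
    return mount_table[best], relative

# Hypothetical mount table configured by an admin on one client.
MOUNTS = {
    "/home": "server-a:/export/home",
    "/home/projects": "server-b:/export/projects",
}

print(find_server("/home/alice/x", MOUNTS))
print(find_server("/home/projects/dfs/notes", MOUNTS))
```

Since the table lives on each client, two clients with different tables can see the same server directory under different names, which is the "many namespaces" property noted above.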

Problem 3.3 Load balancing

- NFS v3, manual: system admin can assign file sub-trees to servers and configure mount points on clients.

- AFS, manual: admin moves volumes among servers

Approach 2: different functions, same data

- Good for flexibility and bottleneck avoidance. Less common, often used with approach 1.

- E.g., pNFS for NASD by EMC

Problem 4: concurrent access to shared state

This section is skipped because it has already been discussed in the distributed systems blogs.