File Systems At a Glance

This blog is the third part of NVMe ZNS emulation. We will explore cluster storage and all the things behind.

FS storage layout¶

Readings:

Chap 8 of Unix Internals, Vahalia 1995;

A Solution to the Metadata Update Problem in File systems, Ganger 2000

Chap 7 of Practical File System Design, Giampaolo 1998

Problem 1: mapping raw data into files converts into mapping fs structures and data to LBNs

Need a structure to keep track of available space (granularity: blocks). And to choose which free block.

Solution 1:

- Free list: Linked list of free blocks -> simple but performance issues

- Bitmap (common): large array of bits, one per allocatable unit. Two state: free or in use -> better lookup

- Free extent structure (s.t. better): extent is a contiguous range of free blocks -> better management but fragmentation issues

E.g. Fs storage layout for HDD: FFS 1984[1] has advantages on locality and larger transfer size over pre-FFS fs world. Allocation groups are inode and the first data blocks in the same cylinder group, which is also used in modern FSs like xfs 1994, ext*.

Larger transfer size is meant to amortizing overall positioning costs.

- larger fs block size

- allocate contiguous blocks

- prefetch: fetch more data than requested. Read-ahead is for sequential access. When paying positioning cost once, the trade-off is that a large request can delay requests of normal size. -> heavy streaming of sequential I/O (locality helps)

Small (<= 8kb, 80%) files are fragmented in real world. And it leads to small I/Os caused by independence of files and levels of indirection.

Problem 2: small files lead to small I/Os. Need to increase locality and transfer size for small files

Solution 2: group small files and metadata for larger I/Os.

How to group?

- Co-locate?

- Over aggressiveness costs little?

Locality and large transfer size brings performance improvement to fs. The relation between them is prefetching and write-back caching. We don’t want too many random writes. The idea behind all those optimization, I think, is to reduce random access of local file systems. That’s why we care about cache, fetching data, transferring, etc.

+Locality:

- delay in cache for r/w

- disk req scheduling for allocation

- write near the disk head (hardly used)

+large transfer

- re-allocation (move data around)

- pre-allocation (allocate more than you need now)

- extents-like structure as a replacement for block lists and bitmaps (beware of long small-extent lists hiding out)

Log-structured FS¶

-> amortization, large write

- Data and metadata are written sequentially to a circular buffer called log

- shadow paging: write a segment to replace several older smaller blocks and reclaim space. The idea is to increase empty blocks. Its cost may more expensive than benefit gained.

- It follows Amdahl’s law. Speedup is limited to fraction improved.

- cleaning: segment cleaning starts when there are blanks between blocks. Read entire segment for amortization and move live blocks from segment being cleaned.

- know which blocks are live -> segment summary

- know whether block is still live -> inode map, inodes

- do cleaning in background unless running out of space

- Most modern FTLs do LFS-liek remapping into SSD blocks

- F2FS is a LFS-like FS for flash

- set segment size = SSD block size

- separate hot/cold data: Hot/warm/cold. Set criteria for data according to its usage (how likely to be overwritten)

Log-structured Merge Trees¶

data structure view: (memory buffer -> disk)

- insert: buffer and sort recent inserts in memory; write out into local fs sequentially; Less random disk writes than b-tree.

- lookup: search sorted tables one by one -> compaction: merge sort into new files, deleting old (cleaning as LFS); Bloom-filter and in-memory index to reduce lookups.

E.g. TableFS (FUSE) packs metadata into LSM trees

- Design: small objects (<= 4kB) are embedded into LSM tree, turning into one large object (~2MB). Large files are stored in object store indexed by tablefs assigned id number.

- LSM tree is a tabular structure with (key, value). It co-locates directory entries with inode attr and small files to reduce random lookups. Readdir performs sequential scan on the table.

- FUSE is a fs in user space. It presents user VFS to the kernel[2].

- LevelDB is a key-value database using LSM tree to supports its operations like put, delete, get, scan.

Co-locating FFS¶

It allows large transfers by co-locating related metadata and data objs and r/w to obj groups. Files are placed based on name locality, which leads to multiple files per disk I/O.

FS organization¶

Vahalia1995 ch8: P255

-

vnode layer: this layer is for having multiple fs at once. It adds a level of indirection as virtual fs layer. Everything in kernel interacts with fs via a virtualized layer of functions. It can also supprot non-local FSs like NFS, FAT fs,

user -> open file object -> vnode_structure -

dir (inode IDs (file name tranlating to), record len, length of name, file name) is a special file with entries formatted. Sets of entries are organized in block-sized chunks.

-

mount/unmount attach/detach one FS into the namespace.

-

a root file system

/ -

multiple FSs on one disk. Disk capacity is divided into multiple “partitions”. To track such structure, keep a partition map which has (offset, len) (in logical blocks). Commonly, device drivers handle the partition map (FS req -> partition).

-

a symbolic link contains a text string as a path to another file or directory

-

Data is declared to be set of records, each a set of fields. Index is a map of key values to offsets in the file. Libarary API in databases allows access by key value or range of values.

Data organization¶

The problem of disk organization and naming is how to find certain entry in many environments with TBs of data.

- directory and file structure. Traverse the directory hierairchy by the

.and..entries. -> good for moderate-sized data sets - managing namespace: mount/unmount. Starting with a root file system, mounted fs is attached into this namespace.

Difficulty with dir hierarchies:

- difficult to scale to large size

- # of entries in a dir grows too large -> partition into subdirectories again

- one data object fits into many places -> different file names for the same data object/put symbolic link to a file

Naming in two respectives, direct/indirect, absolute/relative. Files are accessed by attributes. Attributes are external information to describe a file, like last modified by, created by, file type etc.

Open/read/write¶

1 | fd = open("foo/bar", RO); |

Open:

- translate file name to inode:

vnode_lookup, use dir lookup cache first for each lookup - create a vnode for inode

- create a open file struct

- fill in fd

- return index into fd table

Read:

- index into fd table to get a open a open file struct -> get vnode

vnode_read(vnode, offset, buffer, size)-> normal read req- find vnode’s buffer (offset) in buffer cache

- if buffer is invalid,

vnode_getbuffills it. It requests device driver to fill buffer with data. - copy data from cached buffer to user space buffer -> advance offset by len of copied data in file obj

- repeat until size reached

- return -> update open file obj’s offset

Write op handles buffers differently from read op. If entire buffer will be overwritten by write, the buffer is marked as valid. When the write copies data to cache buffer, it will also mark buffer dirty. After returning, it leaves buffers as dirty in buffer cache.

To map file offset to disk LBNs, simple solution is to use start location and a contiguous LBNs to keep entire file. Using a next pointer in each block allows a flexible way to store a file. The most common approach is to use block lists. Block lists are an array with one LBN per block in the file.

Access methods¶

Unix files are generally flat which is a stream of bytes. Databese files are generally indexed with b-tree of records.

Hash table: O(1) lookup with poor range query. B-tree: O(lgN) lookup with efficient range query.

FS integrity¶

- cache benefit = time without cache/time with cache

- average = hit time + (average miss time * miss probability)

- write-back caching: the processor writes data to its local cache firsts before writing that cached data to memory or disk.

- ECC is Error-Correcting Code

- fsync: sync everything associated with that file.

Disk block caching¶

Latency to fetch data or perform I/O on disk from when I/Os are sent from application (on CPUs) can be 5-50 ms, 5-500 times of accessing disk block cache (0.1-1.0 ms) from the same starting place. We need to hide storage latencies or mortize the average response time.

Problem 1: how to avoid cache misses?

Miss Ratio dominates the avarage access time computation than hit ratio. A cache miss takes much slower than a cache hit. It can be seen in the avarage equation.

Cache misses scenarios:

- among reads: read for which not all data is in cache. Have to go to disk and the reader has to wait. Prefetches go to disk too. Though it do that in the background.

- among writes: writes put new (block-aligned) data into cache and move one. Writers don’t wait unless no cache space is available for new data or it must read existing block because only part set of data being written to disk.

Internal consistency¶

Problem 2: Challenges to internal consistensy

- concurrent modifications: two processes induce race conditions -> concurrency control

- media defect growth: contents of newly-defective sectors are lost -> redundancy

- transient storage subsystem bug: flipped bits on bus etc -> integrity checks + redundancy

- system crashes are unpredictable and can happen at any time: volatile main memory contents lost. On-disk img must be sufficiently consistent for this type of failures. Only persistent storage is there upon restart. Clean-up must be done after system failures.

Tools for protecting consistency:

-

static mapping: if they do not change, they do not cause problems

-

atomicity of writes: checksum or per-server ECC provides per-sector with unwritten guarantee by FS/app. -> good for grouping inter-related updates, like directory chunks and inodes

Problem 2.1: data is lost when write is partially completed

-

update ordering: ensure that one update propagates before another -> good for single-direction dependencies

Problem 2.2: not good for bidirectional dependencies (most of them in fs)

Solution 2.2: convert some of them to single direction giving importance of direction.

-

atomicity: ensure that a set of updates all occur or none do

Purpose of update ordering: provide integrity of metadata pointers in face of system failures

Basic rules are like rules of programming with pointers. Allocate before use, must free when deallocating, set new pointer before free when moving. In pointer world, freeing means nullifying. Something always left dangling if there is a badly-timed crash.

Crash recovery¶

Tradional way examines entire contents by walking entire dir hierarchy and each file’s block list, indentify and rebuild free space/inode bitmaps. It is exhausting.

Problem 2.3: clever way of crash recovery

Solution 2.3.1: update ordering

- synchronous writes: wait for one write to complete before proceeding -> huge performance overhead

- soft updates: write-back caching for all (non-fsync) updates -> good for delayed writes

Solution 2.3.2: multi-write atomicity. Always present safe version.

- write-ahead logging (WAL, or journaling): changes are kept in a log first.

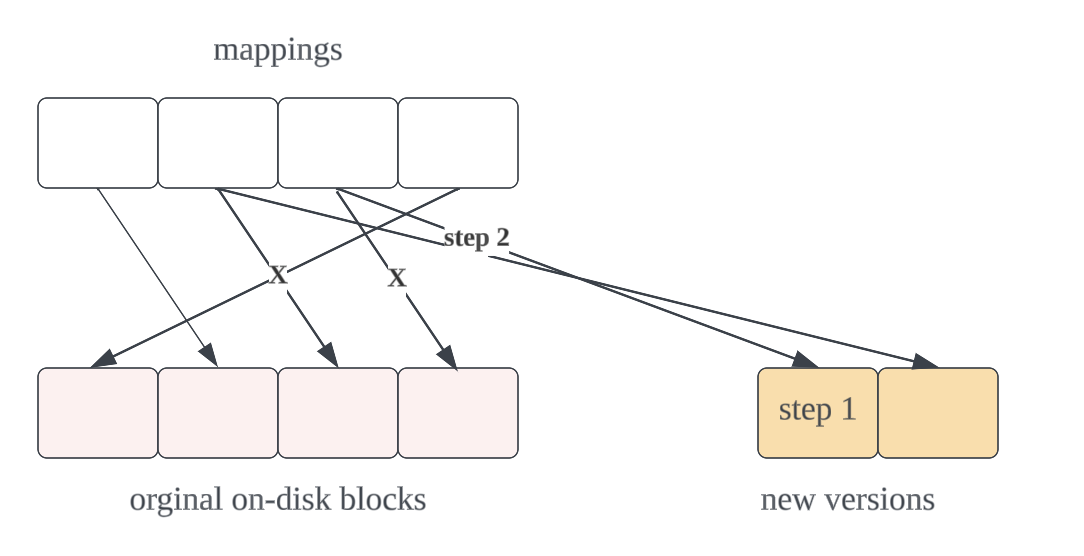

- shadow paging[3]: New versions of data blocks written to new locations. Write changes to alternate location and replace previous.

Need to revisit P240[4].

https://afterhoursacademic.medium.com/a-fast-file-system-for-unix-15117fb7425b ↩︎

https://stackoverflow.com/questions/2910229/what-is-a-virtual-file-system-or-a-file-system-in-userspace ↩︎

https://stackoverflow.com/questions/55223741/understanding-shadow-paging-and-how-it-differs-from-journaling-file-systems ↩︎