From Virtualization to Virtual Machines

chapter 5 Enforcing Modularity with Virtualization

Problem: how to build a virtual computer? how to build as many computers as we want for running the desired modules?

Model: client and service modules connect through network

- bounded buffers -> communication linkes

- virtual memory -> memory

- thread -> processors

Client/server organization¶

-

modularity/isolation offers

- reduce errors propagating

- security, fault tolerance(geo-distributed)

-

virtualization methods

- many VM -> Multiplexing one physical instance

- one VM -> Emulation: preserve existing interfaces

- one big VM -> Aggregation: provide with many physical instance

-

virtualize computers

- thread of execution: data, text, heap, stack -> thread manager in the context of os(processors)

- interrupt -> interrupt handler in the context of os (processors, if one processor is processing an interrupt already, next interrupt may interrupt another processor)

- exception -> exception handler in the context of the interrupted thread

-

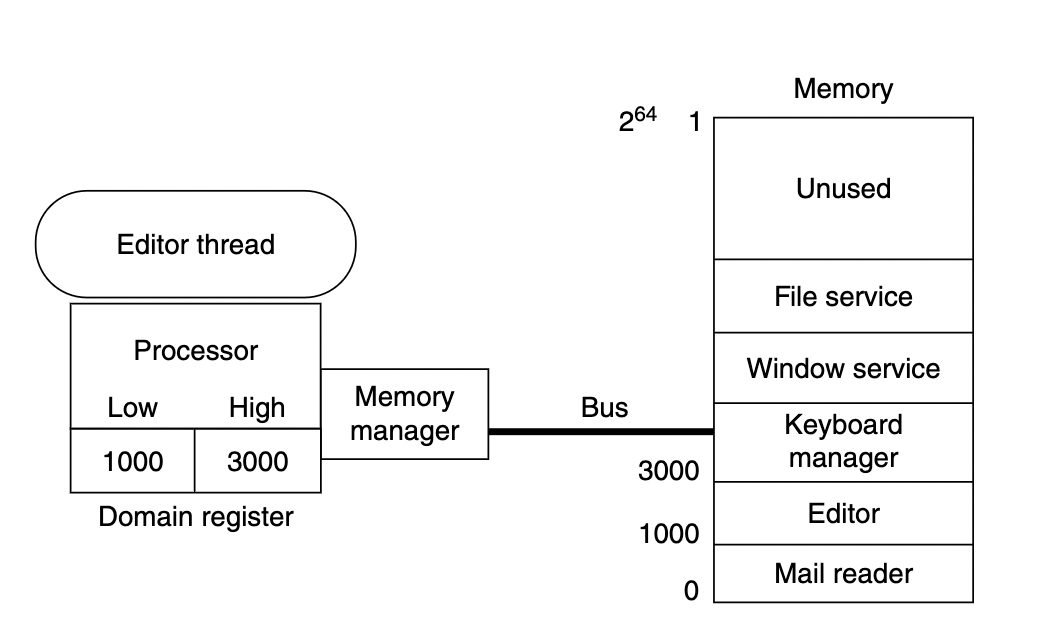

Processors share the same physical memory. -> controlled sharing is required -> virtual memory manager(a hw gadget) in the context of os

-

why emulation costs a lot?

steps to interrept the instructions of the emulated machine: decode the simulated instructions -> perform the operations -> update the state of the simulated processors

-> result to *10 overhead

-> method: virtual machines: use M physical processors to emulation M virtual objects

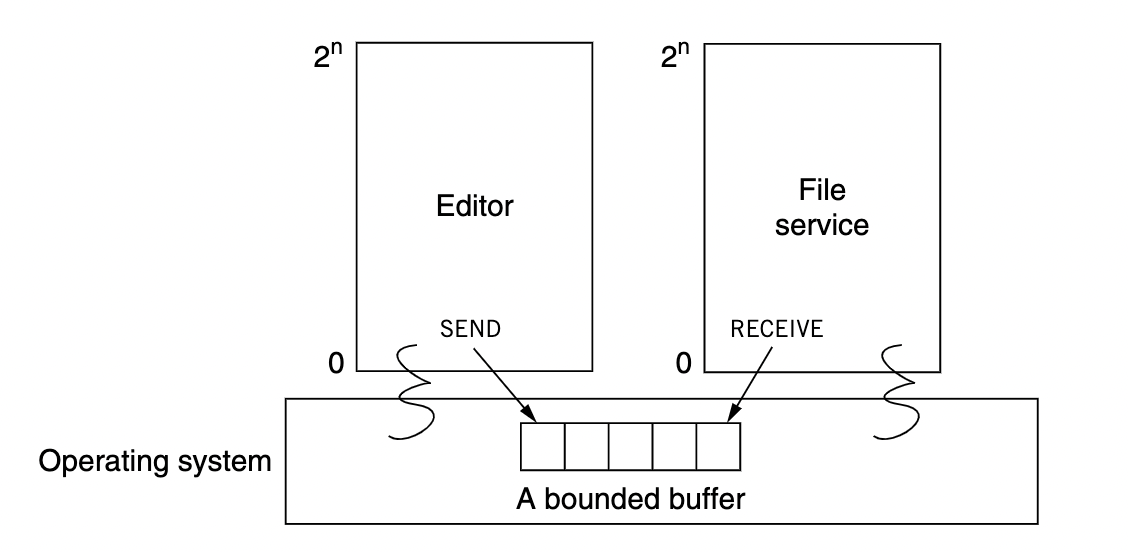

Ex: Editor want to send ‘x’ to File service to store.

Virtual links¶

Scenario : how to share a bounded buffer between SENDER and RECEIVER?

Fact: all threads share the same physical memory

problem: sequence coordination, one event in thread 1 must precede an event in another thread

If the bounded buffer is a fixed array N, and there are only one sender, one receiver, then coordinations are easy to handle:

by following this rule:

- if room = (IN - OUT) < N, sender can put more messages to the buffer

- if IN > OUT, receiver can consume more messages from the buffer

otherwise, it loops until conditions are satisfied, which is called spin loops(loops in which a thread is waiting for an event without giving up its processor).

six assumptions to guarantee the correctness of the program:

- one writer principle

- Spin loops require one processor for thread

- data overflow -> Integers of width 64 or 96 bits/ in/out modulo N

- Read/write coherence: the shared memory should make sure LOAD variable from right thread

- Before-or-after atomicity of multistep LOAD and STORE sequence

- fact: a 64-bit or 96-bit integer require multiple memory cells

- update in/out will require multiple LOAD/STORE

- result of executions is visible to other threads

- fact: out-of-order, an optimizing processor or compiler reorders statements to achieve better performance

Coordinations will get complicated when one-writer-principle breaks when:

- More than one sender/receiver(multiple sending threads, receiving threads) -> break spin loops’ assumption

- the bounded buffer is implemented in a different way. Like linked list where the sender and receriver can update a shared variable at the same time.

Then, these updates need to be coordinated.

coordination of concurrent activities¶

Concurrent programming needs the attention of specialists: all it takes is one subtle change to make a correct program wrong.

when sequence coordinations go wrong?

by removing above assumptions one by one:

-

race condition: it depends on the exact timing of two threads. The results caused by different scenarios are the same, which is that a particular ordering of the instructions of the threads disrupts the correctness of the program. In our case, it can be ranged from multiple senders to updating long integers required two instructions.

-

before-and-after actions: introduce locks

The before-and-after atomicity has been realised in many contexts. We should know they describe the same things when using different names. For example, atomicity/atomic actions, isolation/isolated actions, mutual exclusion/critical sections. The idea behind this is to find the race conditions and to avoid it in our systems.

-

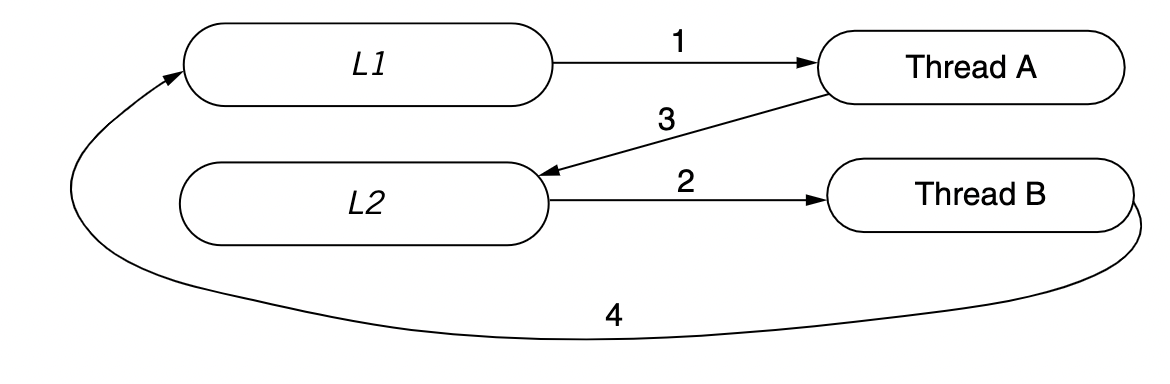

deadlock: new problem introduced due to locks

Rule: enumerate all lock usages and ensure that all threads of the program acquire the locks in the same order.

-

Livelock: an interaction among a group of threads in which each thread is repeatedly performing some operations but is never able to complete the whole sequence of operations.

lock machanisms¶

-

Single-acquire protocol : only one thread can acquire the lock

-

Multiple-reader, one-writer protocol protocol

Notice: all threads can still access the shared variables unless it is told not to. Instead of mechanically protecting the shared resources, locks serve as a flag to indicate if one thread holds the variable, the other thread should wait for it.

how to avoid those cases?

problem: how the protected shared variables will be used?

Bootstrapping: Reduce a general problem to some narrower particular version of the same problem. Then solve the narrow problem using special cases. Finally, construct the solution to the general problem which is a mthod for solving the special case and a mthod for reducing problem. (sounds like divide-and-conquer method for me)

In the case of ACQUIRE:

making multistep operations on shared variables before-or-after actions

-> making an operation on a single shared lock a before-or-after action

-> build a hw instruction that is a before-or-after action

arbiter: how arbiters can fail? 5.2.8

Enforce modularity in memory¶

Scenario: enforce mudularity on sharing memory which is assumed to have a large address space.

Invariant: a thread can access only its references.

Threads need more features:

- one thread needs more than one domains

- one thread cannot change to another domain by itself (modularity, it cannot access other thread’s references, aka data)

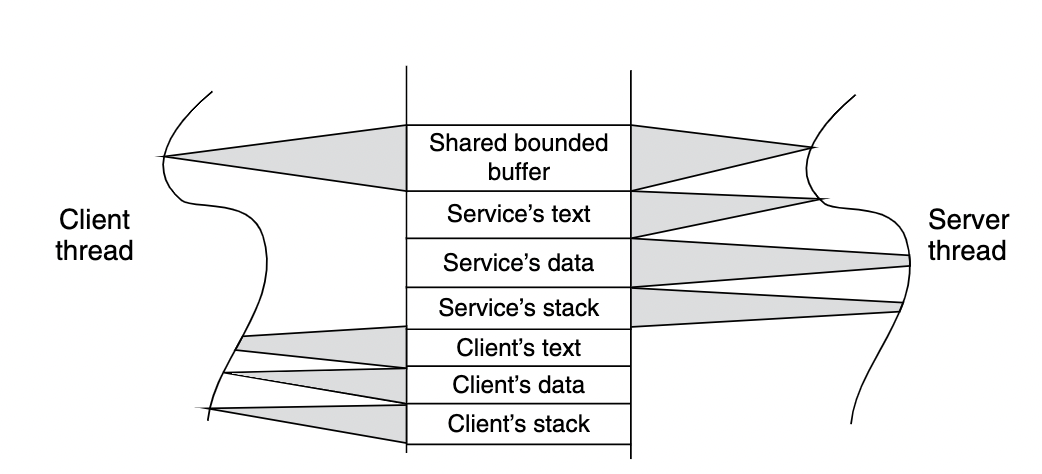

controlled sharing¶

-

cliend thread and server thread share the bounded buffer.

-

-

the trade-offs involved in implementing parts of the memory manager in software 5.4.4