Zoned Block Devices

As the homepage says, QEMU is a generic and open source machine emulator and virtualizer. The project I’ve been working on is adding zoned device support to virtio-blk emulation[1].

QEMU is a big project that looks a bit intimidating at first (and afterward :). It’s also lots of fun investigating different parts to solve problems along the way. Sometimes I feel like a lousy detective trying to figure out how things fit together. Luckily, my mentors on this project always give me a hand when I head off in the wrong direction.

Brief introduction

| Terminology | Abbr. | Concept |
|---|---|---|
| Shingled Magnetic Recording | SMR | writes data in zones of overlapping tracks |
| Perpendicular/Conventional Magnetic Recording | PMR/CMR | writes data in discrete tracks |
| write pointer | WP | points to the lowest LBA (Logical Block Address) in a zone that has not been written yet |
| sequential write required | SWR | Host Managed model (HM): write operations must be sequential |
| sequential write preferred | SWP | Host Aware model (HA): unconstrained writes are possible |
| open zone resource | OZR | the number of zones that can be open at the same time on a zoned block device is limited |

Zoned storage

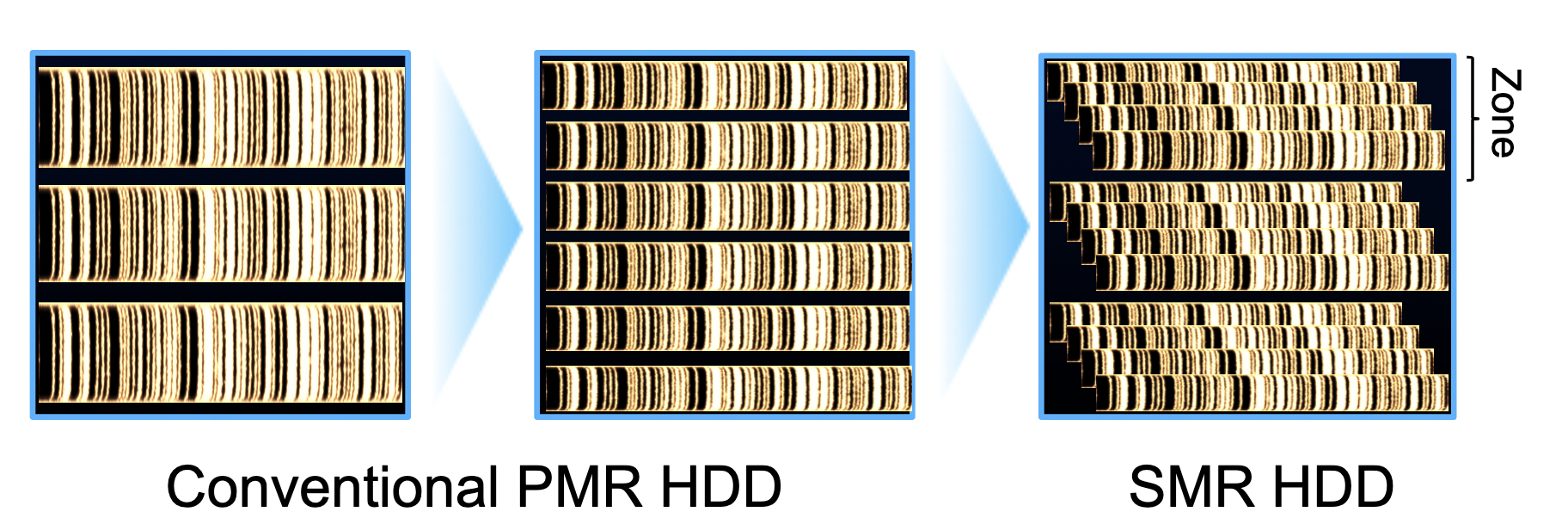

Picture the shingles on a roof: SMR writes data in a similar overlapping manner. By removing the gaps between tracks, more data tracks fit on each magnetic surface, even though the write head is wider than a single track. After a write, the recording head advances by only part of a track's width, so each new track partially overlaps the previous one. These overlapping tracks are grouped into zones, and the gaps between zones prevent a write in one zone from overwriting data in the next. (This is the ignoring-physics version.)

The SMR property we want to take advantage of in this project is SWR. For example, if LBAs x and x+a (with a > 1) are both in zone 1, we can’t write x+a right after writing x, because every write in a zone has to land at the write pointer; we can’t go back and rewrite x in place either, because it may now sit under an overlapping track. The higher areal density of SMR results in higher disk capacity, better erasure coding, and reduced exposure to adjacent-track interference. Meanwhile, SMR also supports random reads like CMR. Therefore SWR is good for data detection and recovery.
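To make the write pointer rule concrete, here is a minimal sketch of a single sequential-write-required zone. The `toy_zone` struct and its helper functions are hypothetical names made up for illustration; they are not QEMU or kernel code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of one sequential-write-required zone. */
struct toy_zone {
    uint64_t start; /* first LBA of the zone */
    uint64_t len;   /* number of LBAs in the zone */
    uint64_t wp;    /* write pointer: lowest LBA not yet written */
};

/* Accept a write only if it starts at the write pointer and fits in the zone. */
static bool toy_zone_write(struct toy_zone *z, uint64_t lba, uint64_t nr)
{
    if (lba != z->wp) {
        return false; /* skipping ahead or rewriting in place is rejected */
    }
    if (nr > z->start + z->len - z->wp) {
        return false; /* the write would cross the zone boundary */
    }
    z->wp += nr;
    return true;
}

/* Resetting the zone rewinds the write pointer so the zone can be rewritten. */
static void toy_zone_reset(struct toy_zone *z)
{
    z->wp = z->start;
}
```

With a zone starting at LBA 100, a write to LBA 100 succeeds, a following write to LBA 104 is rejected because it skips ahead of the write pointer, and a second write to LBA 100 is rejected until the zone is reset.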

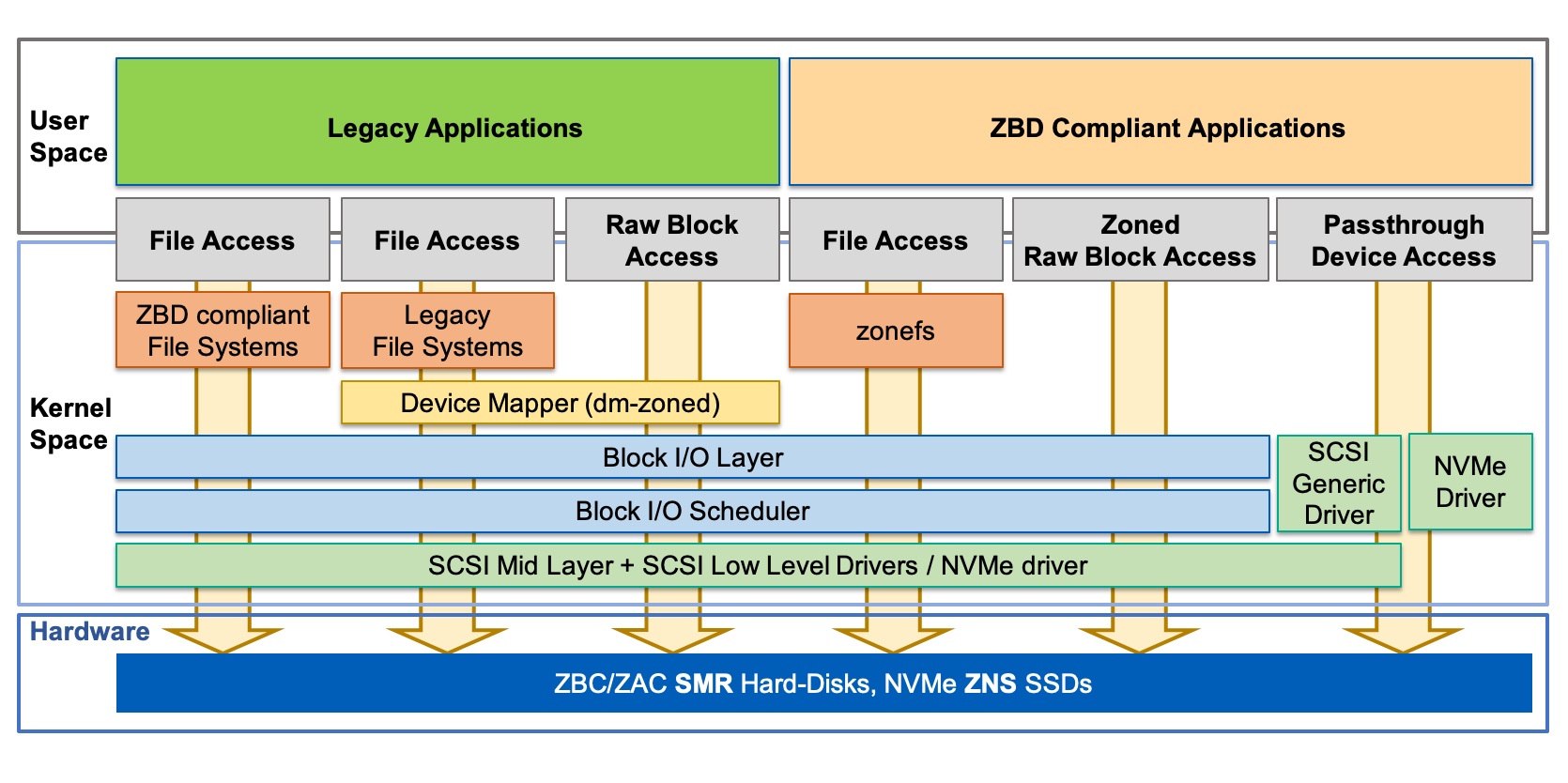

The main purpose of zoned storage emulation is to let the VM guest OS see a zoned block device that lives on the host OS. However, QEMU currently treats a zoned device as a regular block device. Meanwhile, I should enable guest driver testing with NVMe ZNS so we can check whether our previous work functions correctly and benchmark it, which would be a nice topic for another blog post (once I figure out how benchmarking works :)

The cool vision for this project is to enable zoned storage on a regular file. That means the VM guest OS could see a regular file as a zoned block device, which is helpful for developing zoned storage software on a machine without such hardware. I do have some doubts about the overhead and design of such a feature, though. For example, why should we emulate a zoned device if it may not offer enough performance to be useful? Again, that's a topic to save for later.

Virtio-blk device

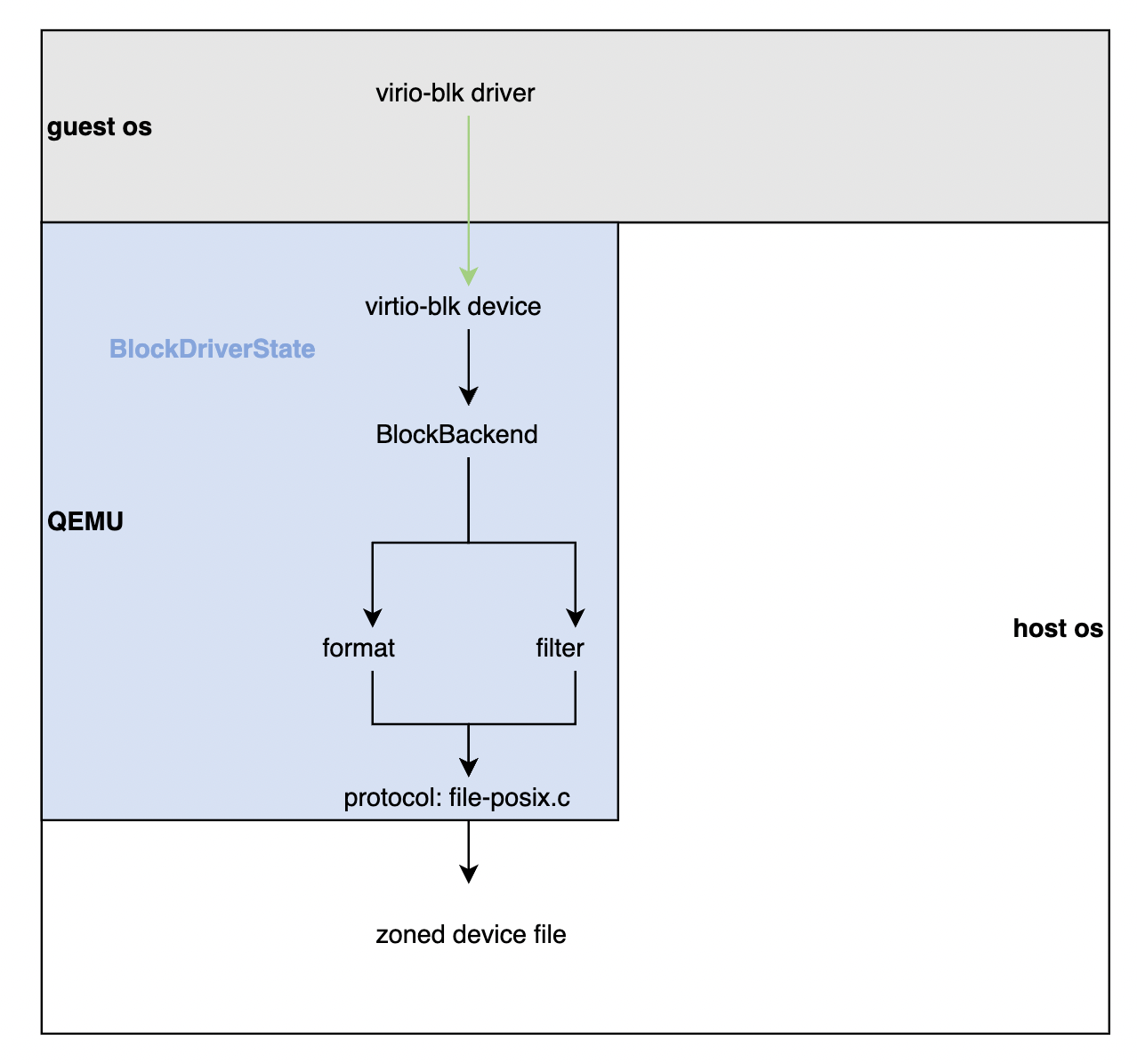

The virtio-blk device is a storage controller that presents the host’s block devices to the guest. The storage layers are structured for extensibility and performance. The block layer is organized as a BlockDriverState graph. BlockBackend provides an interface connecting block drivers to other parts of QEMU, such as qemu-io or the virtio-blk device. It has a root pointer that points to other nodes in the BlockDriverState graph. Format nodes and filter nodes sit above protocol nodes. This layering makes it easier to add a new BlockDriver inside such a big project.

The overview of the emulation part is: the guest OS sends a zoned command request, with its parameters, to its virtio-blk driver. The virtio-blk device then receives the request, which is basically a big chunk of memory, and transforms it into QEMU’s own structs so that the block layer can execute the corresponding command on the zoned block device. After that, we transform the results back into the guest’s struct and send it back.
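The "big chunk of memory" step can be sketched as decoding a raw guest buffer into a host-side struct. Everything here is an assumption for illustration: the `toy_zone_req` struct, the helper names, and the 16-byte little-endian layout (type, reserved word, sector) are made up and are not QEMU's actual definitions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical host-side view of a zone request; not QEMU's real struct. */
struct toy_zone_req {
    uint32_t type;   /* which zone command the guest asked for */
    uint64_t sector; /* first sector the command applies to */
};

/* Read little-endian integers byte by byte, so this works on any host. */
static uint32_t toy_le32(const uint8_t *p)
{
    return (uint32_t)p[0] | (uint32_t)p[1] << 8 |
           (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
}

static uint64_t toy_le64(const uint8_t *p)
{
    return (uint64_t)toy_le32(p) | (uint64_t)toy_le32(p + 4) << 32;
}

/*
 * Decode an assumed 16-byte guest header: le32 type, le32 reserved,
 * le64 sector. Returns 0 on success, -1 if the buffer is too short.
 */
static int toy_decode_req(const uint8_t *buf, size_t len,
                          struct toy_zone_req *out)
{
    if (len < 16) {
        return -1;
    }
    out->type = toy_le32(buf);
    out->sector = toy_le64(buf + 8);
    return 0;
}
```

Decoding field by field, rather than casting the buffer to a struct pointer, avoids depending on host endianness and alignment, which matters because guest memory is untrusted input.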

I haven’t thought through the performance overhead that the virtio-blk device brings in, though. Roughly speaking, zoned block devices can offer better performance than regular ones, especially above HDD level.

Zone state machine & APIs

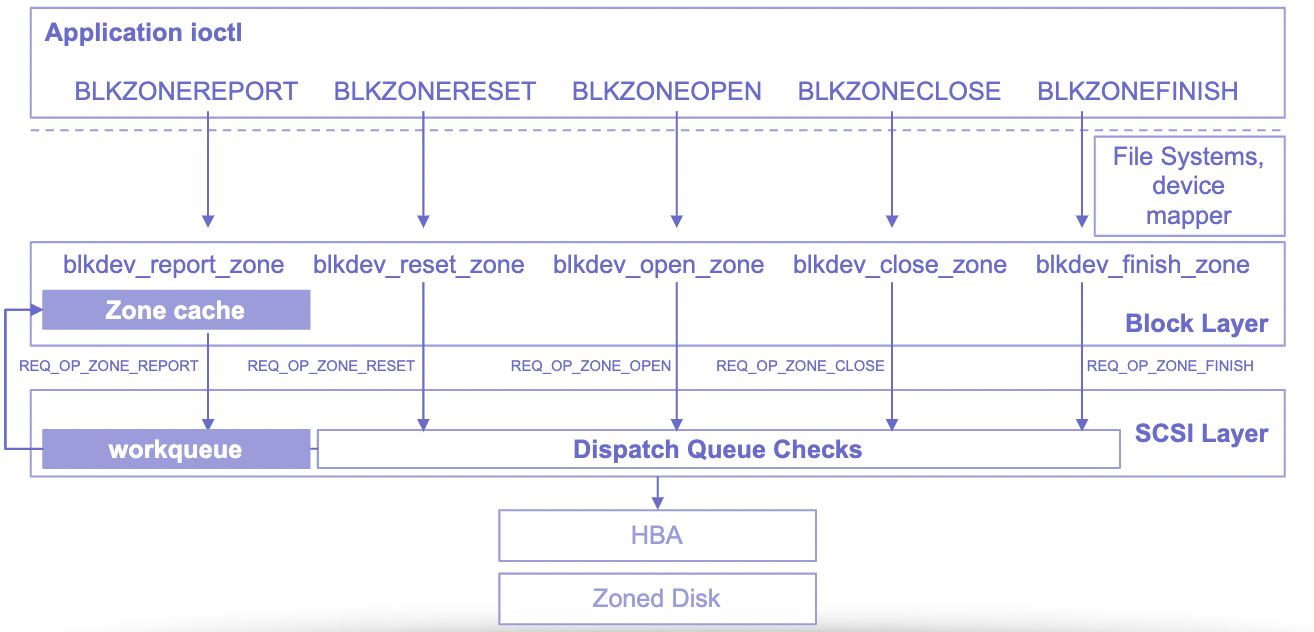

The zone state machine tracks the open zone resources of a zoned block device. On an HDD, a zone can only enter an open state when sufficient open zone resources are available. Zone operations change the state of zones in the write pointer zone models.
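As a rough sketch of how the open zone limit interacts with zone states, the following toy model counts open zones against a device-wide limit. The names are hypothetical, and the model is deliberately simplified: it leaves out the read-only and offline states, never opens a zone implicitly on write, and always moves a closed zone to the closed state even if nothing was written to it.

```c
#include <assert.h>
#include <stdbool.h>

enum toy_zone_state {
    TOY_EMPTY, TOY_IMP_OPEN, TOY_EXP_OPEN, TOY_CLOSED, TOY_FULL
};

/* Hypothetical device-wide accounting of the open zone resource. */
struct toy_dev {
    unsigned max_open; /* how many zones may be open at once */
    unsigned nr_open;  /* how many zones are open right now */
};

static bool toy_is_open(enum toy_zone_state s)
{
    return s == TOY_IMP_OPEN || s == TOY_EXP_OPEN;
}

/* Explicit open: needs a free open zone resource unless already open. */
static bool toy_open(struct toy_dev *d, enum toy_zone_state *s)
{
    if (*s == TOY_FULL) {
        return false;
    }
    if (!toy_is_open(*s)) {
        if (d->nr_open >= d->max_open) {
            return false; /* open zone resources exhausted */
        }
        d->nr_open++;
    }
    *s = TOY_EXP_OPEN;
    return true;
}

/* Close: only meaningful for open zones here; releases the resource. */
static bool toy_close(struct toy_dev *d, enum toy_zone_state *s)
{
    if (!toy_is_open(*s)) {
        return false;
    }
    d->nr_open--;
    *s = TOY_CLOSED;
    return true;
}

/* Finish moves the zone to full; reset moves it back to empty. */
static void toy_finish(struct toy_dev *d, enum toy_zone_state *s)
{
    if (toy_is_open(*s)) {
        d->nr_open--;
    }
    *s = TOY_FULL;
}

static void toy_reset(struct toy_dev *d, enum toy_zone_state *s)
{
    if (toy_is_open(*s)) {
        d->nr_open--;
    }
    *s = TOY_EMPTY;
}
```

With `max_open = 1`, opening a second zone fails until the first one is closed, finished, or reset, which is the resource constraint the state machine has to track.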

The basic structs for handling zoned block devices are zone_descriptors, zone_dev, and zone_report_struct. Beyond the five operations that ioctl provides, we would like to add zone append write to the emulation.
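For reference, on a Linux host the zone ioctls live in `<linux/blkzoned.h>`; presumably the five operations meant here are report, reset, open, close, and finish (`BLKREPORTZONE`, `BLKRESETZONE`, `BLKOPENZONE`, `BLKCLOSEZONE`, `BLKFINISHZONE`). Below is a minimal sketch of the report operation, assuming a Linux host with kernel headers installed. The helper name `toy_report_one_zone` is hypothetical, and this is not how QEMU's block layer is actually wired up.

```c
#include <assert.h>
#include <linux/blkzoned.h>
#include <stdlib.h>
#include <sys/ioctl.h>

/*
 * Ask the kernel for one zone descriptor, starting the report at `sector`.
 * `fd` is expected to be an open zoned block device.
 * Returns 0 on success, -1 if the ioctl fails or no zone is reported.
 */
static int toy_report_one_zone(int fd, __u64 sector, struct blk_zone *out)
{
    struct blk_zone_report *rep;
    int ret = -1;

    /* The report header is followed by room for the zone descriptors. */
    rep = calloc(1, sizeof(*rep) + sizeof(struct blk_zone));
    if (!rep) {
        return -1;
    }
    rep->sector = sector;
    rep->nr_zones = 1; /* in: array capacity; out: zones actually reported */

    if (ioctl(fd, BLKREPORTZONE, rep) == 0 && rep->nr_zones >= 1) {
        *out = rep->zones[0];
        ret = 0;
    }
    free(rep);
    return ret;
}
```

On success, `out->start`, `out->len`, `out->wp`, `out->type`, and `out->cond` describe the zone's position, size, write pointer, type, and condition, all in 512-byte sectors where applicable.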

[3]

The picture is from Damien Le Moal’s website. You can find more about zoned storage at https://zonedstorage.io/.

It is from a very old 2016 presentation, ZBC/ZAC support in Linux, from when the initial SMR support for the kernel was still being developed. The first support release in 2017 (kernel 4.10) ended up being quite different from what this picture shows. E.g., there is no “zone cache” and no “workqueue” for report zones in the kernel. (Annotations from Damien.)