IO_uring's API and Implementation in QEMU

QEMU structures

| Terminology | Concept |
|---|---|
| Protocols | file system, block device, NBD, Ceph, gluster |
| virtio | behave like a memory-mapped register bank, different register layout |
| Network cards | e1000 card on the PCI bus |
| Host formats | raw, qcow2, qed, vhdx |

- What? QEMU is a machine emulator and virtualizer: it emulates hardware in software so that several guest operating systems can run on a single host machine.

- Why? The general purpose of a virtual machine is to make the guest OS believe it is running on real hardware. To the host, a guest is essentially one big chunk of memory, and QEMU is designed to manage that memory so that emulation performs well.

- How?

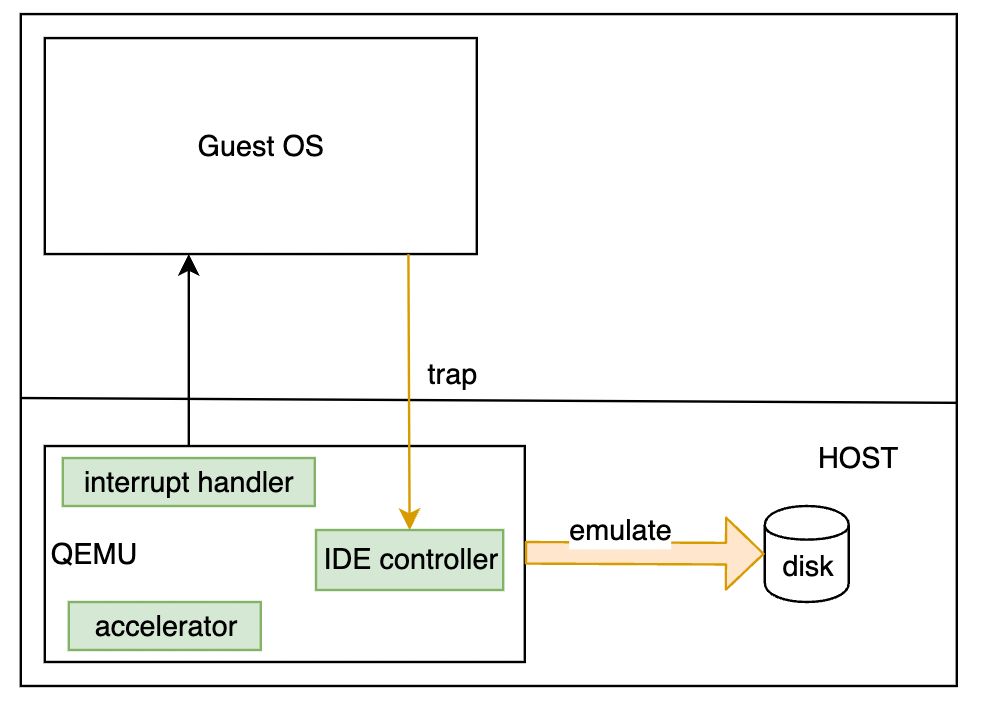

- “Accelerator”: Trap and emulate

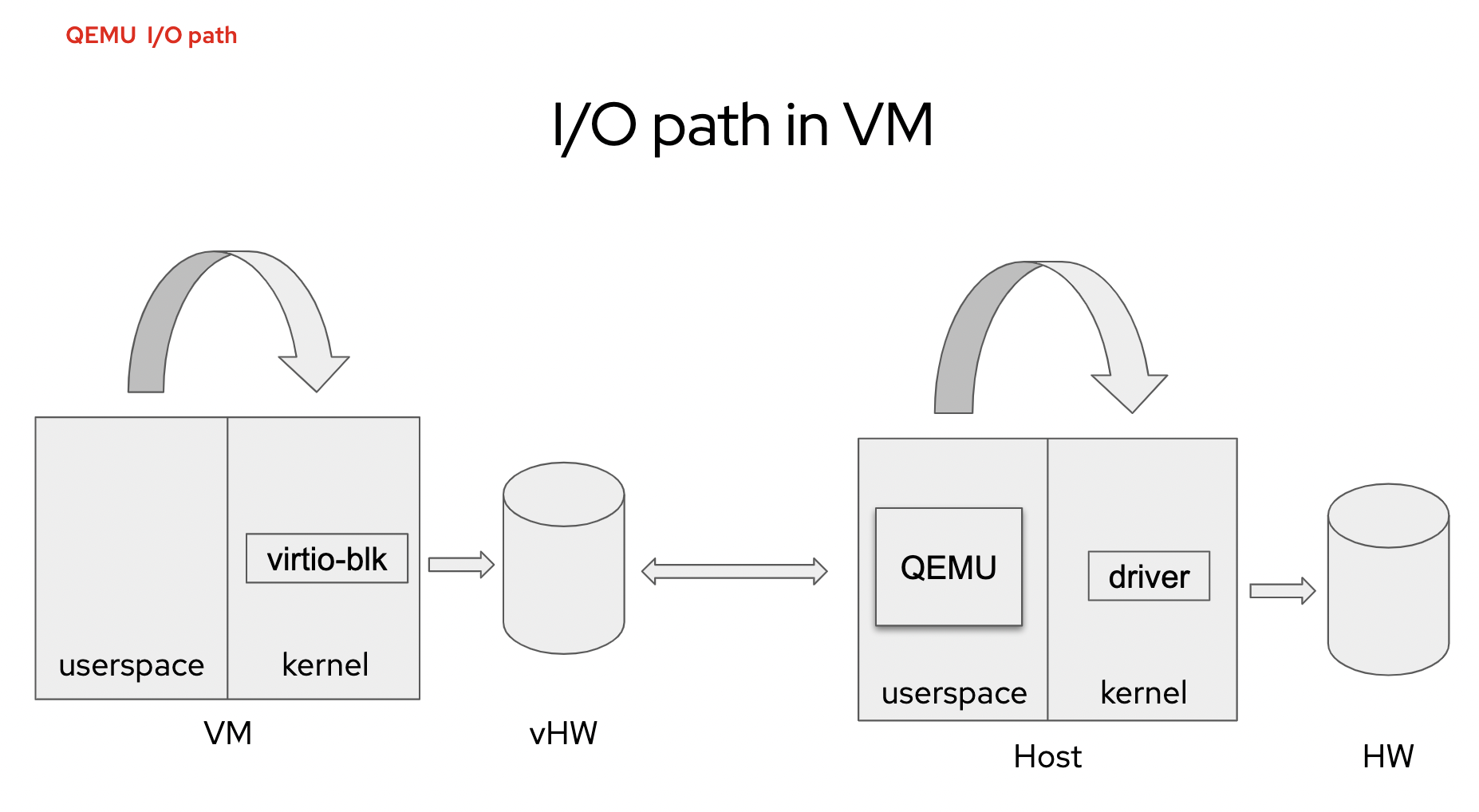

- “Paravirtualization”: a tradeoff between virtualization in the OS and emulation in devices, which leads to virtio

I/O path in a VM[1]: from the host's view, a QEMU guest looks like additional RAM (a big chunk of memory and that’s it).

Brief introduction

Terminology in QEMU’s event loop:

| Event sources | Abbr. | Usage |
|---|---|---|
| Bottom-halves | BHs | Invoke a function in another thread, or defer a function call to avoid reentrancy |
| Asynchronous I/O context | aio_ctx | Event loop |

- io_uring is a Linux kernel interface for asynchronous I/O, built on ring buffers (a submission queue and a completion queue) shared between user space and the kernel.

  - Current integration into QEMU: use epoll to check for completions; use io_uring_enter() for submission; check completion on IRQ
  - Improvement: want to use fd registration
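The ring-buffer idea behind io_uring can be sketched in plain C. This is only a toy, single-threaded illustration of head/tail indexing with a power-of-two mask; the names `toy_ring`, `toy_ring_push`, and `toy_ring_pop` are hypothetical, and the real rings live in memory shared with the kernel and need memory barriers that this sketch leaves out.

```c
#include <stdbool.h>
#include <stdint.h>

#define RING_ENTRIES 8u                 /* must be a power of two */
#define RING_MASK (RING_ENTRIES - 1u)

/* Hypothetical toy ring, not the io_uring ABI. */
struct toy_ring {
    uint32_t head;                      /* next slot the consumer reads */
    uint32_t tail;                      /* next slot the producer writes */
    uint64_t entries[RING_ENTRIES];
};

/* Producer side: queue one entry; returns false when the ring is full. */
static bool toy_ring_push(struct toy_ring *r, uint64_t value)
{
    if (r->tail - r->head == RING_ENTRIES) {
        return false;
    }
    r->entries[r->tail & RING_MASK] = value;
    r->tail++;
    return true;
}

/* Consumer side: take the oldest entry; returns false when the ring is empty. */
static bool toy_ring_pop(struct toy_ring *r, uint64_t *value)
{
    if (r->head == r->tail) {
        return false;
    }
    *value = r->entries[r->head & RING_MASK];
    r->head++;
    return true;
}
```

The indices only ever grow and are masked on access, so "full" and "empty" can be told apart without wasting a slot.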

- There are two ways to avoid the problems of blocking mode (a blocked process is suspended): nonblocking mode, and I/O multiplexing syscalls (often loosely called async I/O).

  I/O multiplexing syscalls include select, poll, and epoll:

  - Scenario: a server wants to know whether new input has arrived. Instead of checking every state itself, the program asks the Linux kernel which files changed, i.e. it monitors file descriptors.
  - poll: choose specific fds to monitor. select: choose a range of fds to monitor.
  - poll and select make the kernel check every monitored fd for availability on each call, which takes a lot of time. epoll (not usable on regular files) avoids the problem with three steps: create, control[2], wait.
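The create/control/wait sequence of epoll can be sketched as follows. This is a minimal, Linux-only example; the helper name `wait_readable` is hypothetical, and a real event loop would create the epoll instance once and reuse it instead of creating one per call.

```c
#include <sys/epoll.h>
#include <unistd.h>

/* Hypothetical helper: wait up to timeout_ms for fd to become readable.
 * Returns 1 if readable, 0 on timeout, -1 on error. */
static int wait_readable(int fd, int timeout_ms)
{
    int epfd = epoll_create1(0);                          /* create */
    if (epfd < 0) {
        return -1;
    }

    struct epoll_event ev = { .events = EPOLLIN, .data.fd = fd };
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) < 0) {    /* control */
        close(epfd);
        return -1;
    }

    struct epoll_event ready;
    int n = epoll_wait(epfd, &ready, 1, timeout_ms);      /* wait */
    close(epfd);
    if (n < 0) {
        return -1;
    }
    return (n > 0 && (ready.events & EPOLLIN)) ? 1 : 0;
}
```

Calling it on the read end of an empty pipe with a zero timeout returns 0; after a byte is written to the other end it returns 1.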

- Coroutines in QEMU: they let multi-step asynchronous logic be written sequentially instead of being split across callbacks[3], which keeps the order of the steps explicit.

- Rules of scheduling:

- Explicit: other cpu sees coroutines.

- Only one coroutine executes at a time, and a running coroutine cannot be interrupted

    # using callbacks: the list can go on and on
    start():
        send("ask", step1)
    step1():
        read(step2)
    step2():
        send("hi, %s", name, step3)
    step3():
        done

    # using coroutines
    coroutine_say_hi():
        co_send("ask")
        name = co_read()
        co_send("hi, %s", name)
        # done here

- API[4]

Struct: coroutine

Transfer of control: enter, yield

    typedef void coroutine_fn CoroutineEntry(void *opaque);
    Coroutine *qemu_coroutine_create(CoroutineEntry *entry, void *opaque);
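To make the enter/yield transfer of control concrete, here is a minimal sketch built on the glibc `ucontext` functions. It is not QEMU's coroutine implementation: `ToyCoroutine`, `toy_coroutine_init`, `toy_coroutine_enter`, `toy_coroutine_yield`, and `toy_count_entry` are hypothetical names, the stack size is an arbitrary assumption, and the sketch assumes a Linux/glibc system where `makecontext` and `swapcontext` are available.

```c
#include <stddef.h>
#include <ucontext.h>

#define TOY_STACK_SIZE (64 * 1024)      /* assumed stack size for the sketch */

/* Hypothetical toy type; QEMU's real Coroutine struct is different. */
typedef struct ToyCoroutine {
    ucontext_t caller;                  /* where to return on yield or exit */
    ucontext_t self;                    /* the coroutine's own context */
    void (*entry)(void *opaque);
    void *opaque;
    int finished;
    char stack[TOY_STACK_SIZE];
} ToyCoroutine;

static ToyCoroutine *toy_current;       /* coroutine that is running now */

/* First function run on the coroutine stack. */
static void toy_trampoline(void)
{
    ToyCoroutine *co = toy_current;
    co->entry(co->opaque);
    co->finished = 1;
    /* Returning here resumes uc_link, i.e. the caller's context. */
}

static void toy_coroutine_init(ToyCoroutine *co, void (*entry)(void *),
                               void *opaque)
{
    co->entry = entry;
    co->opaque = opaque;
    co->finished = 0;
    getcontext(&co->self);
    co->self.uc_stack.ss_sp = co->stack;
    co->self.uc_stack.ss_size = sizeof(co->stack);
    co->self.uc_link = &co->caller;
    makecontext(&co->self, toy_trampoline, 0);
}

/* Enter: switch from the caller into the coroutine until it yields or ends. */
static void toy_coroutine_enter(ToyCoroutine *co)
{
    ToyCoroutine *prev = toy_current;
    toy_current = co;
    swapcontext(&co->caller, &co->self);
    toy_current = prev;
}

/* Yield: switch from the running coroutine back to whoever entered it. */
static void toy_coroutine_yield(void)
{
    ToyCoroutine *co = toy_current;
    swapcontext(&co->self, &co->caller);
}

/* Example entry: adds 1, yields, then adds 10 when entered again. */
static void toy_count_entry(void *opaque)
{
    int *counter = opaque;
    *counter += 1;
    toy_coroutine_yield();
    *counter += 10;
}
```

Entering a coroutine created with `toy_count_entry` once leaves the counter at 1; entering it a second time resumes after the yield and finishes with the counter at 11. The sequential body of `toy_count_entry` is the point: the two steps read top to bottom even though control leaves the function in between.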

- Event loop in QEMU

  Two structs matter for io_uring: AioContext and BH (QEMUBH).

  Common APIs[5] in the event loop are: aio_set_fd_handler(), aio_set_event_notifier(), aio_timer_init(), aio_bh_new()

    struct QEMUBH {
        AioContext *ctx;
        const char *name;
        QEMUBHFunc *cb;
        void *opaque;
        QSLIST_ENTRY(QEMUBH) next; // points to the next element
        unsigned flags;
    };

    struct AioContext {
        BHList bh_list;
        QSIMPLEQ_HEAD(, BHListSlice) bh_slice_list;
        ...
        QSLIST_HEAD(, Coroutine) scheduled_coroutines;
        QEMUBH *co_schedule_bh;
        struct ThreadPool *thread_pool;
        ...
    };
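The way a bottom half defers a function call until the event loop gets around to it can be illustrated with a small stand-alone sketch. Everything here is a simplified assumption: `ToyBH`, `ToyContext`, `toy_bh_init`, `toy_bh_schedule`, `toy_bh_poll`, and `toy_increment` are hypothetical names, the list is a plain singly linked list instead of QEMU's QSLIST macros, and the code is single-threaded, whereas the real implementation has to cope with scheduling from other threads.

```c
#include <stdbool.h>
#include <stddef.h>

typedef void ToyBHFunc(void *opaque);

/* Hypothetical, simplified stand-in for a bottom half. */
typedef struct ToyBH {
    ToyBHFunc *cb;
    void *opaque;
    bool scheduled;
    struct ToyBH *next;                 /* points to the next element */
} ToyBH;

/* Hypothetical, simplified stand-in for the event loop context. */
typedef struct ToyContext {
    ToyBH *bh_list;
} ToyContext;

/* Register a bottom half with the context; it does not run yet. */
static void toy_bh_init(ToyContext *ctx, ToyBH *bh, ToyBHFunc *cb,
                        void *opaque)
{
    bh->cb = cb;
    bh->opaque = opaque;
    bh->scheduled = false;
    bh->next = ctx->bh_list;
    ctx->bh_list = bh;
}

/* Mark the bottom half as pending; the callback is deferred, not called. */
static void toy_bh_schedule(ToyBH *bh)
{
    bh->scheduled = true;
}

/* One event loop iteration: run each pending bottom half once.
 * Returns the number of callbacks that ran. */
static int toy_bh_poll(ToyContext *ctx)
{
    int ran = 0;
    for (ToyBH *bh = ctx->bh_list; bh != NULL; bh = bh->next) {
        if (bh->scheduled) {
            bh->scheduled = false;      /* clear first so cb may reschedule */
            bh->cb(bh->opaque);
            ran++;
        }
    }
    return ran;
}

/* Example callback: increments the int that opaque points to. */
static void toy_increment(void *opaque)
{
    ++*(int *)opaque;
}
```

Scheduling only sets a flag, so the caller never re-enters the callback directly; the callback runs later, from the loop, which is the reentrancy-avoidance role the table above assigns to bottom halves.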

API

Implementation

A callback function is reentrant code, meaning it can be called again while an earlier call to it is still underway. For example, recv(pkt, via_ron) is called whenever a packet arrives for the client program.