Intro to xv6

Design points¶

- Few mechanisms provide enough generality: xv6 provides a Unix-like interface which contains the basic concepts to resolve the tradeoff between supporting many devices and using a simple interface.

- What is one syscall in xv6? Xv6 has one

kernelruns programs (process), process in the user space calls (syscall) kernel to provide services.- Each process has memory which cantains instructions, data and stack. Stacks manages procedure calls.

- Kernel provides services as process, memory, fd, pipes, fs.

- Shell is a command-line user interface. It’s a user program.

- Chap1: xv6 provides simplicity and generality for Unix-like os flavour but it does not follow POSIX standard and follow users notion. All xv6 processes run as root. The functionalities it misses are user-level threads, … (Intended to develop this)

- Chap2:

- xv6 runs entire os in kernel. The concept is monolithic though it provides less kernel services than some microkernel.

- xv6’s process has one thread. Modern os supports several threads within a process

- Chap3: 3-level page table to save memory when most va is not mapped. The idea is to allocate pages on demand.

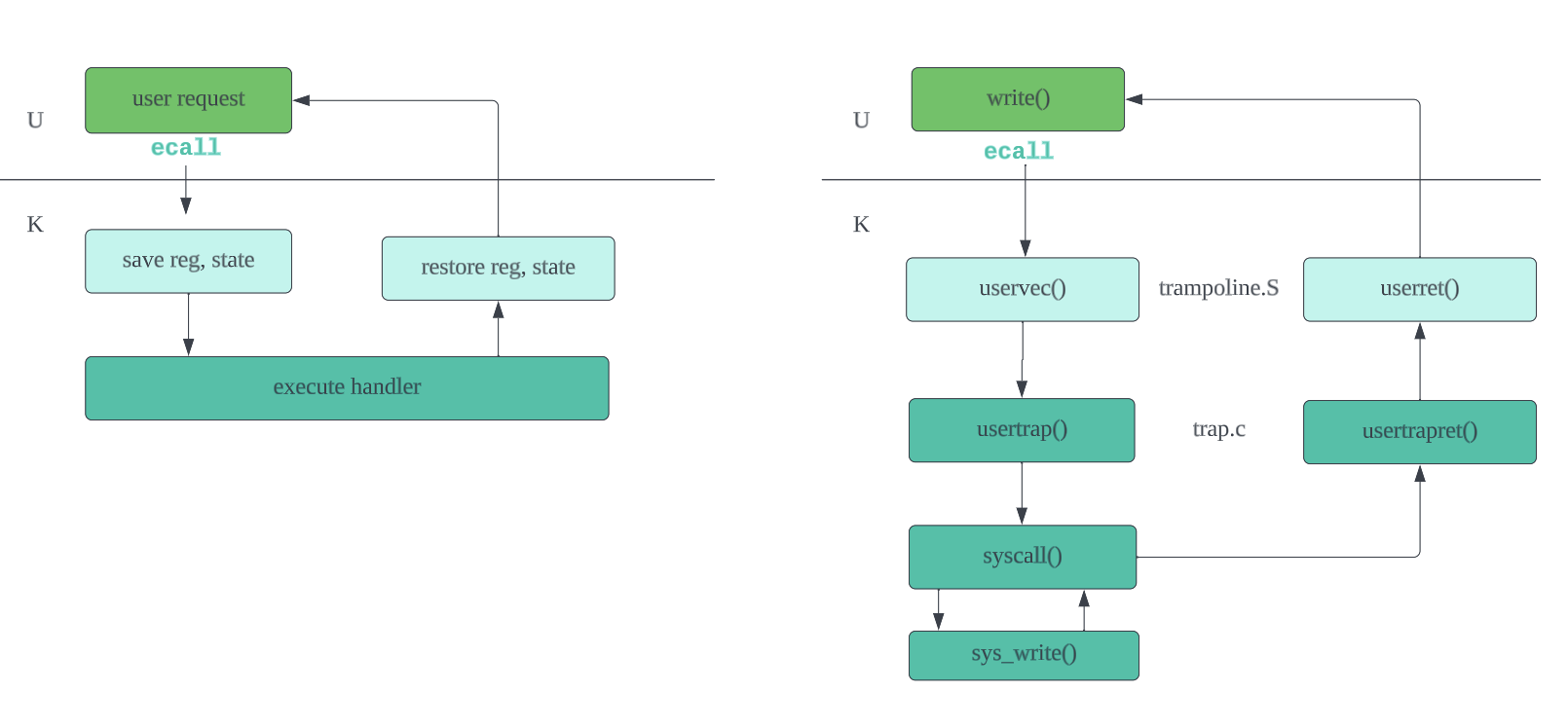

- Chap4: Xv6 kernel handles all traps. It has separate io path for three cases for convenience (

for commonality only single path): traps from user space, traps from kernel space, timer interrupts.- k/u isolation security: Xv6 simplifies the CPU h/w’s trap handling sequence by making kernel s/w switch to kpgtbl, kstack and save regs except pc.

- Chap5: device and timer interrupts while executing in the kernel and user programs.

- Chap7: the scheduling policy is running processes in turn as is

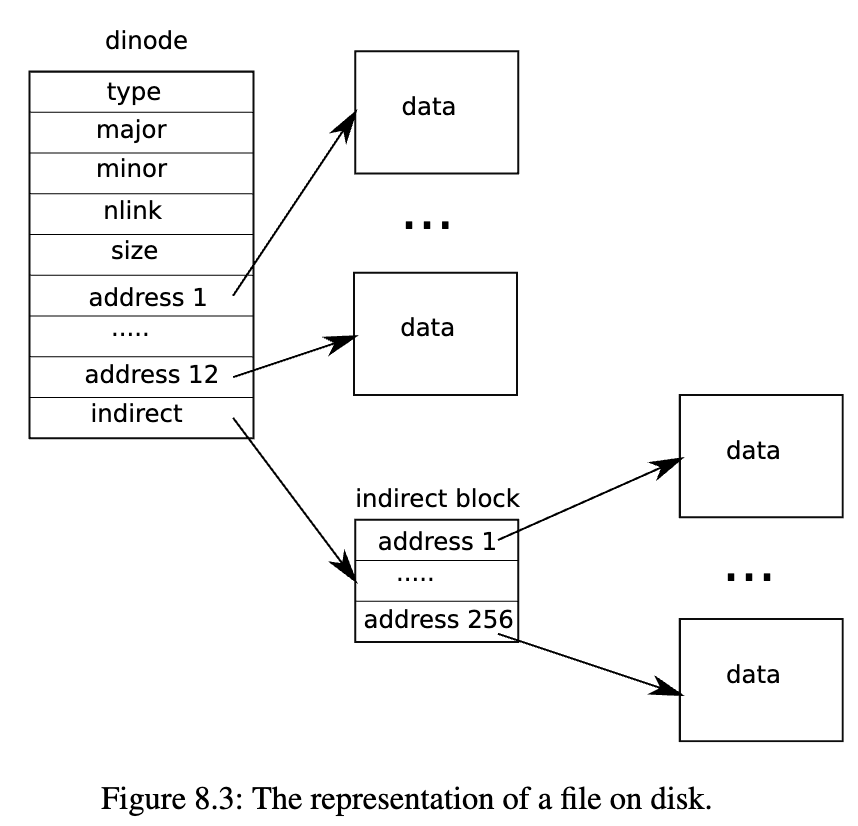

round robin - Chap8: xv6 provides Unix-like fs and stores data on a virtio disk for persistence.

Interfaces¶

Process¶

- Xv6 time-shares processes by switching the available CPU between the process waiting to execute.

- Xv6 allocates memory for each process implicitly:

forkallocates the enough memory for its child process (copy of the parent’s memory),execallocates the memory for the executable file- run-time memory is changed by

sbrk(n), which grows the data memory of process by n.

- Xv6 manages processes:

- create:

fork - close:

exit,wait(wait for the child’s process) - replace the process memory of calling process with new file img:

exec

- create:

File descriptor and I/O¶

-

File descriptors abstract I/O objects like device, file, pipes.

-

Every fd has its own offset. Fds share the offset of the file if the fd is crated by

duporfork. That’s why I/O direction in the shell is easy to implement.-

forkcopies parent’s fd table as well whileexecpreserves the fd table of calling process. So exec can change the original fd. -

dup:ls existing-file non-existing-file > tmp1 2>&1, &1 means dup(1) which shares err files with output file tmp1, therefore tmp1 would have correct and wrong ones.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24// cat < input.txt

// Read from stdin

char *argv[2];

argv[0] = 'cat';

argv[1] = 0;

if (fork() == 0) {

close(0);

open("input.txt", O_RDONLY);

exec("cat", argv);

}

// (echo hello; echo world) >output.txt

// fork version:

if (fork() == 0) {

// write 1 hello;

exit(0);

} else {

wait(0);

// write 1 world

}

// dup version:

fd = dup(1);

write(1, );

write(fd, ); -

-

Processes have 3 private fds, 0 for stdin, 1 for stdout, 2 for stderr.

read,write: returns 0 if data r/w completes, > 0 if in the process, < 0 if err occurspipe: A pipe is a kernel buffer. It has a pair of fds, one for read and one for write.They share the data read from or written to.

Pipe¶

-

The read on the pipe blocks until it’s improssible to receive new data.

If stdin is associated with either end of pipe, then exec will wait if there is the new data waiting to r/w. So the pipe ends before write/read are alway closed in this chunk.

- Pipe[0] read, pipe[1] write.

1

2

3

4

5

6

7

8

9

10

11

12// wc < "hello world"

if(fork() == 0) {

close(0);

dup(p[0]); // p[0] donates its data to file descriptor 0

close(p[0]); // p[0] no longer needed in child since stdin is a copy

close(p[1]);

exec("/bin/wc", argv);

} else {

close(p[0]);

write(p[1], "hello world\n", 12);

close(p[1]);

} -

Process tree:

grep fork sh.c | wc -lThe child process creates a pipe to connect the left end of the pipe with the right end. Fork + runcmd will then run the cmd of the left end and the right end. The right end of the pipeline can also be a pipe. Therefore a processes tree generates.

echo hi | wcsleep 10 | echo hi

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16case PIPE:

pcmd = (struct pipecmd*)cmd;

if(pipe(p) < 0)

panic("pipe");

if(fork1() == 0){

... //p[1]

runcmd(pcmd->left);

}

if(fork1() == 0){

... //p[0]

runcmd(pcmd->right);

}

... // close pipe ends

wait(0);

wait(0);

break; -

Pipe vs temp files: in memory vs in disk

echo hello world | wcecho hello world >/tmp/xyz; wc </tmp/xyz

Fs¶

-

Fs -> data files -> File/Dir -> filename + inode -> type +length + location + Links -> (filename, reference/to/inode)

-

Find file: path/to/file,

/is the root path; relative path. -

Create file/device file:

mkdir,mknod. Mknod associates kernel device with the major and the minor device numbers. The kernel diverts syscalls to the device implementation instead of fs. -

A file has an underlying file called inode. It cantains metadata.

fstat

-

Fs utilities are user-level programs. The command line interfaces can add the same user-level programs to provide such utilities. However,

cdis built into the shell because the forked child process cannot change the memory of the parent process.

Organization¶

- Os MUST provides time-sharing resources and applicable isolation(interact when required) among processes.

- Fancy terms:

multiplexing, isolation, interaction

- Fancy terms:

- Let’s count what does xv6 have:

- 1 monolithic kernel

- many processes (the unit of isolation)

- runs on a multi-core RISC-V microprocessor: 64-bit, “LP64” C

- hardwares: RAM, ROM, screen, disk (simulated by qemu -machine virt)

- Design points:

- Abstract physical resources: Library Vs Outside manager

- Library: Applications access devices like a library. App decides when to leave the process.

- Manager: Os manages who enters/leaves the process and when.

- Provide strong isolation: if one process is down, others should not be corrupted by it.

- hw support of CPU when executes instructions: RISC-V provides three modes:

machine, supervisor, user. - privileged instruction - kernel space, user-mode instruction - user space

- A user app invoking kernel service must transit to the kernel space first: RISC-V

ecall. It switches CPU u -> k and enters k at an entry point controlled by k

- hw support of CPU when executes instructions: RISC-V provides three modes:

- What part of os runs in supervisor mode:

- Term:

monolithic kernel: all. Good to coperate/share and bad side is unsafe. - Term:

microkernel: part. Good side is safe and bad to coperate/share. - Term: Os running processes as service is called

server

- Term:

- Abstract physical resources: Library Vs Outside manager

- Process provides strong isolation

- mechanisms implementing process: user/super mode flag, address spaces, time-slicing of threads

- hw support: Xv6 manages process memory via page tables (RISC-V support).

- Process internals:

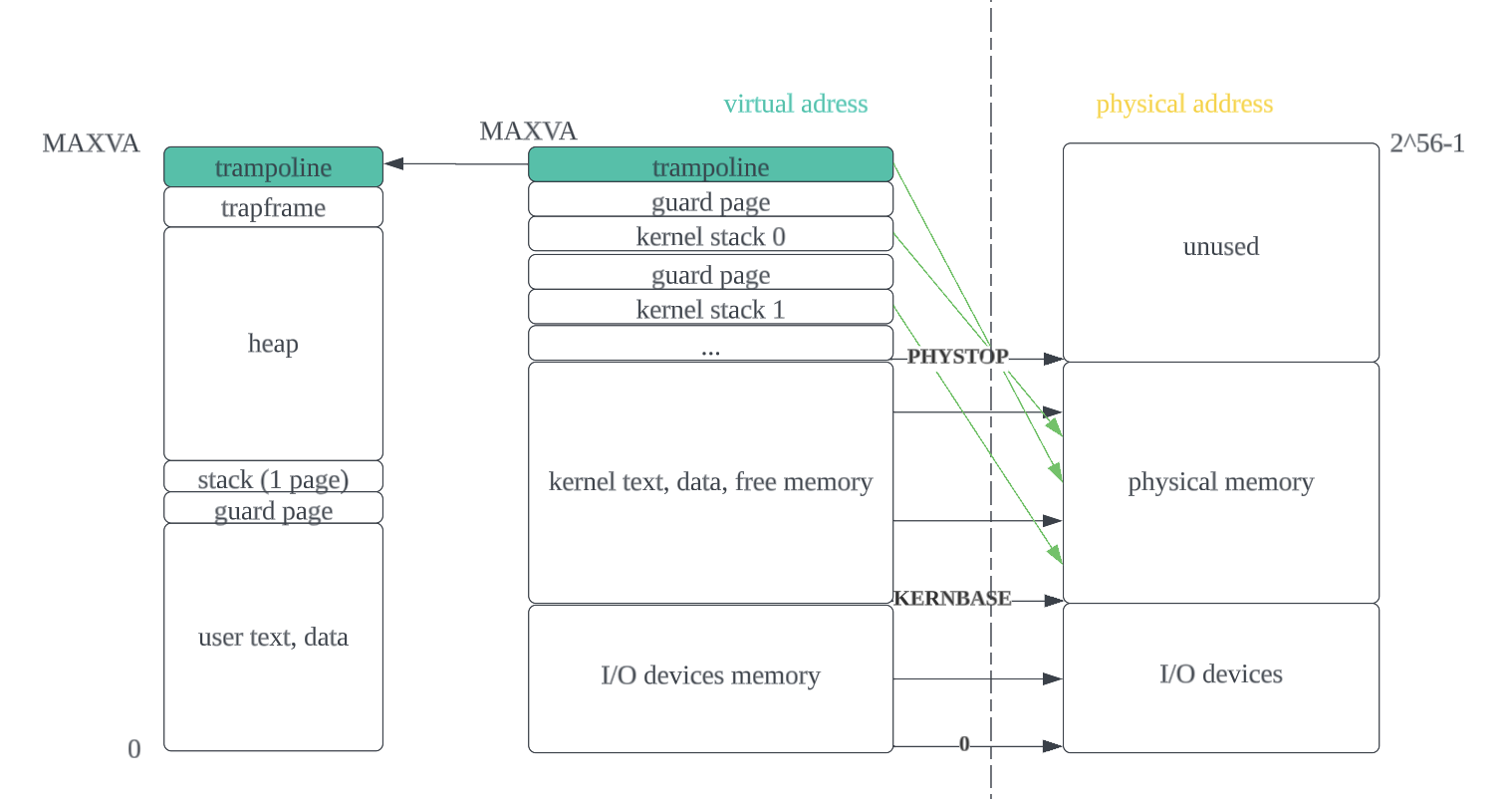

- Process has private memory system/address space. An address space contains user memory starting at va 0. Xv6 uses 38 bits of 39 bits. Max address is 2^38 - 1.

| user text and data | user stack | heap| trapframe|trampoline|

0 ------------------------------------------------------------> MAXVA - Process has kernel states and user states. Kernel states are its pgtbl, kernel stack, run state.

- A process has a thread of execution that executes the process’s instructions. Thread state (local variables, function call,return address) is stored on the thread’s stacks.

- A process has two stacks: u stack, k stack.

- A process makes sys call by

ecall, exit k space bysret.

- Process has private memory system/address space. An address space contains user memory starting at va 0. Xv6 uses 38 bits of 39 bits. Max address is 2^38 - 1.

- I/O path

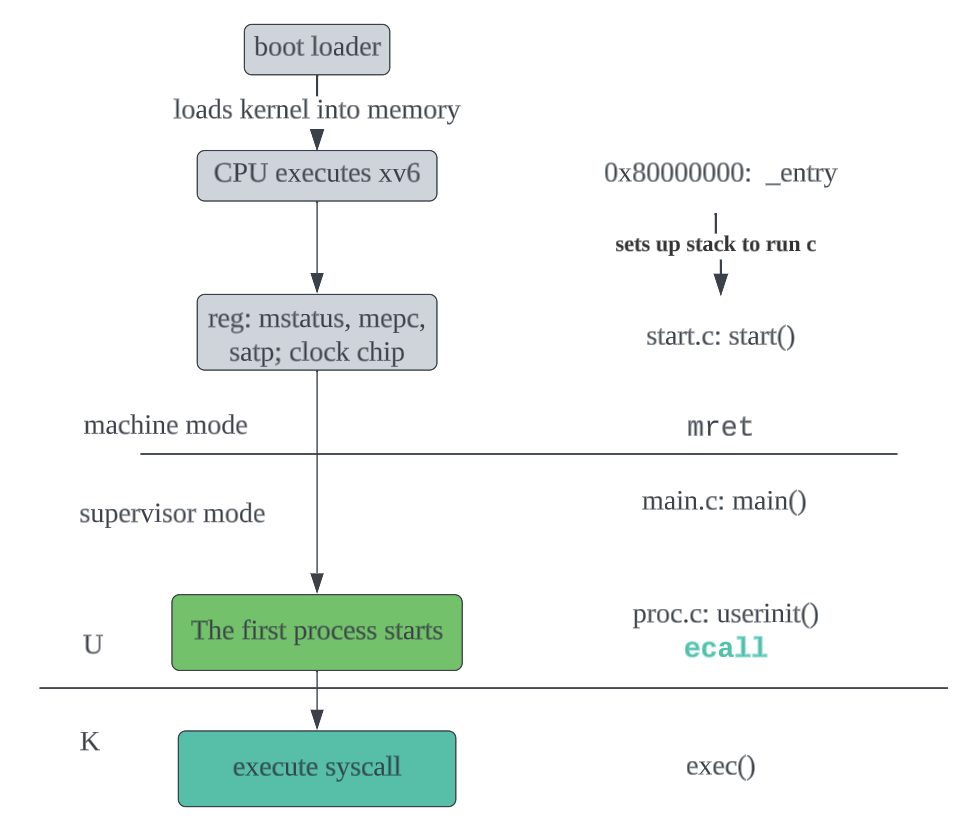

- Initialization: console, vm stuff (page table,process table, trap vector, interrupt handler, device interrupts), fs stuff (buffer cache, inode cache, file table, hard disk)

- Boot xv6 and start the first process

- RISC-V Machine mode -> Supervisor mode; xv6 jumps to kernel space

- RISC-V Machine mode -> Supervisor mode; xv6 jumps to kernel space

Virtual memory techniques¶

-

paging hardware:

- terms

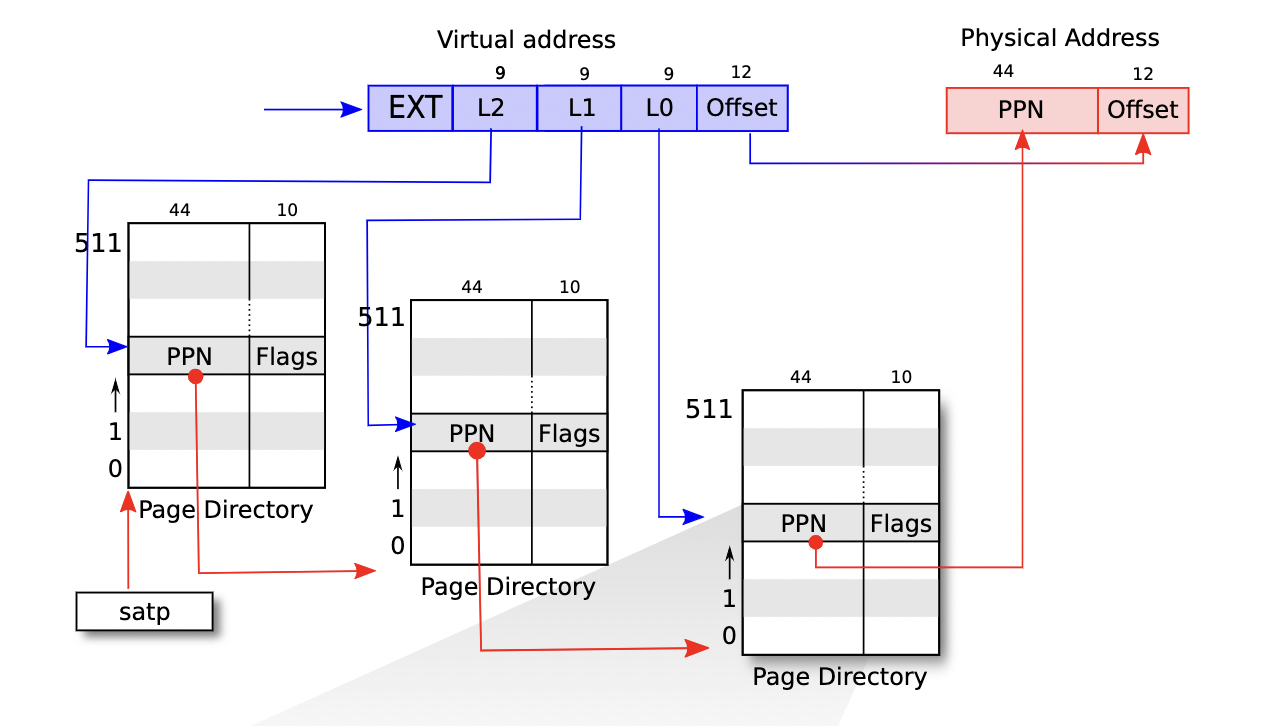

- RISC-V hw supports page table. Its instructions deals with

va. - Sv39 RISC-V only uses the bottom 39 bits to represent

va. - Storage cell DRAM is where r/w physical memory.

- Virtual memory is not physical as va and pa but the abstraction and machanisms to manage va and pa.

- RISC-V hw supports page table. Its instructions deals with

- geometry:

va(39 bit) = PTE_index (27 bit) + flag bit (12 bit). PA (56 bit) = PPN (upper 44 bits of the PTE) + offset (lower 12 bits of VA). 2^27 = # of PTE. satp: supervisor address translation and protection, reserves thepaof page tables.-

- terms

-

Cache of (VA, PA) is Translation Lookside Buffer when finding PA for the VA

- TLB will be cleared when switching page table. RISC-V instruction for that is:

sfence_vma

- TLB will be cleared when switching page table. RISC-V instruction for that is:

-

Address space:

-

Xv6 maintains 1 page table per process in the user space and 1 page table for kernel space.

-

Kernel uses direct mapping to get access to RAM and memory mapped devices.

-

Not directly mapped: high-memory mapping

- trampoline page

- stack pages: have an unmapped guard page with PTE invalid.

-

process address space and kernel address space

-

-

I/O path

- physical memory allocation: free pages are stored in the linked list.

- Syscall

- sbrk: grow/shrink the memory

- exec: create the user part of an address space

Page tables¶

Translation of va to pa: Page tables are stored as a three-level tree in physical memory. The 27 bits of a virtual address denote the indices of three levels. The root level tree is a 4KB page which holds 512 PTEs which point to the next-level page via the physical address (PPN) stored at the PTE. Each of those pages hold the 512 PTEs for the final level in the tree. If any PTE required by the va is not there, a page fault exception occurs.

High-memory mapping indirectly maps trampoline page and kernel stack (along with the guard page) high of virtual address space. They are also accessiable through direct mapping, making one virtual address mapped twice. Using direct mapping only would waste physical memory whose corresponding virtual memory is unmapped for allocating guard pages. If a kernel stack page in the kernel address space overflows, kernel panics for invalid mapping of the correspnding guard page.

Each process has a page table. If the user stack of a process overflows, the hardware generates a page-fault exception for invalid mapping. The kernel is not a process. A process is a running program together with all the state the kernel needs to manage it.

The limitations of xv6’s paging mechanism:

- Direct mapping and known address of the physical RAM supported by QEMU.

- The RISC-V feature of protection at the level of physical address is not used.

- Xv6 allocates 4096-byte pages. It doesn’t support small allocations and thus cannot use sophicated data structures that require dynamic allocation.

Traps¶

Trap machanism¶

- What:

traprefers to event that mandatorily forces a transfer of control from the execution of ordinary instructions to special code that handles the event. Three kinds of such event are: syscalls, exception and device interrupt.- syscalls:

ecall - exception: u/k illegal instruction, e.g. divide by zero, invalid virtual address

- device interrupt: device signals when r/w req finishes

- syscalls:

- RISC-V machinery:

- The kernel writes address/value to registers to control io path (risc-v detects regs)

stvec, addr of trap handlersepc, risc-v saves program counter when a trap occurs.sretcopiessepctopcwhen returned from trap.scause, risc-v saves the cause of the trapsscratch, a value at the start of a trap handler (as from scratch)sstatus,SIEset if interrupts are enabled.SPPtells user/supervisor mode a trap came from and controls whatsretreturns

- The registers related to traps handled in supervisor mode. Xv6 has a similar set of registers for traps handled in machine mode.

- Each CPU has its own set of registers. >=1 CPU may be handling a trap at anytime.

- Procedures of risc-v h/w: if an interrupt -> no, disable SIE ->

pc => sepc,sw SPP => sstatus-> set scause, su mode ->stvec => pc-> execute new pc

- The kernel writes address/value to registers to control io path (risc-v detects regs)

- Trap machanism

-

- Traps from user space

- Traps from kernel space

Page fault exceptions¶

key mechanism: when a CPU cannot translate a virtual address to a physicall address, the CPU generates page-fault exception.

Copy-on-write (COW) fork

- fork allocates physical memory for the child and copies parent’s memory into it. The idea of COW fork is to share the parent’s memory to the child. We cannot let the parent and the child to directly access the physical memory because of disruption of multiple writes.

However, a safer way is to share memory with the read-only permission.

- If either parent or child can write a given page, the CPU raises a page-fault exception.

- The trap handler responds by allocating a new physical page and copying the memory from the physical page that the faulted address points to.

- The kernel then changes the PTE in the faulting process’s page table to point to the copy and to allow r/w. After that, it resumes the faulting process.

- The process will write to the new physical page (copy).

- Meanwhile, the users of that original physical page can still read it without copying.

COW fork needs book-keeping to track when the physical page can be freed. An optimization is that if there is only one user of the physical page, no copy is needed.

COW is an optimization mechanism and needed for a case when two or more processes have separate memory spaces and are isolated. The kernel optimizes it by allowing copies only when it is needed such as writes. It’s different than the case of shared memory model where multiple processes modify the same memory space and see the change.

Lazy allocation: When an application asks for more memory, the kernel is aware of the size increase but doesn’t allocate physical memory until a page fault incurs on one of those address.

Demand paging: The kernel creates the page table for the user address space and marks it invalid.

- Paging to disk: store only a fraction of user pages in RAM and map the rest on disk in a paging area. The pages on disk (paged out) are marked invalid and must be read from disk to RAM (paged in) for an application to access, which is triggered by a page fault on a invalid memory access.

- If there is no physical RAM, a RAM page must be evicted to the paging area on disk (paged out) and marked invalid.

- Eviction is expensive.

Demand paging is advantageous when the memory is scarce.

Memory mapped file

MMAP creates a new mapping of the contents of a file on disks in the virtual address space of the calling process/program. The program then accesses the file pages as if they reside in memory. OS automatically evicts unused pages if memory fills up.

The procedures of MMAP are:

- Mmap retruns a pointer to the memory-mapped file contents.

- The OS reserves a part of virtual address space of the calling process/program.

- The program accesses the file’s contents via the pointer.

- The OS attempts to retrieve a page (

va). - If no valid mapping exists for the va, a page fault exception occurs which loads the file’s content on disk to physical pages and creates a new mapping from the

vato the newpa. The initiating CPU core also caches this PTE in its local translation lookaside buffer (TLB).

Device interrupts¶

- Interrupts are one type of trap. Trap handler recognizes a device interrupt and calls the driver’s interrupt handler.

- Device drivers execute in two contexts:

Top half: run in a process’s kernel thread, read/write()Bottom half: interrupt handler copies the input data to buffer and wake up top-half code to do op. It runs in theinterrupt contextwhich is also referred toatomic context, unable to block.- Linux has opposite names for those two contexts.

- Terms

interrupt contextis time-critical that is not associated with processes. Interrupt handler putting one process sleep may let other process blocking therefore it cannot sleep in this context.process contextis the mode of operation the kernel is in while it is executing on behalf of a process (LKD3 Chap7). Process can sleep or invoke the schedulor

- Driver is programmed by memory mapped I/O.

- How xv6 displays “$” in console?

device -> (uart->interrupt ->) console - How xv6 displays “ls” in console?

key stroke -> keyboard -> (uart input -> merge to byte -> interrupt)->interrupt handler - Riscv interrupts related registers: SIE(supervisor interrupt enable), SSTATUS(supervisor status), SIP(supervisor interrupt pending), SCAUSE: means interrupts, STVEC: program counter of user program that cpu runs when trap/interrupts/page faults occur

- timer interrupts: to maintain its clock and enable it to switch among processes. Execute in the machine mode.

- CLINT (core-local interruptor)

- time-slice CPU

- Interrupts have high CPU overhead, ways to reduce the need for interrupts

- single interrupt for a batch of incoming requests

- disable interrupts and check the device for attention:

polling, wastes CPU time when the device is mostly idle - dynamically switch between interrupts and polling

- Date movement to h/w

- programmed I/O: UART driver, kernel buffer => user buffer => h/w, low speed

- DMA (direct memory access): most modern drivers, user-space buffers <=> device hardware, high speed

Concurrency control¶

Lock¶

- Xv6 has two locks: spinlocks and sleeplocks

- spinlocks

- continue polling for the lock. Hold a lock for a short time. Cannot

yield CPU(a thread switch). - Cost: polling

- disable interrupts: if a lock can be interruptted, then the interrupt acquires the same lock that is being used which leads to dead lock. -> concurrency in same CPU

- sw vs atomic_swap: sw differs in different CPU settings. It may have one more instruction which disables the atomicity. -> concurrency in different CPU

- continue polling for the lock. Hold a lock for a short time. Cannot

- Sleeplocks:

- sleep until certain condition occurs. Hold a lock for a long time. Can yield CPU. (ex, mutex)

- Cost: high CPU overhead due to context switch (open another kernel thread)

locked, a word that is zero when the lock is available and non-zero when it is held.

- spinlocks

- Why locks: one data stucture are accessed by multiple processes(race condition) -> atomicity in critical section.

- How? system designers should define orders of locks for the purpose of performance and correctness

- memory barrier: tells the compiler and CPU to not reorder loads or stores across the barrier,

__sync_synchronize()

Scenario 1: race condition in kfree()

Attempt 1: corse-grain locking/two processs are accessing one sharing data structure and one of them updates the data.

scenario 2: rename files: rename(“d1/x”, “d2/y”)

Attempt 2: lock operations not data structures.

Terminology:

- Deadlock: unlocking A requires B, unlocking B requires A, A and B are locked. example: deadly embrace

Limitations of xv6: xv6 avoids lock-free programming. Although, programming with locks is also hard.

Threads¶

-

Why threads? Run parallel programs

-

What: One thread is the state of one serial execution. Thread states include PC, REGS, STACK, [state:runnning, runable, sleeping]

-

How: problems of implementing threads

Assumption: one core(CPU) one thing -> thread either sleeps or runs on one core

- how to switch between processes(scheduling)

- What to save and restore in switching

- Compute-bound threads: long-running program

-

Cooperative scheduling is user processes explicitly give up control to kernel

-

Scheduling policies:

- +priority: fairness Vs throughput

priority inversion: low-priority process and high-priority process share a lockconvoys: many high-priority processes are waiting for a low-priority process that acquires a shared lock

- implementation: sleep+wakeup

sleepdisabled interrupts -> single-CPUsleep+ lock -> xv6 (multiprocessor systems), FreeBSD- use explicit process queue,

waitqueue-> Linux

wakeup:signal, wakeup one/all process- many processes are waiting for that particular channel,

thundering herd

- many processes are waiting for that particular channel,

- +priority: fairness Vs throughput

-

scheduler

- A kernel thread gives up its CPU (

sched) and switches to the kernel thread which previously-calledschedinscheduler. - xv6 holds p->lock in

swtch: the caller ofswtchacquires p->lock in one thread and the callee ofswtchreleases it in other. This breaks convention of locks where the holder of a lock will also release it.

- A kernel thread gives up its CPU (

Problem1: what if the process runs for a long time? -> [Pre-emptive scheduling] the process is:

- user processes run

- time interrupts make it give up control to kernel

- kernel then yields the CPU

Problem2: lost wake-up problem, wakeup before sleeping and no wakeup while sleeping

- another process blocking (acquire lock that is not released by sleep(object)) while sleeping -> Fixed by sleep(lock, object). Sleep will realese the lock after sleeping. It holds the lock before sleeping.

- sleep/wakeup + spinlock, a synchronization method

Example of context switching swtch between a kernel thread and a scheduler thread (kernel/proc.c):

-

The xv6 scheduler has a dedicated thread (saved registers and stack) per CPU. A register set is called

context. In xv6, the context struct contains the process and cpu structs. -

Switching threads means saving the registers of the old thread and restoring the previously-saved registers of the new thread.

-

Switching plan:

swtchsavesra,spwhere swtch was called and the current context in p->context. Then it restores regs from new context.1

2

3

4

5

6

7

8

9

10# sched:swtch(&p->context, &mycpu()->context);

swtch:

sd ra, 0(a0)

sd sp, 8(a0) # sw contents of sp, Offset(RegSource)

...

ld ra, 0(a1) # ld RegDest, Offset(RegSource)

ld sp, 8(a1)

...

ret

File systems¶

Design points:

- on-disk data structures for dir and files tree

- crash recovery

- coordinate multi-process to maintain invariants

- in-memory cache for fast retrieval of data

case study: ext3¶

Network stack¶

case study: meltdown¶

case study: RCU¶

Readings:

- RCU Usage In the Linux Kernel: One Decade Later, 2013

- LWN[1]: rcu basics, https://lwn.net/Articles/262464/

Terms:

- detry, directory entry metadata

- NMI, non maskable interrupts (cannot be disabled)

- type safe memory is memory that keeps its type after being deallocated.

- explicit tracking includes reference counts, reader-writer locks, events, etc.

The most basic form of RCU is a way of waiting for things to finish. … The greatest advantage of RCU is that it can wait for each of 20,000 different things without having to explicitly track each and every one of them, and without worrying about performance, deadlock scenarios and memory-leak hazards etc. that are inherent in schemes using explicit tracking.

RCU is faster than other synchronization mechanisms in the kernel by allowing a single updater and multiple readers. It caters three needs: read while updating, low computation + storage overhead (a few bytes/dentry) and deterministic completion.

Design¶

- It has a read-side critical section and a synchronization section.

- Read_lock, read_unlock: By disabling thread preemption (a thread cannot context switch in the critical section). Can be called many times. -> non-blocking

- call_rcu, synchronize_rcu: wait for the completion of all the previous caller of rcu into the critical section. It does not wait for the caller after synchronize_rcu is invoked.

- The design is based on scheduler context switch, maintaining state shared between CPUs.

- Handles compiler and memory reordering (processor) carefully. Assign_pointer, dereference.

- RCU maintains multiple versions of objects and only frees them until all pre-existing critical sections completes.

Mechanisms¶

-

publish-subscribe: enforce ordering for insertions and reads

This mechanism is designed to cope with behaviors of CPU and compiler.

- There is not guarantee that the sequential assignments are in-order from the views of compiler and CPU.

Rcu_assign_pointerenforces the assignment of gp is the last one of current assignments. - The value-speculation compiler optimizations: compiler guesses p, fetches p->a, p->b and p->c, then fetches the actual p value to check if the guess is right.

Rcu_dereference

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28// #1 publication: allow assignment without values uninitialized

// and avoid using memory barriers.

struct foo {

int a;

int b;

int c;

}

struct foo *gp = NULL;

p = kmalloc(size, GFP_kernel);

p->a = 1;

p->b = 2;

p->c = 3;

rcu_assign_pointer(gp, p) // gp = p

// #2

p = gp;

if (p != NULL) {

do_something_with(p->a, p->b, p->c);

}

// subscribtion: subscribe to a given value of the specifiled pointer

// and make sure the deference ops see initialization before any

// publish op

rcu_read_lock();

p = rcu_dereference(gp);

if (p != NULL) {

do_something_with(p->a, p->b, p->c);

}

rcu_read_unlock(); - There is not guarantee that the sequential assignments are in-order from the views of compiler and CPU.

-

wait for pre-existing rcu readers to complete, for deletion

- It is implied that read-side critical sections have completed before the next context switch. Because no sleep or blocking is allowed in the critical sections.

- A grace period waits for completion of pre-existing reads and will extend as needed.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23struct foo {

struct list_head list;

int a;

int b;

int c;

};

LIST_HEAD(head);

/* . . . */

p = search(head, key);

if (p == NULL) {

/* Take appropriate action, unlock, and return. */

}

q = kmalloc(sizeof(*p), GFP_KERNEL);

// read-copy-update: copies

*q = *p;

// and updates

q->b = 2;

q->c = 3;

list_replace_rcu(&p->list, &q->list);

// do context_switch() on each CPU.

synchronize_rcu();

kfree(p); -

multiple versions of recently updated objects, for reader

- Deletion example: two versions of one pointer values are only kept in the critical sections. After exiting, on readers references that pointer and it will be safely freed.

Usages¶

- wait for completion. Put a spin_lock around rcu critical section. The NMI sys runs every NMI handler in the critical section.

- reference counting. Users of the data item execute in the critical section. When freeing a data item, use call_rcu to free the memory after no other threads using the data item. It’s efficient for getting rid of updates, memory barriers, atomic operations, etc…

- type safe memory. Reverse page map maps a physical page (page_t) to all the virtual address (anon_vma_t) mappings that include that physical page. Deallocating a page without waiting for all threads to relinquish the pointer to the page.

- publish-subscribe. Rcu_assign_pointer publishes a pointer and concurrent readers read through rcu_dereference.

- read-write lock alternative. Read in the critical section, write synchronize with other writes using spin locks.

Basics¶

C¶

-

1

2

3

4

5

6

7

8

9// Want the last X bits

unsigned mask;

mask = (1 << X) - 1; // -1 is like fliping the bit

lastXbits = value & mask;

// Want X bits in the middle

unsigned mask;

mask = ((1 << X) - 1) << startBit;

isolatedXbits = value & mask; -

struct vs packed struct: if the fileds aligned

- Fact: misaligned load/store can be slow or unsupported (platform-dependent)

RISC-V convention¶

Understanding RISC-V Calling Convention by Nick Riasanovsky:

-

RISC-V abstract machine: Base ISA: Program counter, 32 general-purpose registers (x0–x31).

-

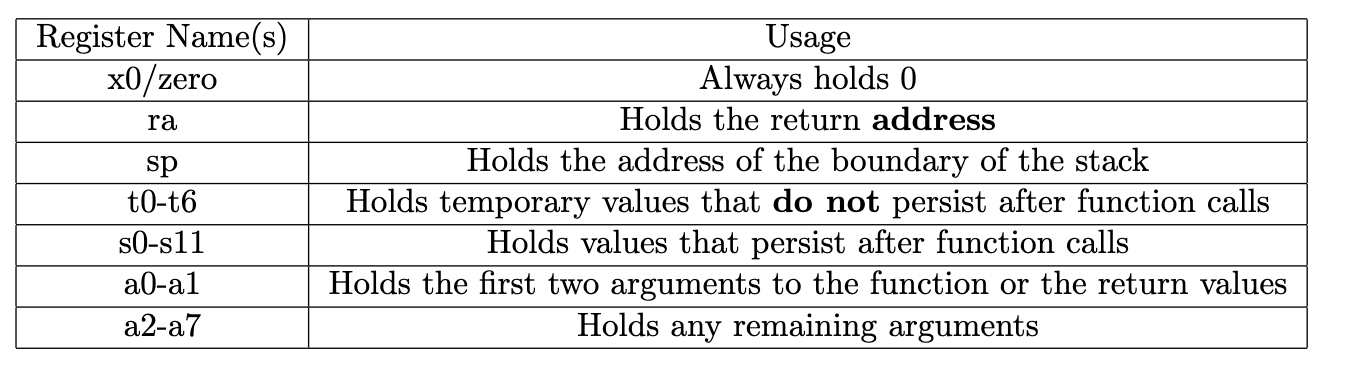

Unlike high-level programming language, convention is a choice to organize code like naming and line < 80. Assembly is built on convention. Three parts is key to understand assembly: registers, function calls, and entering/exiting a function (prologue/epilogue).

-

.section; .global means it can call this function from other files; .text means it’s executable code[2]

-

-

Registers

- RISC-V has 32 registers.

spholds the current base of the stack.rareturn address.pcholds the address of the current instruction.tfor temporary.aregs -> 8 arguments, 2 return values ina0-a1.-

freg for floating point

-

- RISC-V has 32 registers.

-

Function calls

- Used after a function call(),

sregs; unused after a call(),tregs. - (not persistent) Making a call, pass arguments in

a2-a7; (persistent) after a call, get return values ina0-a1

- Used after a function call(),

-

Prologue/Epilogue

-

guarantees:

- The sp will have the same value when exiting the function that it did entering (unless we store return values on the stack).

- All s registers will have the same value exiting the function that they did entering.

- The function will return to the value stored in ra, assuming no abnormal execution.

1

2

3

4

5

6

7

8

9

10

11def prologue():

sp -= space of (s registers + local var);

store any saved regs used;

store ra if call();

def epilogue():

reload any saved regs;

reload ra;

sp = previous sp;

jump back to return address

-

-

Stack Frames: stack grows downwards and heap grows upwards

- return address, to prev.frame(fp), saved registers, local variables… (higher <- lower addr)

-

Why this convention? Avoid to save every regs

-

Debug tips using convention

- Check that you stored

raproperly. For recursion make sure you linkrafor each recursive call. You can test this by putting a break point at the end of the epilogue and seeing where you return. - Check that you don’t use any

tregisters after a function call. - Check that

spenters and exits with the same value. - Check the number of times you enter the prologue equals the number of times you enter the epilogue.

- Make sure you restore every register you modified.

- Check that you stored

-

Instructions

-

w(word) is 32 bit in RISC-V,d(double) is 64 bit,i(immediate) is constant (see 6.004 isa reference card for the maximum value it can represent) -

Reg is 32-bit (4 byte) wide in RV32; 64 bit in RV64 etc.

-

rais the destination reg -

csrr: Read CSR (Control System Register) -

mhartidis Hart ID Register: The mhartid CSR is an MXLEN-bit read-only register containing the integer ID of the hardware thread running the code (Riscv privileged spec 3.1.5)1

2csrr a1 mhartid

csrr rd, csr -> csrrs rd, csr, x0 -

jal, jump and link;jalr, jump and link register;1

2

3

4

5

6

7// call()

jal ra lable // link ra with pc + 4 (store pc + 4 in ra), jump to lable (pc = lable);

jalr ra rd imm // link ra with pc + 4, jump to reg (pc = rd + imm)

// no call(), just jump to lable

jal x0 lable // j lable

jalr x0 rd imm // jr rd imm

-

GDB¶

gdb notes from lecture and csapp, more:

-

file filename: read symbols from that file -

show source code:

tui enable,layout split-> both C and asm,layout source-> only Clayout reg,layout asm: show reg, assemblylayout next: steps through layouts

-

info: breakpoints, program, functions, stack, frame, registers,

info b

-

execution:

step,continue,finish,run,until-

until 3: run until breakpoint 3 -

si: execute one instruction

-

-

breakpoints: you can disable/enble, break/delete it

- conditional breakpoints: break, only when a condition holds (e.g. variable has a certain value)

clear sum: Clear any breakpoints at the entry to function sum

-

watchpoints: break when a memory location changes value,

wawatch -l address: when the address contents changewatch expression: when expression changes

-

examine data:

print /[format] $raxprint /d (int)$rax: Print contents of %rax in decimal after sign-extending lower 32-bits. -> negative valueprint *(int *) 0xbffff890: Print integer at address 0xbffff890print *(int *) ($rsp+8): Print integer at address %rsp + 8print (char *) 0xbfff890: Examine a string stored at 0xbffff890x/[num][size][format] where:num, number of objects to display,size= size of each object (b=byte, h=half-word, w=word, g=giant (quad-word)),format= how to display each object (d=decimal, x=hex, o=octal, t=binary etc.)

-

Example

1

2

3

4

5

6

7

8//malloc can fail

int *a = (int*)malloc(12*sizeof(int)); //allocated memory is not initialized, should change to alloc(12,sizeof(int))

if (a == NULL) return 0;

//macro shouldnot be used interchangeably.

Lookup table¶

RISC-V regs:

| reg | name | saver | description |

|---|---|---|---|

| x0 | zero | - | hardwired zero, always 0 |

| x1 | ra | return address | |

| x2 | sp | callee | stack pointer |

| x3 | gp | - | global pointer |

| x4 | tp | - | thread pointer |

| x5-7 | t0-2 | temporary registers | |

| x8 | s0/fp | callee | saved register / frame pointer |

| x9 | s1 | callee | saved register |

| x10-11 | a0-1 | function arguments / return values | |

| x12-17 | a2-7 | function arguments | |

| x18-27 | s2-s11 | callee | saved registers |

| x28-31 | t3-6 | temporary registers | |

| pc | - | - | program counter |

Related readings:

- A nice blog explains pipe and inter-process communication in details: https://www.rozmichelle.com/pipes-forks-dups/

- risc-v examples: https://marz.utk.edu/my-courses/cosc230/book/example-risc-v-assembly-programs/

Rcu doc. More rcu readings: https://docs.kernel.org/RCU/rcu.html ↩︎

RISC-V Assembler Reference, https://michaeljclark.github.io/asm.html ↩︎