db index

DBMS’s execution engine needs to access pages before doing operation on those.

Design decisions when implementing data structures for the DBMS:

- Data organization: memory layout and information to store -> access

- Concurrency: multiple threads to access without causing problems -> efficient access

There are two types of data structures: hash table, trees.

Hash tables¶

-

Complexity: space: O(n); runtime: amortized O(1) lookup

-

hash tables implementations:

-

hashing functions: collision rate

-

schemes: handle key collisions after hashing

-

Static hashing¶

The size of the hash table is fixed. DBMS has to rebuild a larger hash tables if it runs out of storage space.

Assume: knowing the number of elements it wants to store.

-

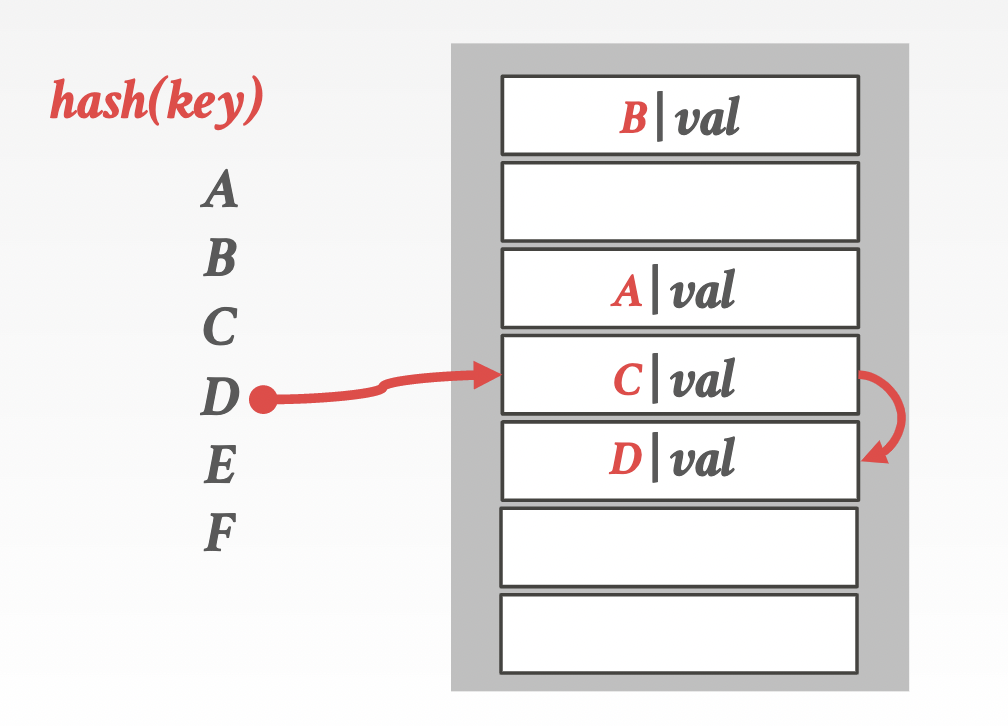

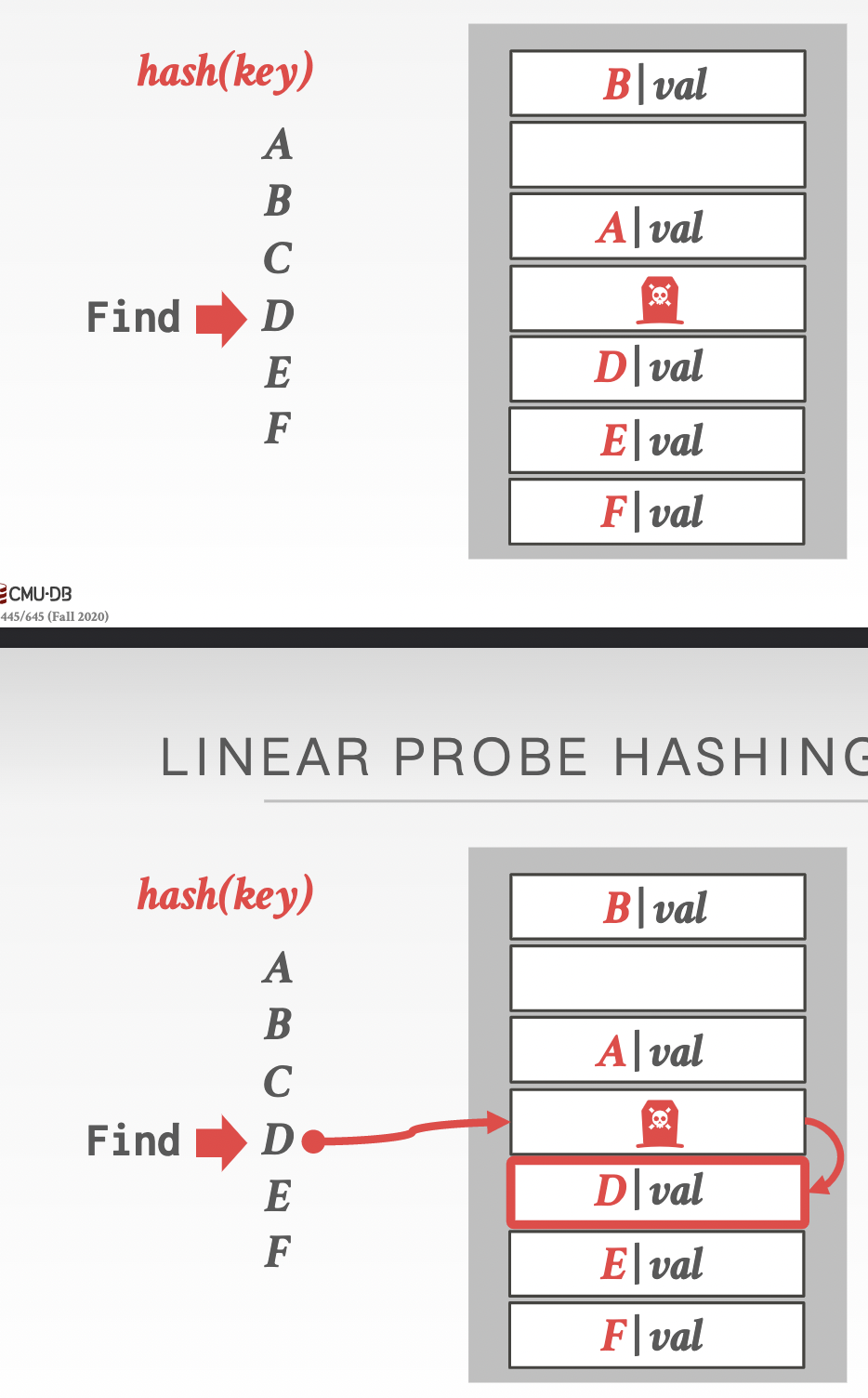

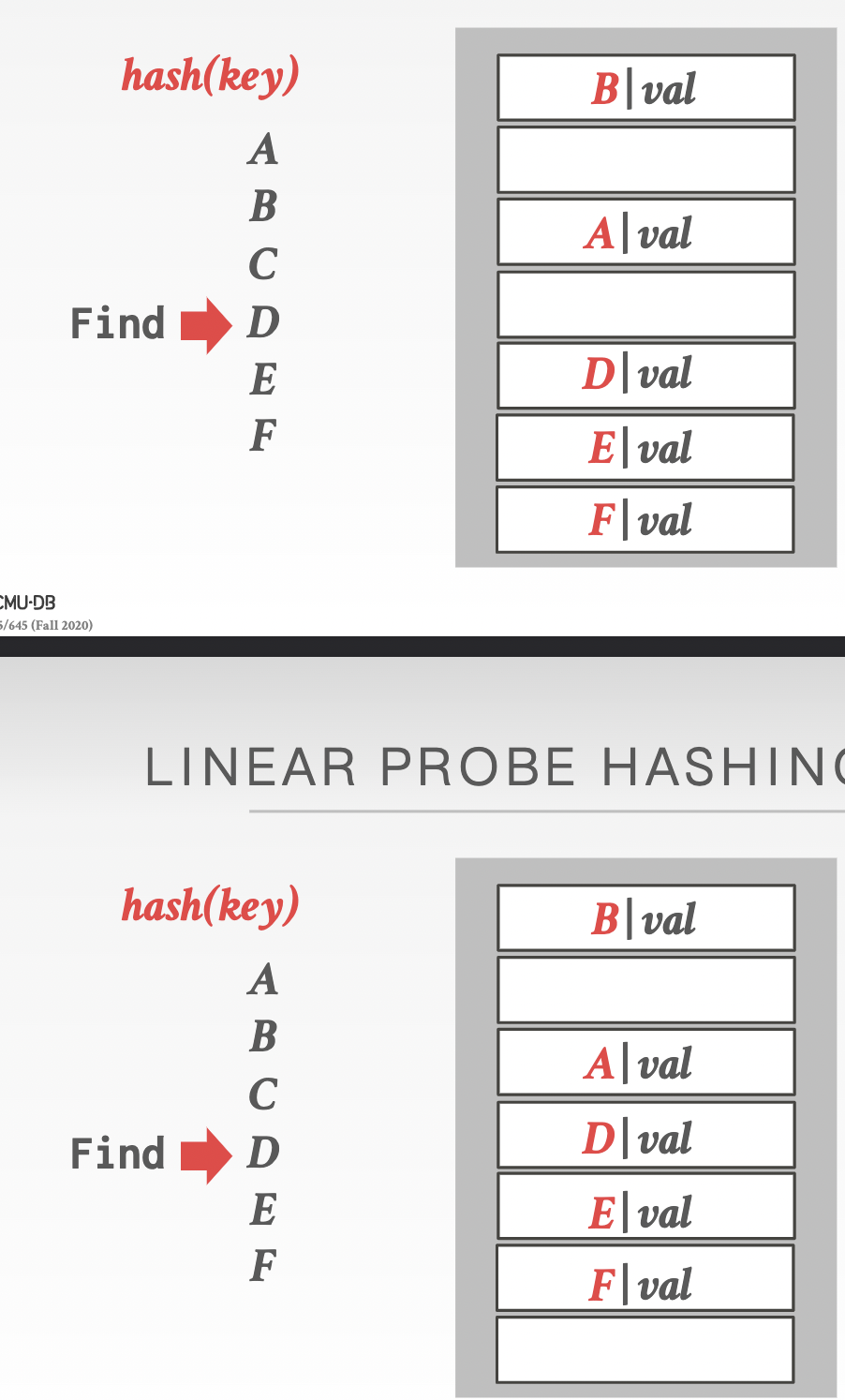

Linear probe hashing

-

collision: linearly seach the adjacent slots until an open one is found

-

Non-unique keys: the same key may be associated with multiple different values or tuples

- approach 1: separate linked list

- approach 2: redundant keys: store the same key multiple times in the table

-

deletion: removing the entry from the slot -> can’t find the slots having collisions with the deleted one.

-

approach 1: tombstone: instead of deletion, replace it with magic entry indicating to keep scanning for future lookups.

-

Approach 2: movement: shift the adjacent data after deleting an entry to fill the now empty slot.

-

-

-

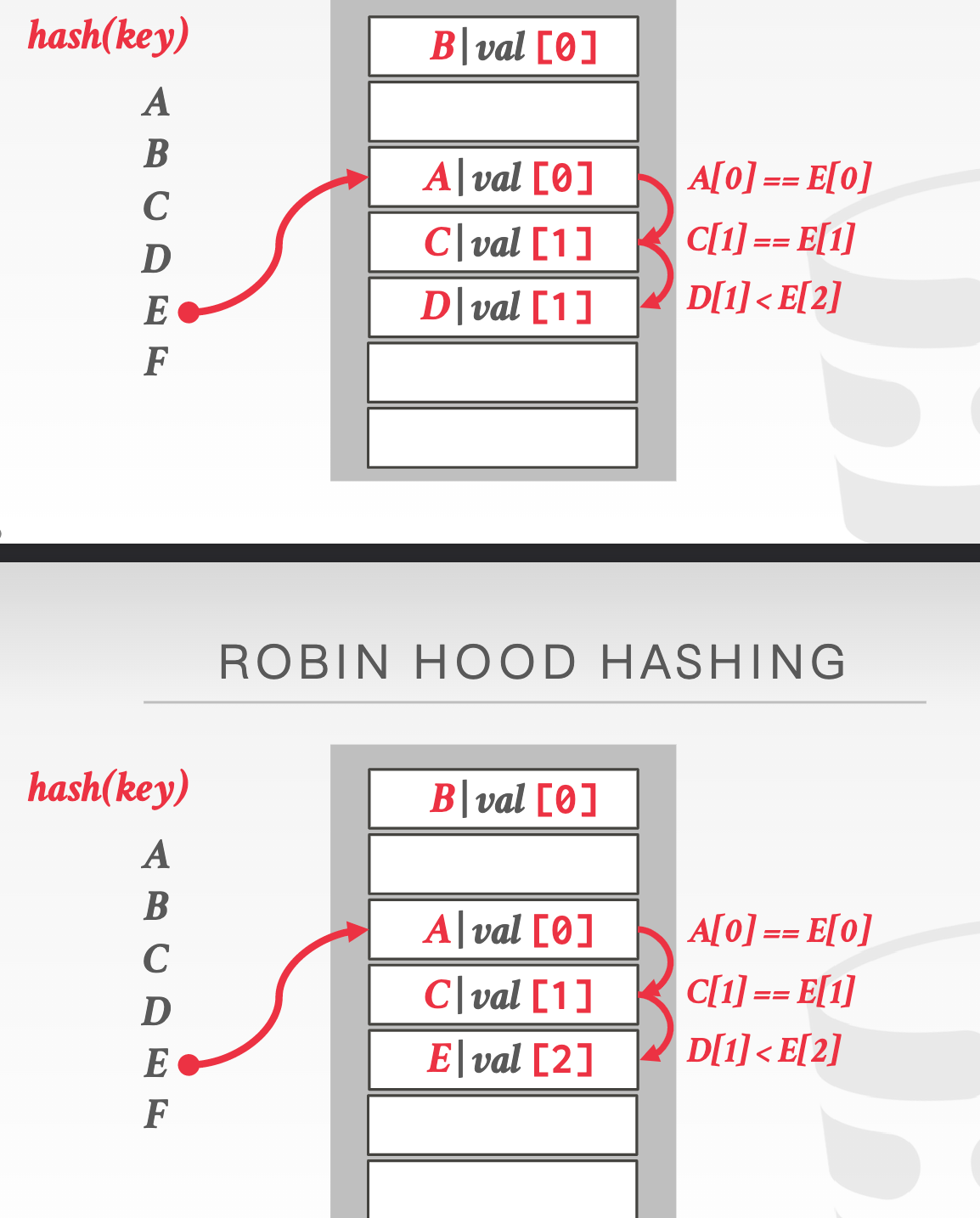

Robin Hood Hashing: steals slots from “rich” keys and give them to “poor” keys

Each key tracks the number of positions they are from where its optimal position in the table

-

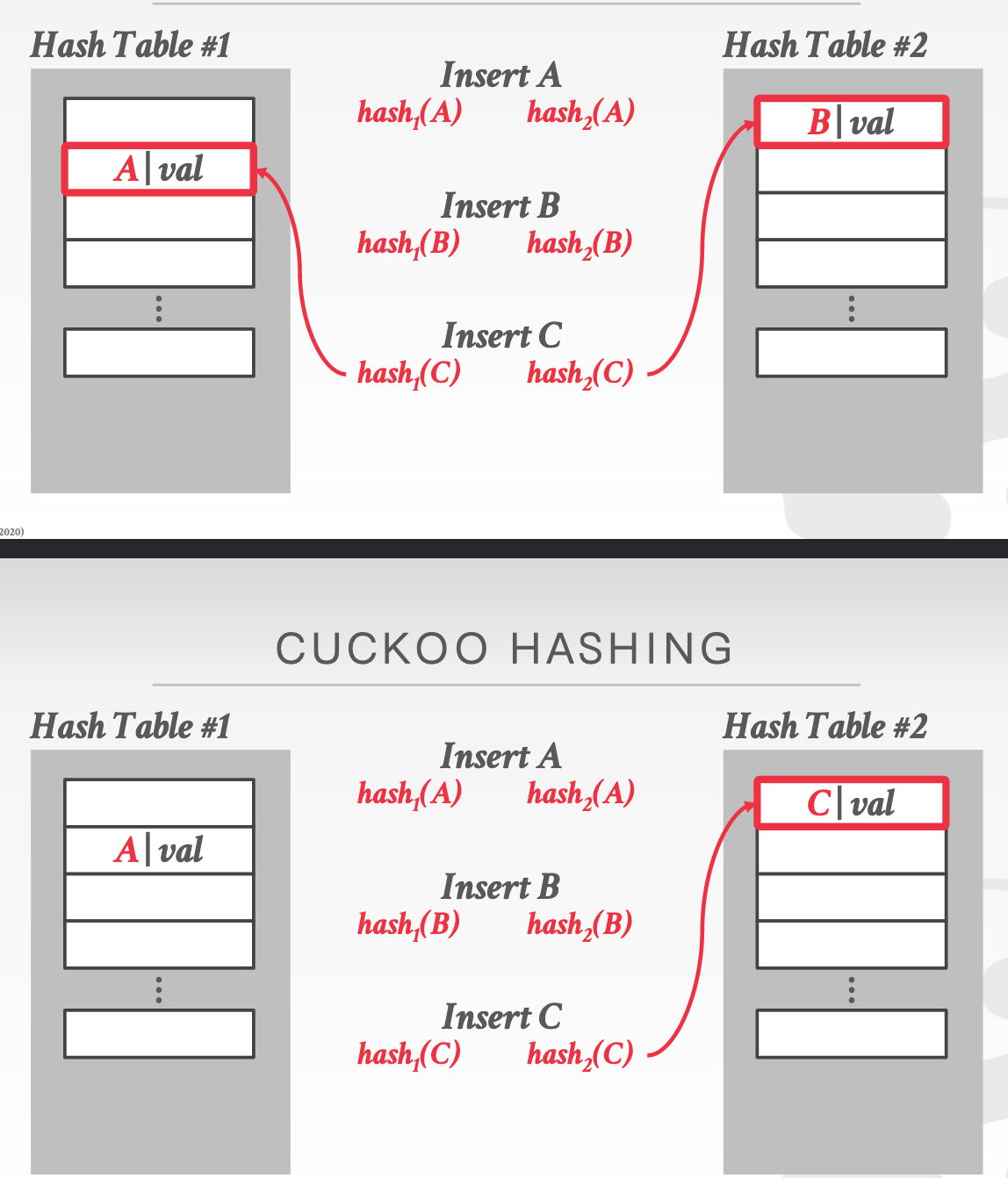

Cuckoo Hashing: Use multiple hash tables with different hash function seeds.

Dynamic hashing¶

property: Dynamic hash tables resize themselves on demand

-

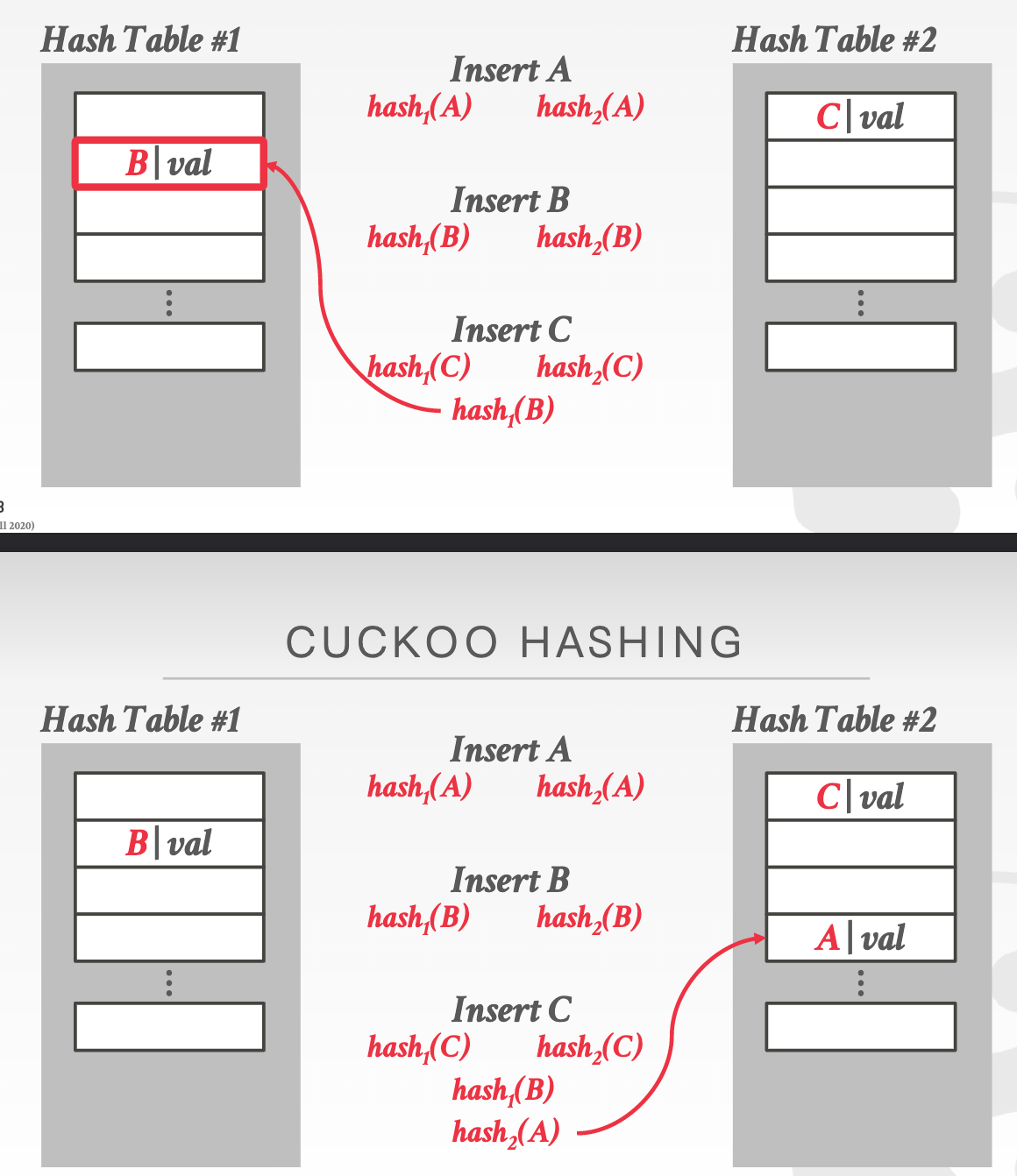

Chained hashing: linked list to handle collisions

-

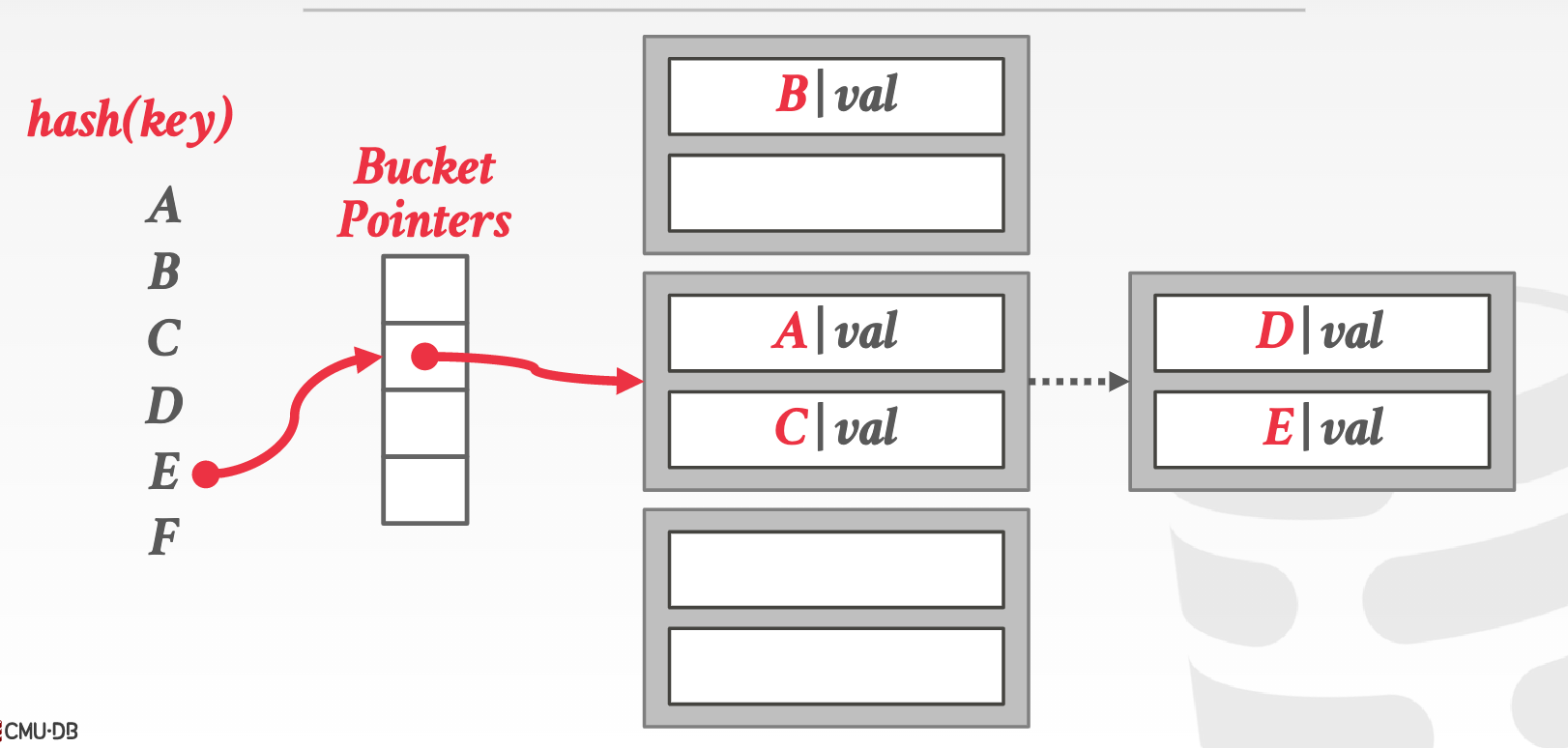

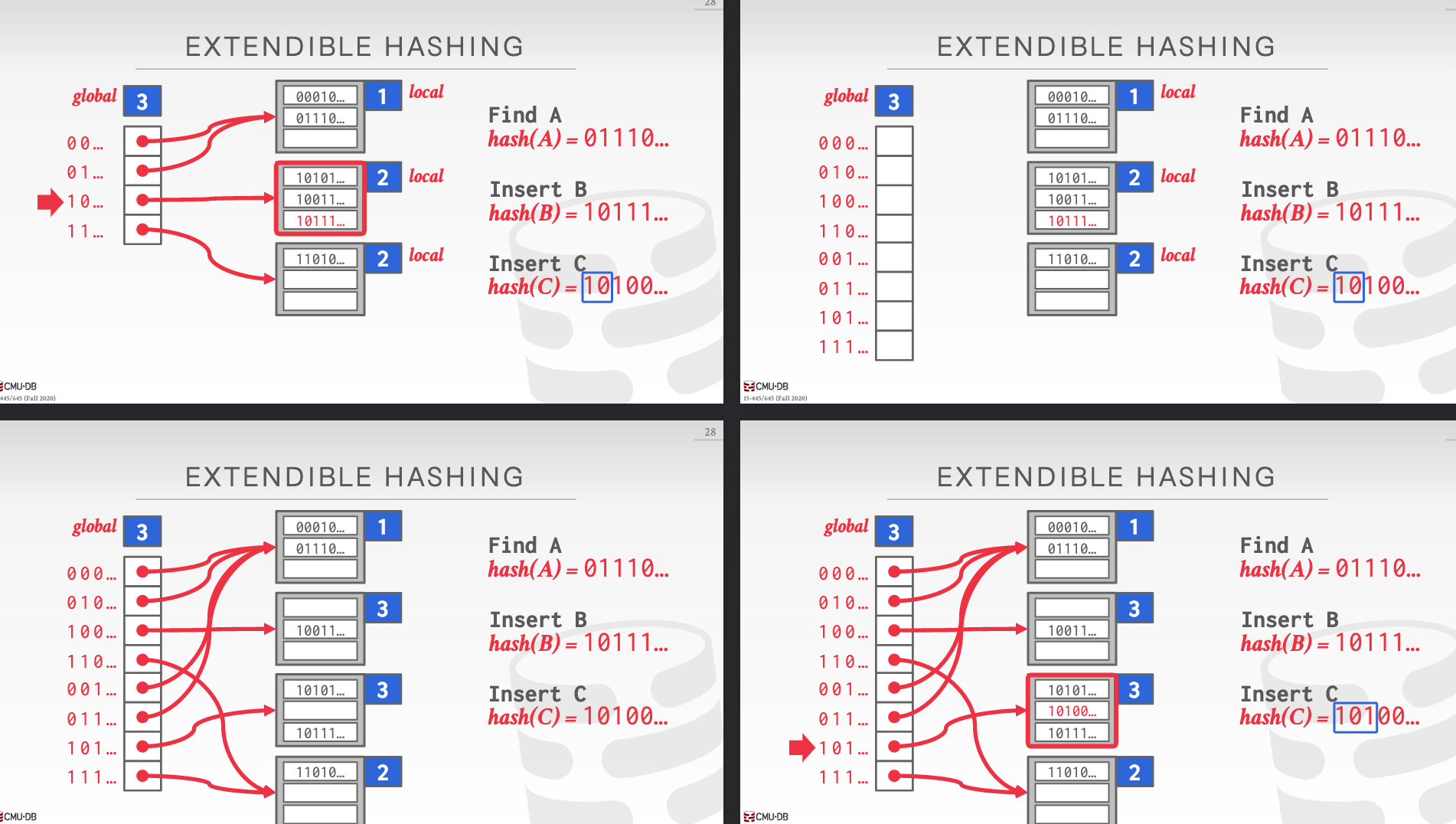

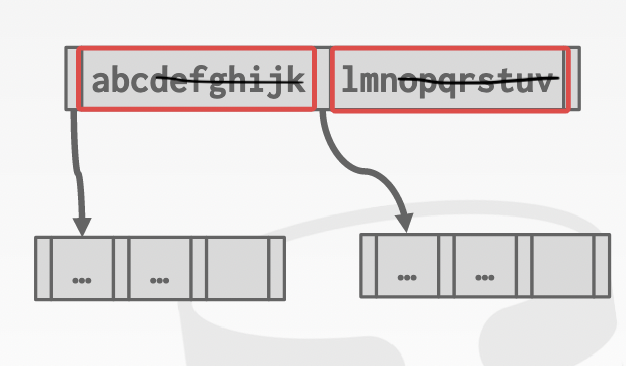

extendible hashing: splits buckets in case of letting chains to grow forever

Local/global depth bit: track slots

problem 1: what to split: data movement: move data within the buckets of the split chain; all other buckets are left untouched.

problem 2: when to split: if the bucket is full, splits the bucket and reshuffle its elements.

observe: the changed pointers to slots are on the split chain.

-

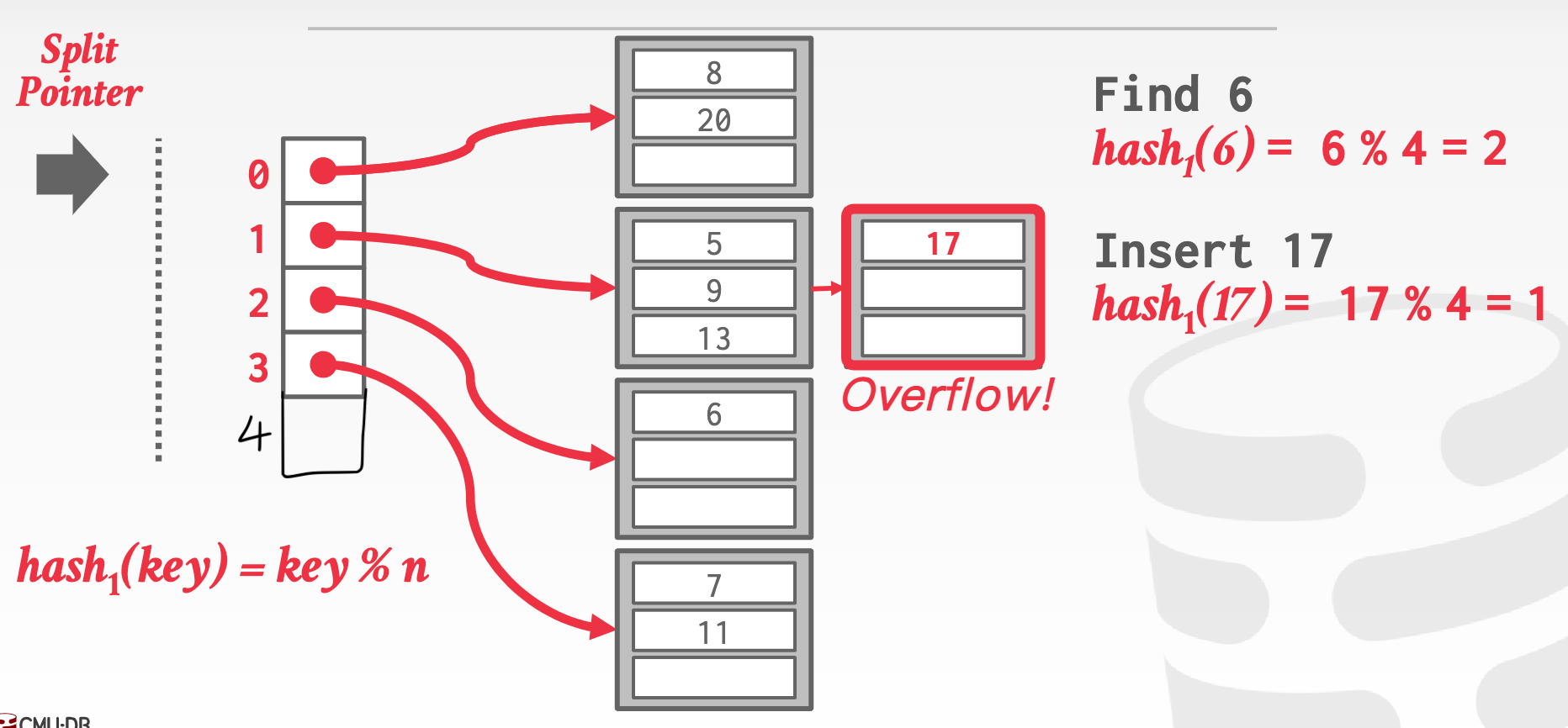

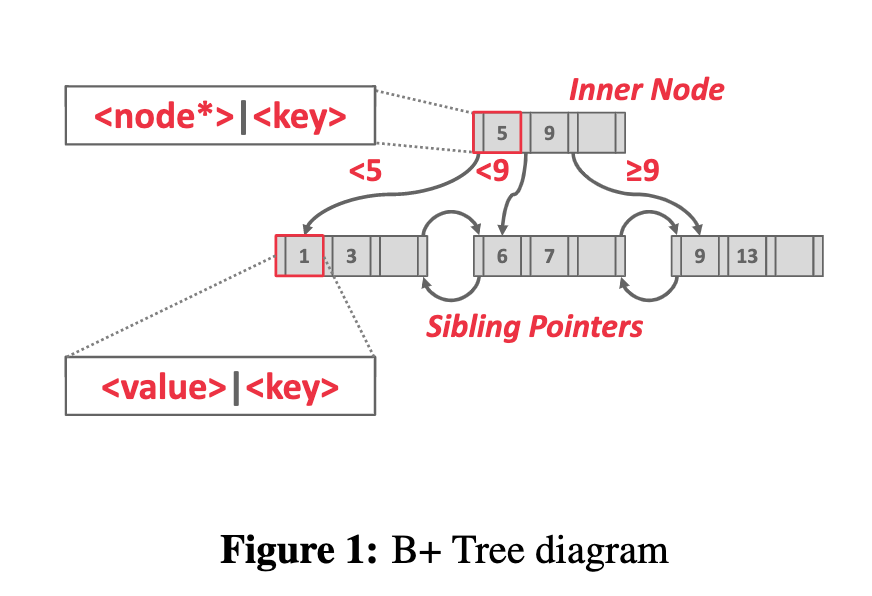

linear hashing: maintains a split pointer that keeps track of the next bucket to split instead of immediately splitting a bucket when it overflows.

Procedures:

- Add a new slot in split pointers, and create a new hash function

- Decide whether to apply the new hash fuction on new query index.

- Delete original hash function and replace with new hash function when run out of slots.

-

Insertion -> when buckets are full

-

Deletion: reverse of insertion: pointers move backwards -> when buckets are empty

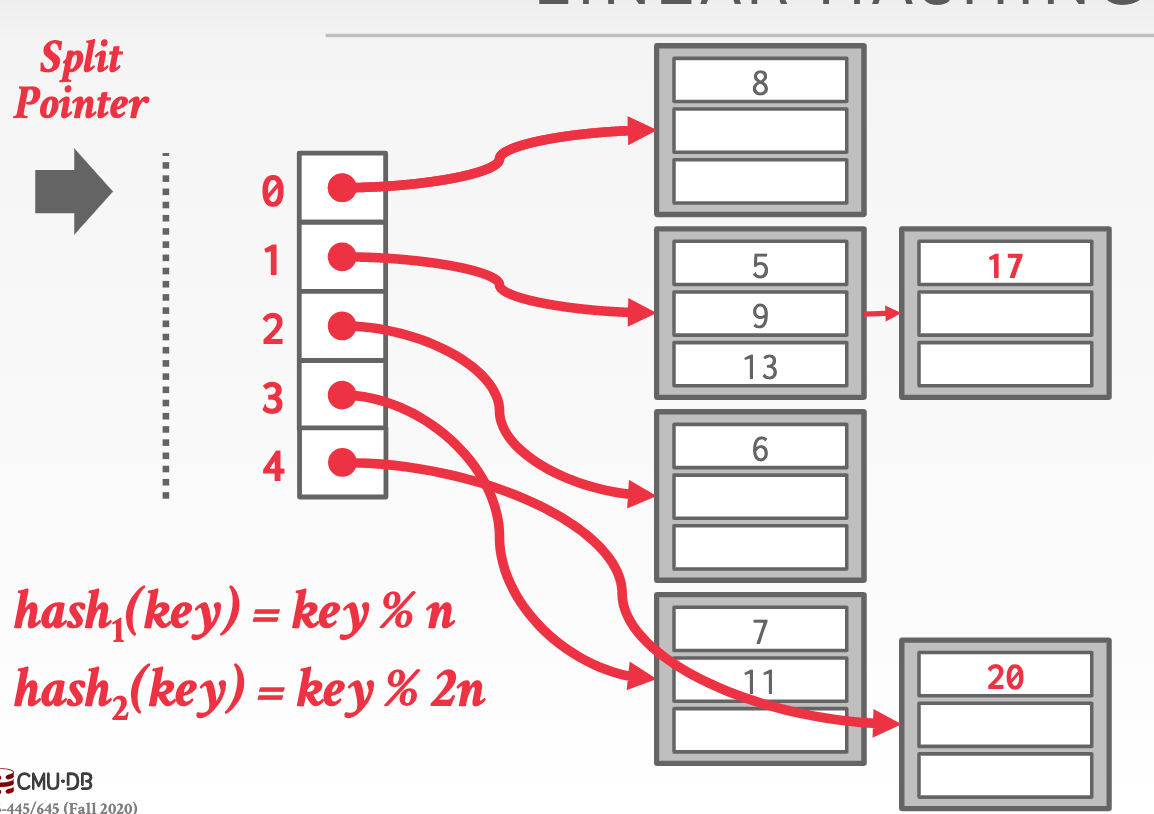

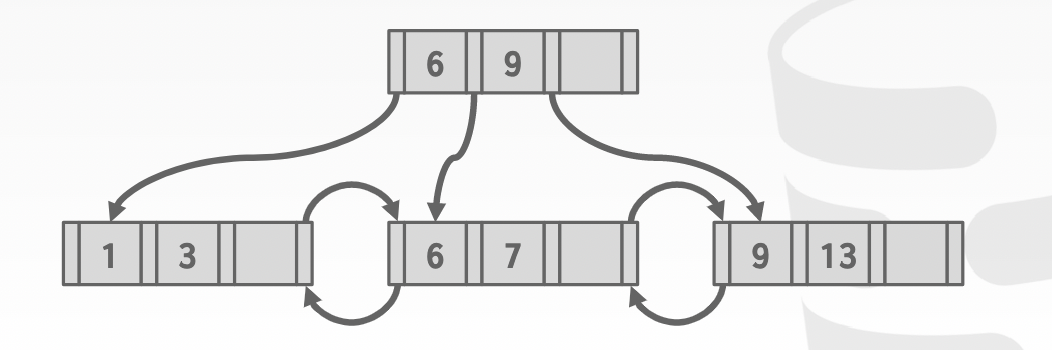

B+ tree¶

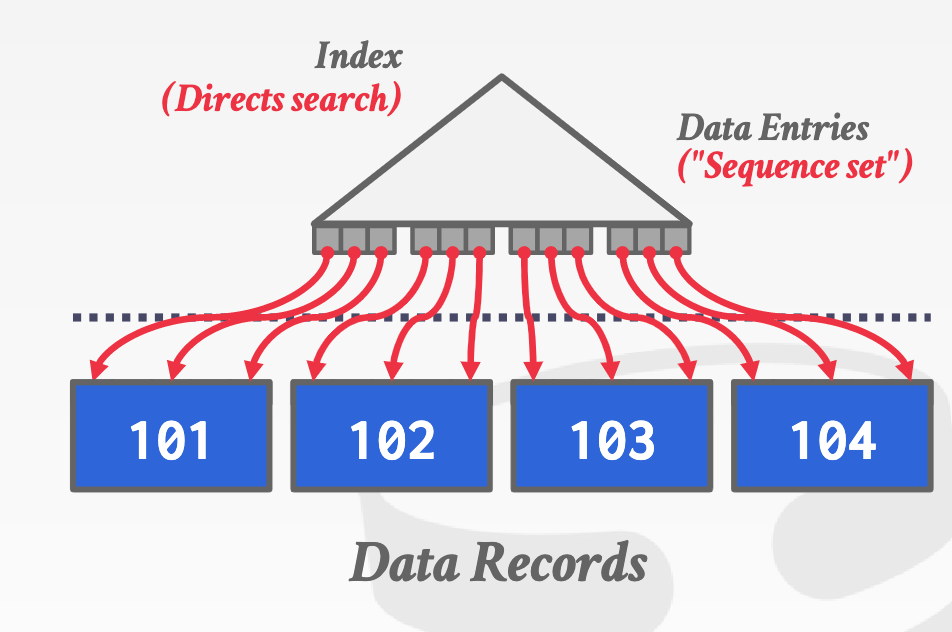

- table index: index of a table’s attributes. The table is stored in the sort order specified by the primary key. Can be either heap- or index-organized storage

- Trade-off on the number of indexes to create per database: storage overhead Vs. maintenance overhead

Overview:

-

b+ tree: a self-balancing tree data structure that keeps data sorted and allows searches, sequential access, insertion, and deletions in O(log(n)). Good at read/write large blocks of data.

-

difference with b tree: b+ tree stores values only in leaf nodes; b tree store values in all nodes.

Structure:

-

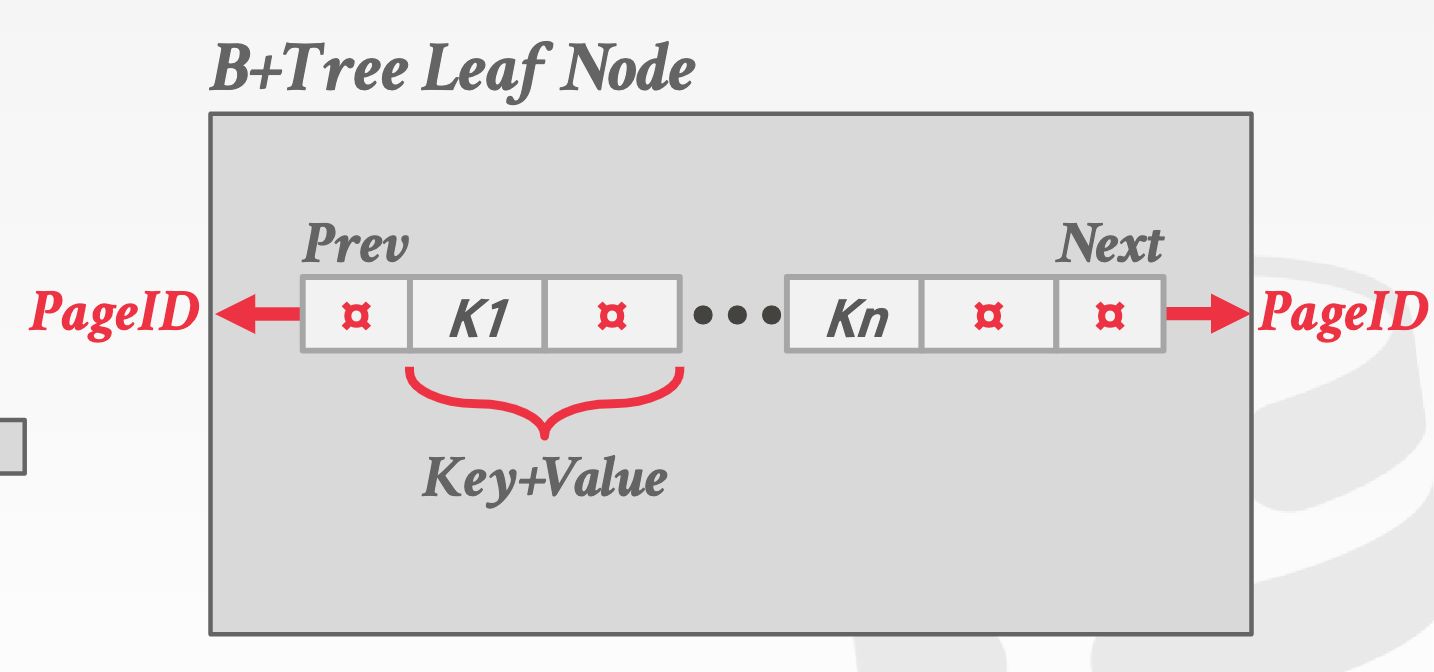

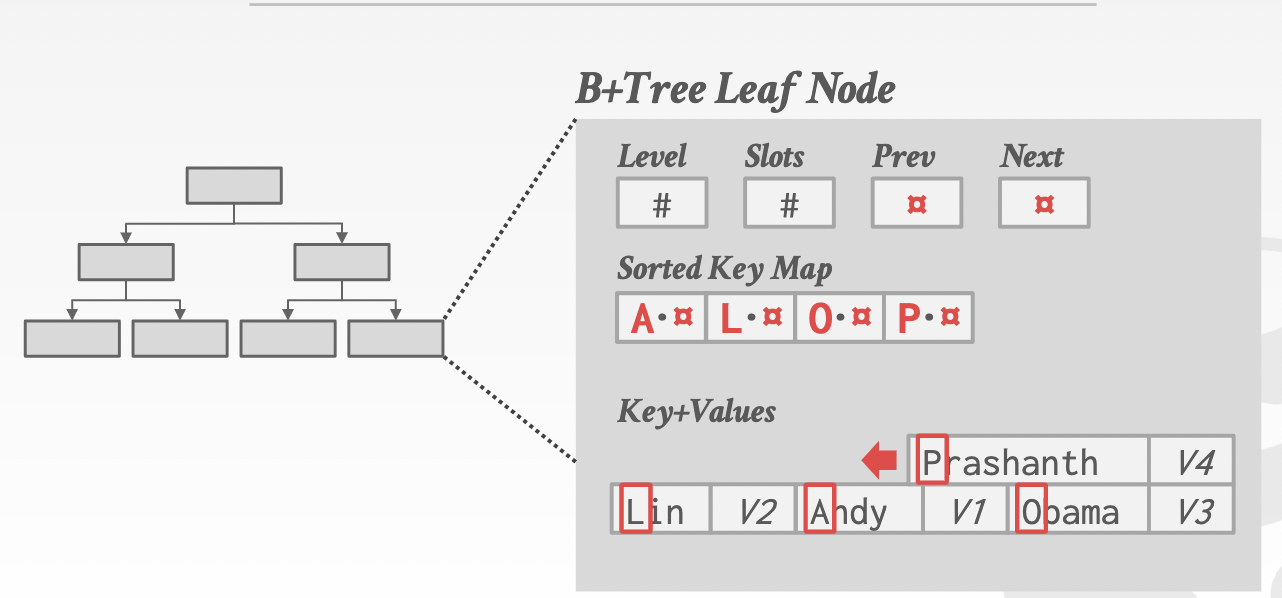

Key-value pair: inner node: store pointers; leaf node: values.

- Leaf node values have two approaches: 1) record IDs: pointers to the location of tuple; 2) tuple data: store the actual contents of the tuple in each node

-

node: Arrays at every node are sorted by the keys.

Operations:

-

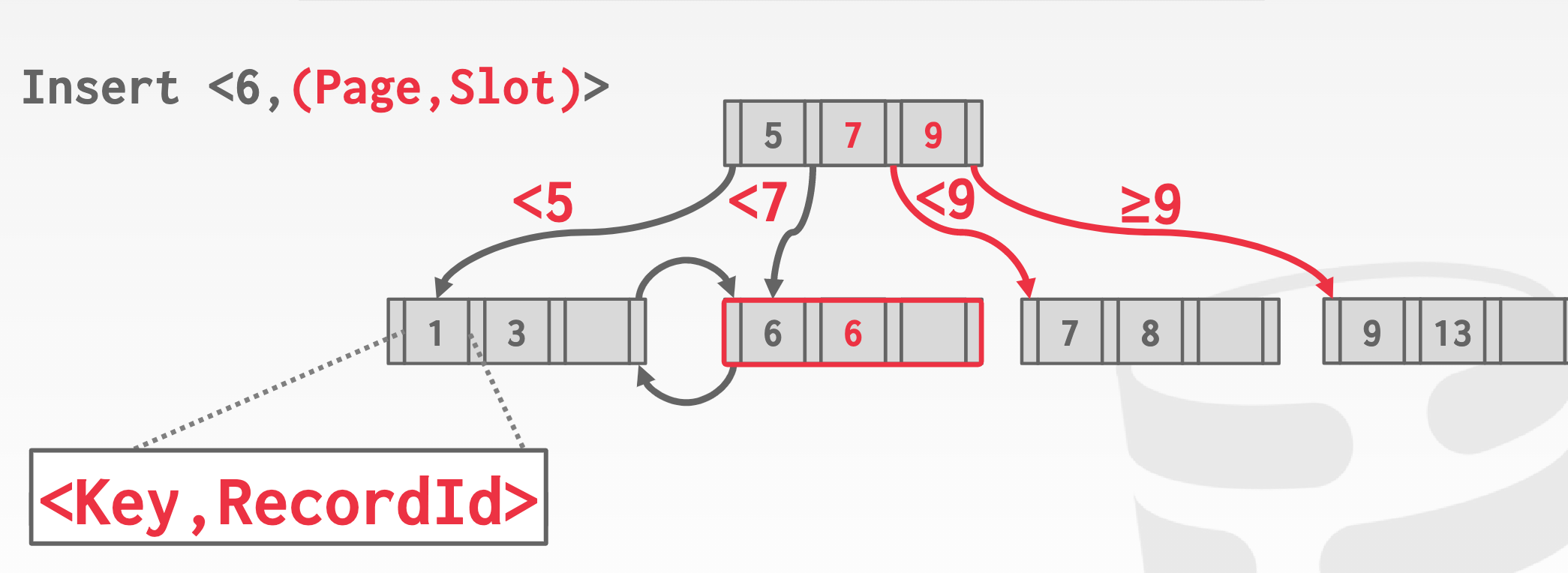

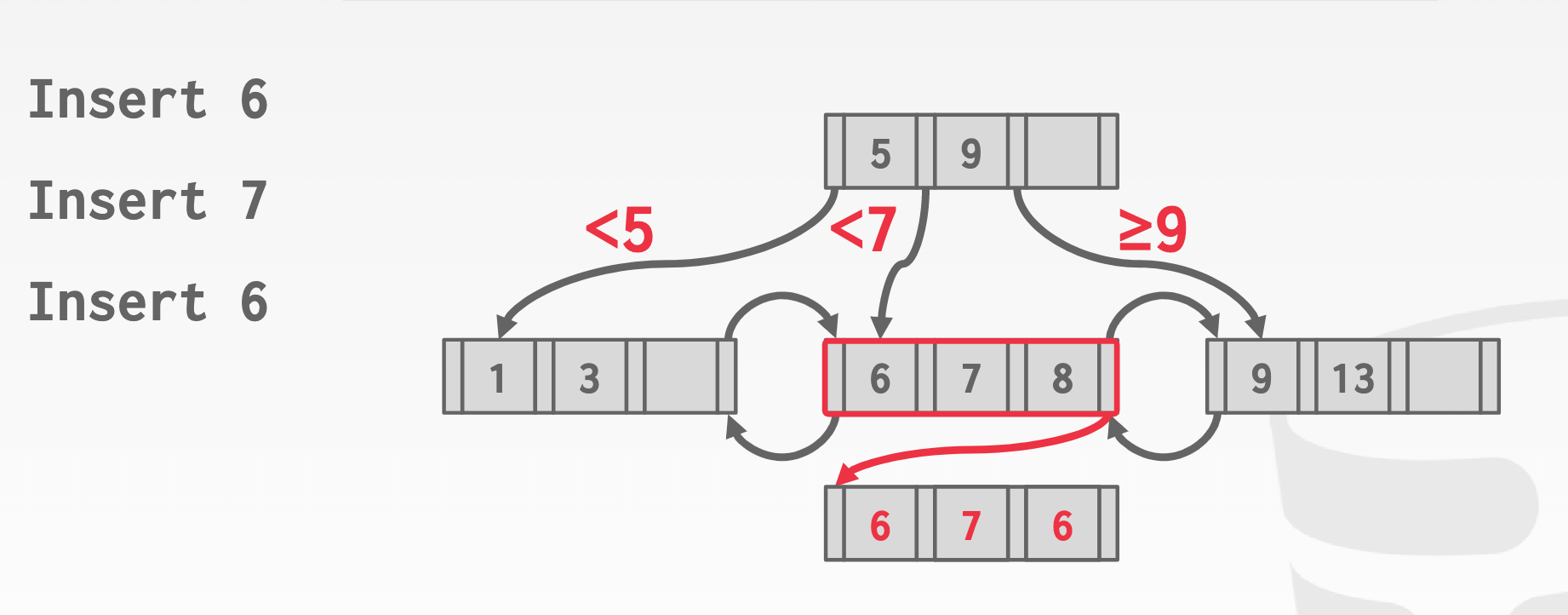

Insertion:

Split leaves when the tree got too full

- Leaf node: copy up middle key

- inner node: push up middle key

-

deletion

Redistribute by borrowing from sibling when lead is not half full; Merge nodes when redistribution fails.

- Leaf node: delete the key

- Inner node: delete entry in parent pointing to the key

-

selection conditions: Support search provides any of the attributes of the search key.

-> Hash index requires all attributes in the search key.

-

Non-unique indexes

like hash tables: 1) duplicate keys, 2)store value lists.

-

duplicate keys approaches

-

Append record IDs

-

overflow leafnodes: Allow leaf nodes to spill into overflow nodes that contain

the duplicate keys.- complex to maintain

-

-

Clustered indexes: If a table does not contain a primary key, the DBMS will automatically make a hidden row id primary key

(think about VEB tree)

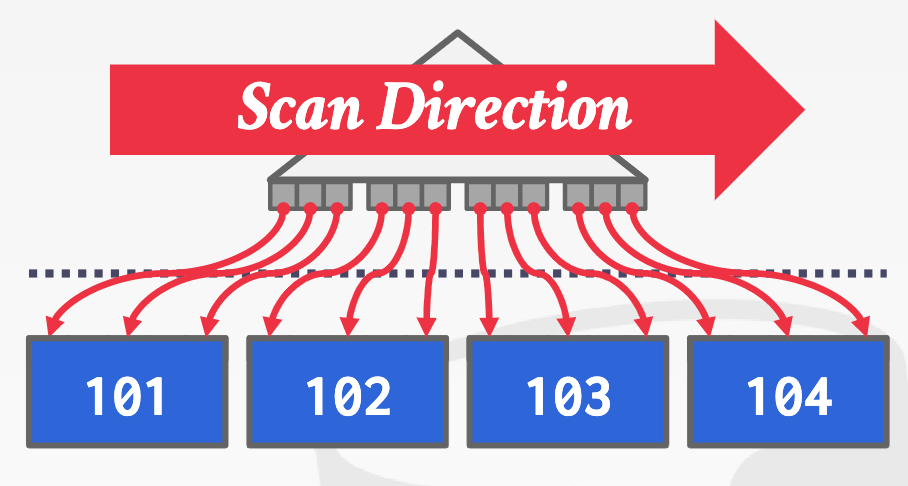

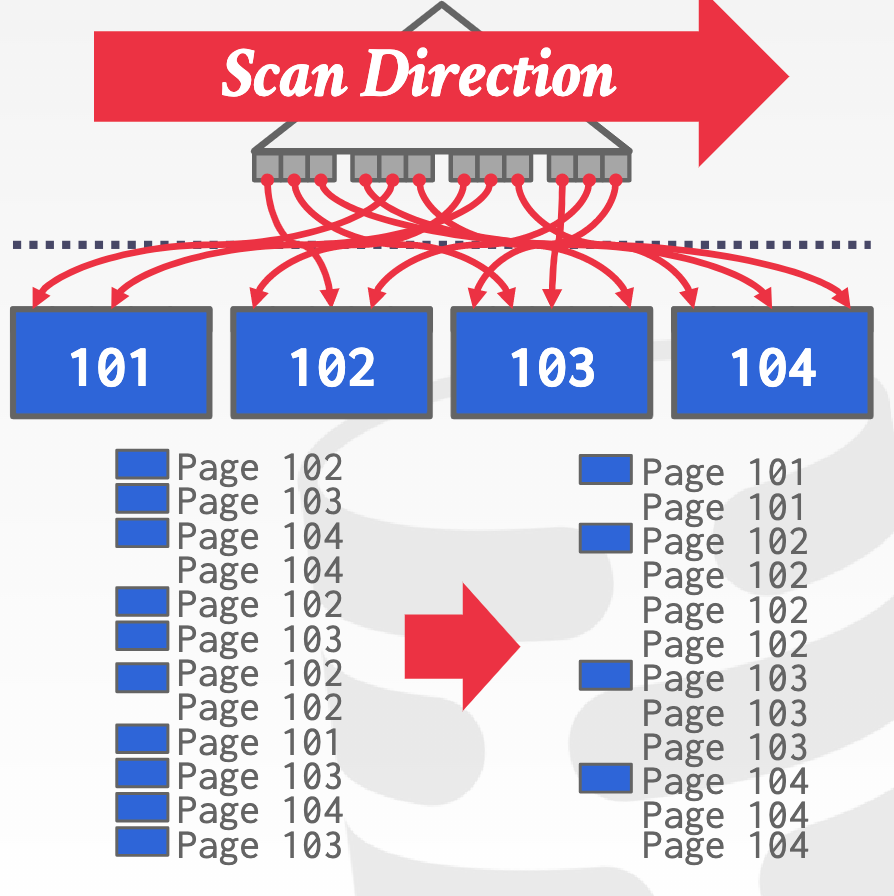

Sorting:

- heap clustering: tuples are sorted in the heap’s pages using the order specified by a clustering index. DBMS can jump directly to the pages if clustering index’s attributes are used to access tuples

- index scan page sorting: sort all the tuples that it needs based on their page id.

Design choices¶

-

node size

-

~ storage device: the faster the storage device is, the smaller the node size is. Because we want to reduce the number of accesses in slower device like HDD compared with RAM. And the amortized runtime of read over large chunk of data is more optimal.

-

~ workload: there are two types of workload: point query; sequential scan. A point query would prefer small pages to reduce extra info while sequential scan likes large pages to reduce the number of fetches.

-

-

merge threshold

- like lazy allocation in os

- Delaying merge to reduce the amount of reorganization like expensive write latches.

-

variable length keys

-

good in a way: 1)space saving: reduce a small subset of large keys lead to lot of wasted space

-

approach 1: pointers -> embedded devices: registers, cache

Store the keys as pointers to the tuple’s attribute

-

approach 2: Variable Length Nodes -> infeasible: large memory management overhead

allow nodes to have variable length.

-

approach 3: padding -> infeasible: big waste of memory

Set each key’s size to the size of the max key and pad out all the shorter keys.

-

approach 4: key map/indirection

Embed an array of pointers that map to the key + value list within the node. Place prefix of each key alongside the index.

- unlike approach 1, a4 stores the dictionary index that needs small space not the key pointers, which allows storing prefix alongside of the index.

-

-

Intra-node search: search within the node

-

A1: linear: Scan node keys from beginning to end.

-

A2: binary: Jump to middle key, pivot left/right depending on comparison

-

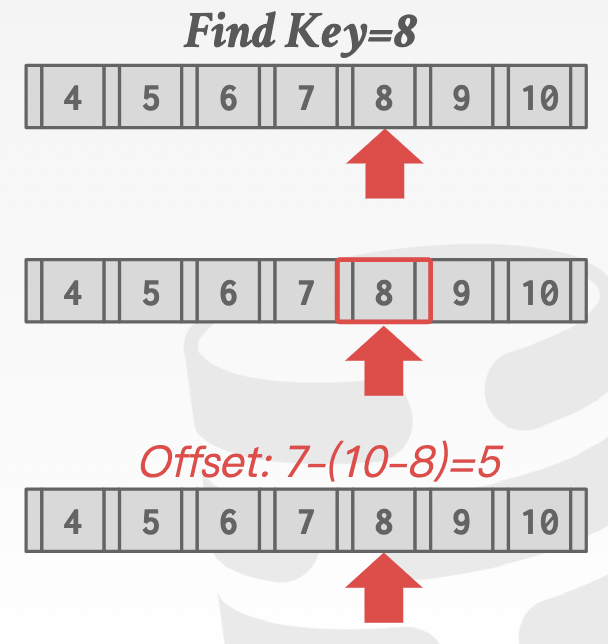

A3: interpolation: Approximate location of desired key based on known distribution of keys -> infeasible: limited applicability to keys with certain properties and complexity, only seen in academic use

take advantage of metadata(max, min ,avg …) and infer approximate location

-

Optimizations¶

-

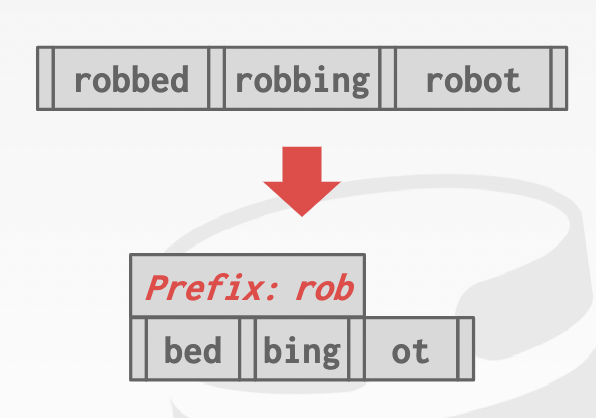

Prefix compression: extract prefix and store unique suffix for each key -> keys in the same node have overlapping prefix

-

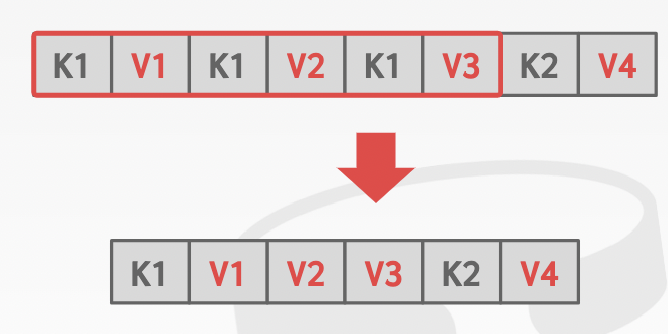

duplication: write key once and maintain a list of record ids with the key -> non-unique keys

~ MVCC

-

suffix truncation: only storing the minimum differentiating prefix of each key at a given inner node. -> No need to search the entire key

- leave few redundant digits: fault tolerance -> indeterminable insertion/deletion due to an identical prefix

-

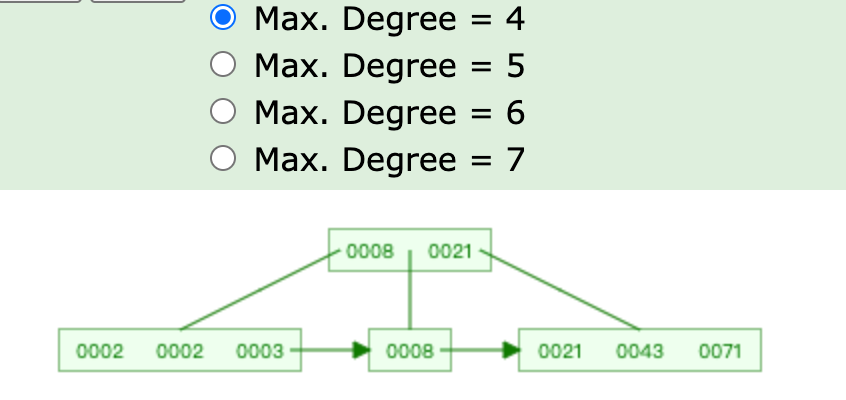

bulk insert: sort the keys and build the index from the bottom up -> fastest way to build a new b+ tree

- Trade-off: leaving vacancy or not when packing the leaves depends on context

Keys: 3, 7, 9, 13, 6, 1; sorted keys: 1, 3, 6, 7, 9, 13

-

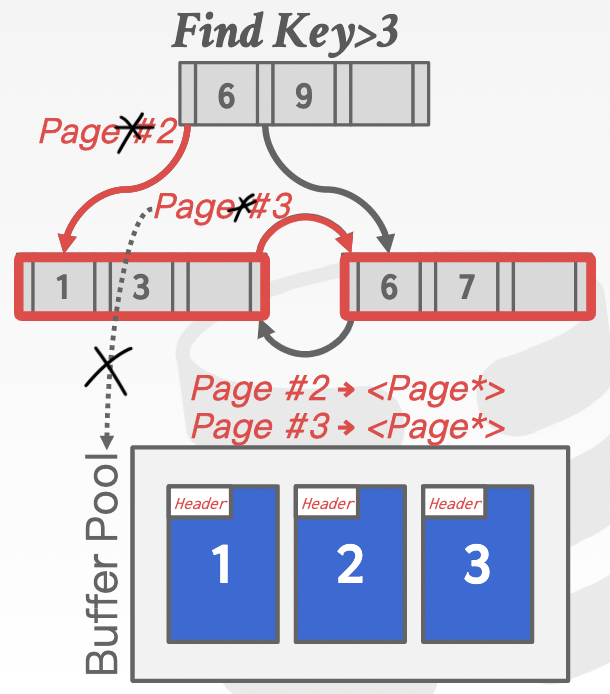

Pointer swizzling: store raw pointers instead of page ids for pinned page in the buffer pool -> reduce expensive buffer pool fetches

Index¶

-

what is index: provide fast access to data items.

-

methods

-

implicit indexes: provide primary key with integerity constraints

-

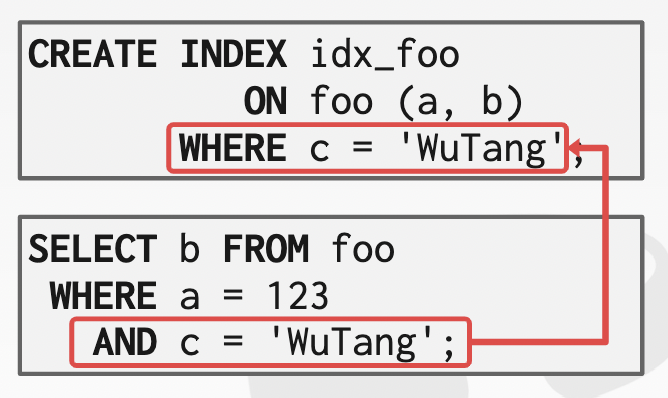

partial indexes: Create an index on a subset of the entire table. -> reduce full page fetches overhead

-

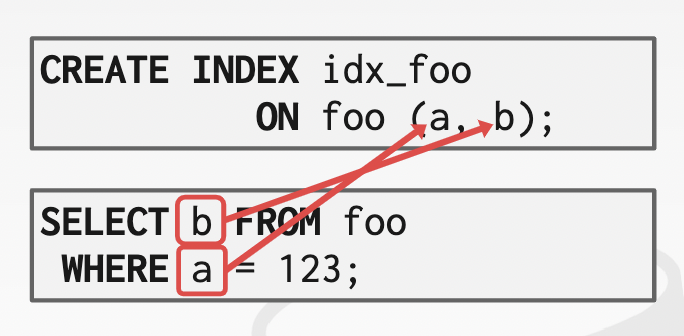

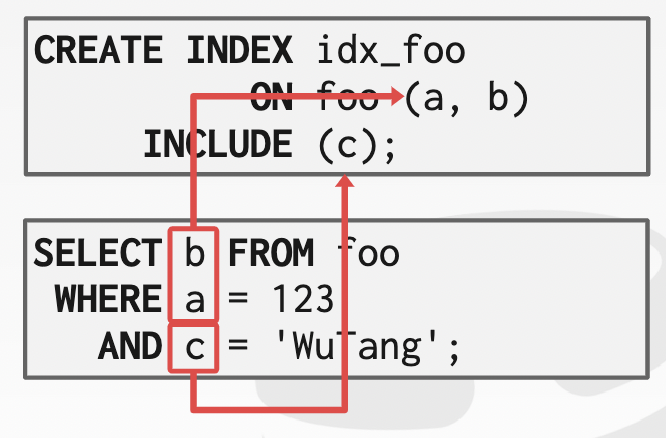

covering indexes(index-only scans): locate data records in the table and not to return data -> reduces contention on the DBMS’s buffer pool resources

-

index include columns: Embed additional columns in indexes

to support index-only queries.- Extra columns are only stored in the leaf nodes and are not part of the search key

-

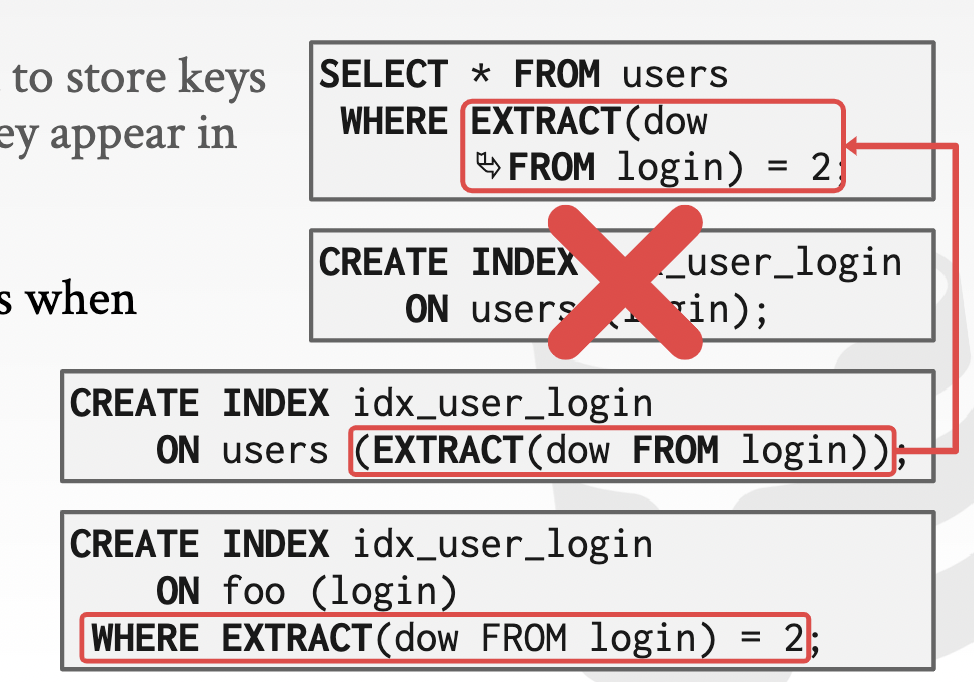

Function/expression indexes: use expressions when declaring an index. store the output of a function or expression as the key instead of the original value. -> ? which queries can use that index

-

Trie index¶

-

make oberservations: the inner node keys in a B+Tree cannot tell you whether or not a key exists in the index -> at least one buffer pool page miss per level in the tree

-

approach: trie index

-

Properties: complexity in operations and space

-

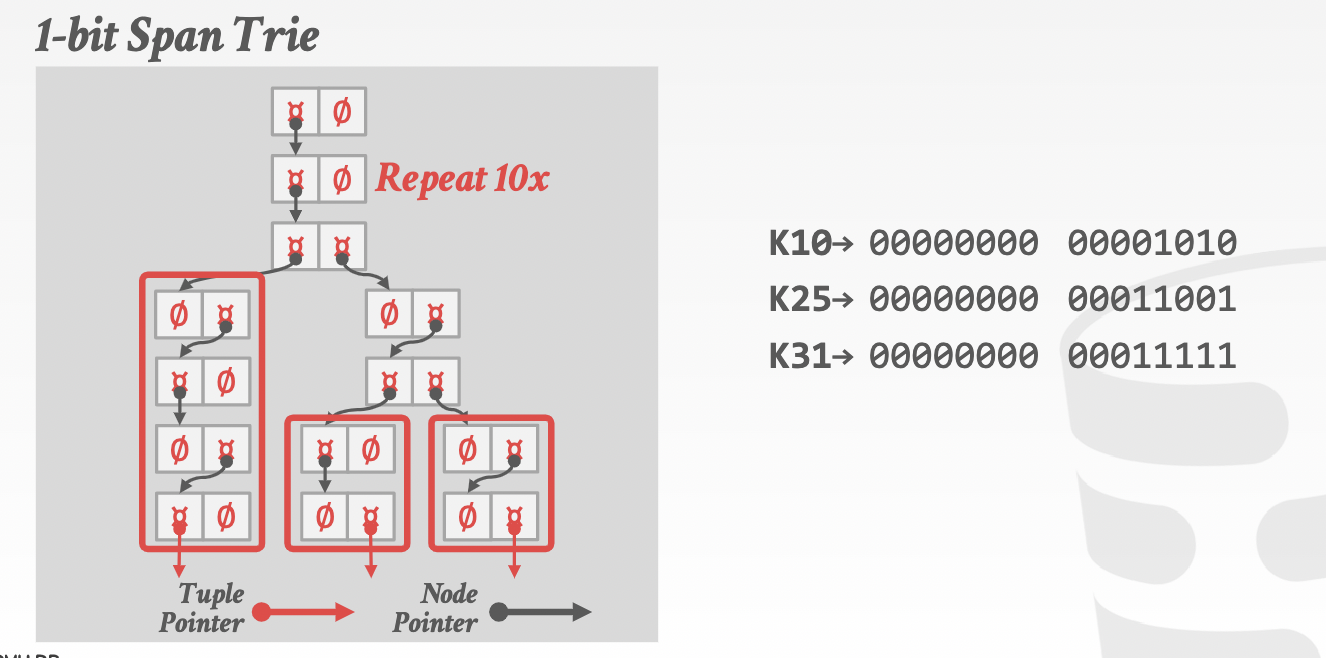

trie key span: digital representation of keys

-

Fan-out of node, height of tree

图中:空表示该值不存在,指针指向下一个位置,使用0/1的二进制表示

-

-

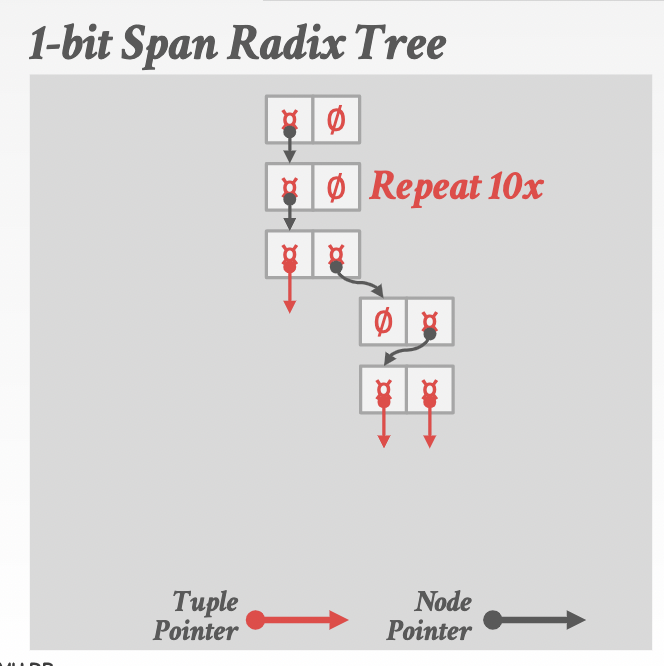

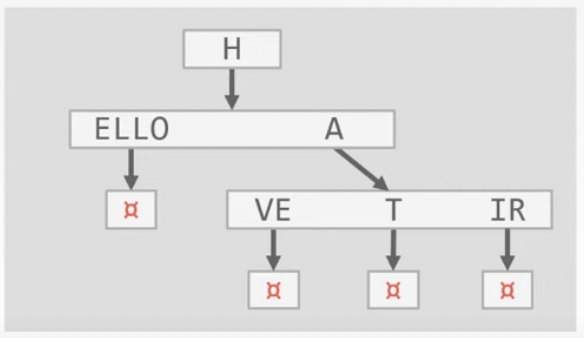

Radix tree¶

variant of trie index. Omit all nodes with only a single child.

Inverted index¶

-

make observations: Tree indexs above are useful for point and range queries; not good for keyword searches

-

Approach: inverted index(full-text search index) -> keyword search

stores a mapping of words to records that contain those words in the target attribute.

-

supported query type:

- phrase searches: records that contain a list of words in the given order

- proximity searches: two words occur within n words of each other

- wildcard searches:words that match some pattern (e.g., regular expression).

-

design decisions

- decision 1: what to store: depend on the context

- decision 2: when to update: update is expensive. So stage updates and then update in batches

Concurrency control¶

- make observations: multi-threads world means that cpu cores and disk I/O stalls matters.

- come up with a protocol: a protocol needs criteria

- logical correctness: thread view

- Physical correctness: internal representation of object soundness -> memory leak

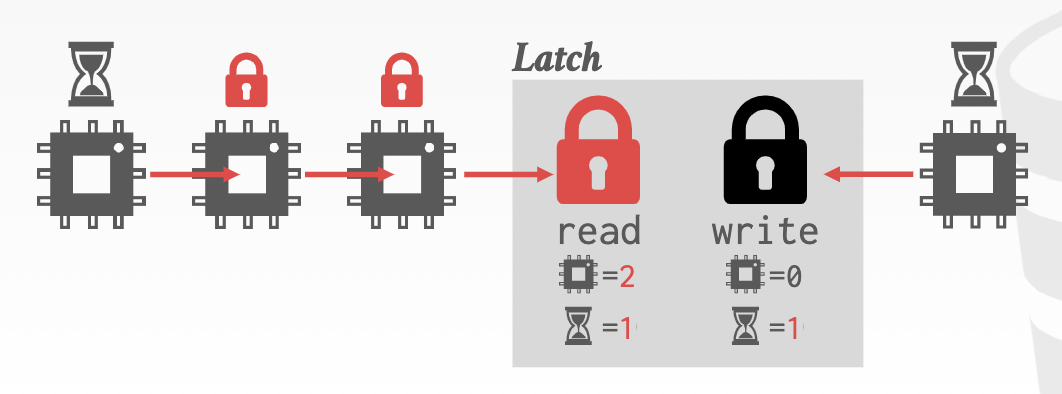

Locks vs. latches¶

- Locks: protects the contents of a database (e.g., tuples, tables, databases) from other transactions -> object view

- Latches: protects critical sections the DBMS’s internal data structures (e.g., data structure, regions of memory) from other threads -> operation view

- Read mode: Multiple threads are allowed to read the same item at the same time

- write mode: Only one thread is allowed to access the item.

Latch designs¶

latch takes advantage of CPU’s atomic compare-and-swap(CAS) instruction. A thread can check the contents of a memory location to see whether it has a certain value.

Definition: scalability of latches means if latches perform well when there is a lot of contention.

Approaches:

-

Blocking OS mutex: os build-in mutex infra.

User space -> kernel space -> fail/blocked -> descheduled

-

Pro: no extra work for DBMS

-

Con: ~ os scheduling: Non-scalable (about 25ns per lock/unlock invocation)

1

2

3

4std::mutex m;

m.lock();

//

m.unlock(); -

-

Test-and-set spin latch(TAS)

use CAS to update value -> fail -> DBMS’s choice now(keep trying/descheduled)

- Spin latch: a location in memory that threads try to update.

- pro: efficient operations

- con: not scalable nor cache-friendly

1

2

3

4

5

6//ex: std::atomic<T>

std::atomic_flag latch;

...

while (latch.test_and_set(...)){

//

} -

Reader-writer locks

based on two modes of latches, keep track of reads and writes.

-

pro: concurrent reads

-

con: extra work for DBMS management; larger storage overhead

-

Practice¶

-

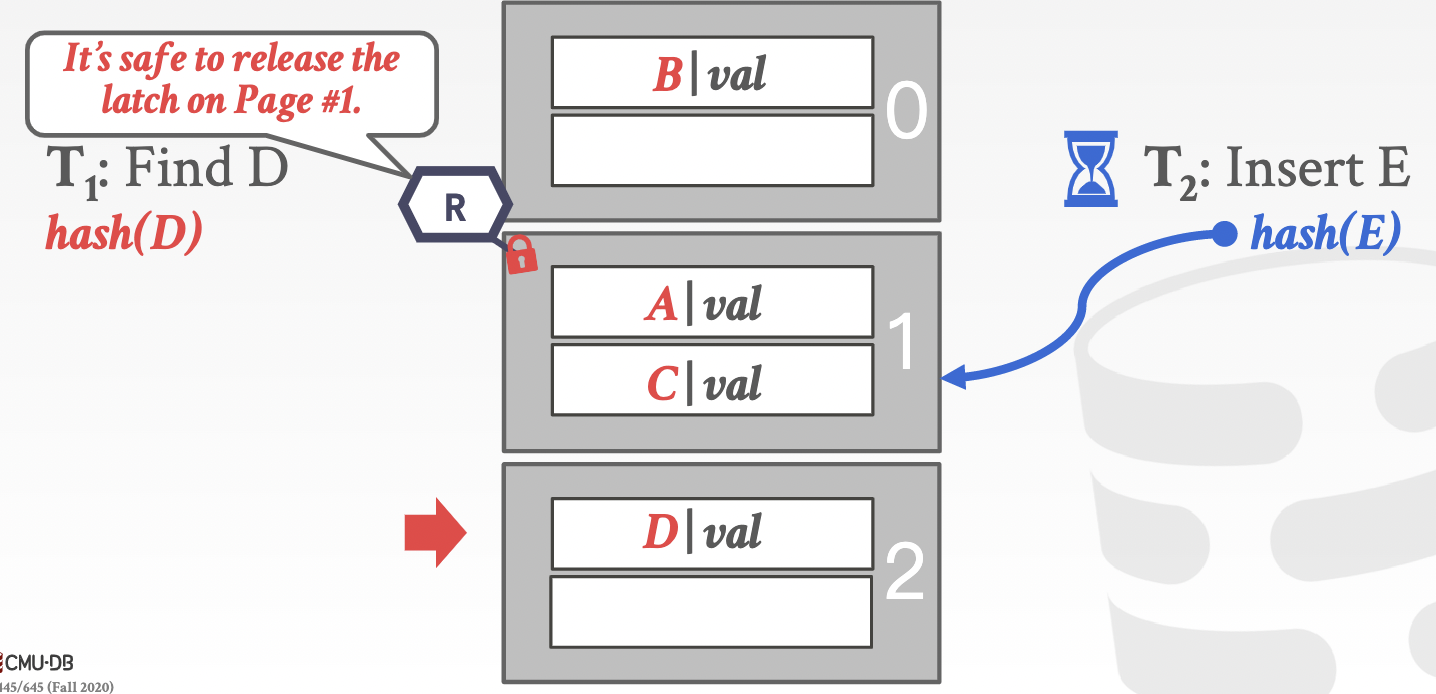

Hash table

-

observation 1: Limited ways threads access the data structure(top-down) -> no deadlock problem -> global latch

-

Trade-off: Parallelism vs. storage, computational overhead of accessing the table, efficiency

-

approach 1: page latches

-

approach 2: slot latches

-

-

b+tree

Observation 1: several way to access b+ tree(top-down/sibling pointers/…) -> come up with a protocol to allow multiple threads to read and update a b+ tree

Problem 1: modify same node at the same time

Problem 2: one thread traversing the tree while another thread splits/merges nodes

-

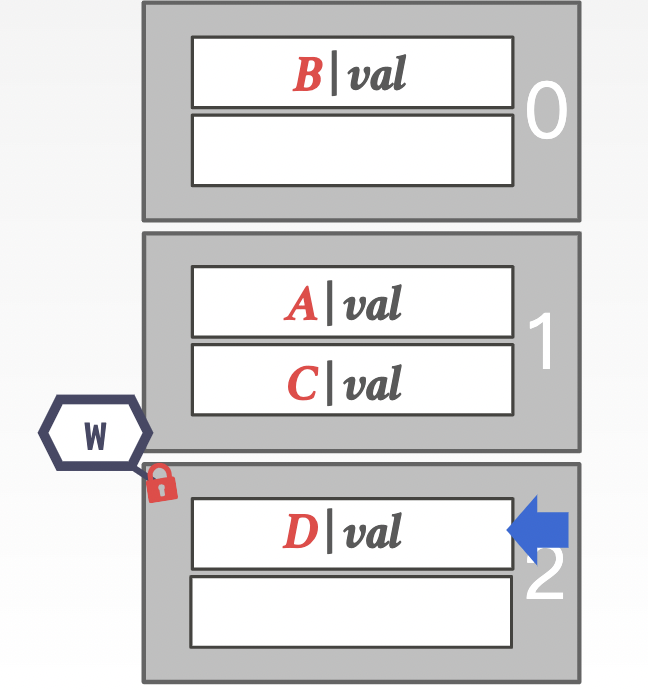

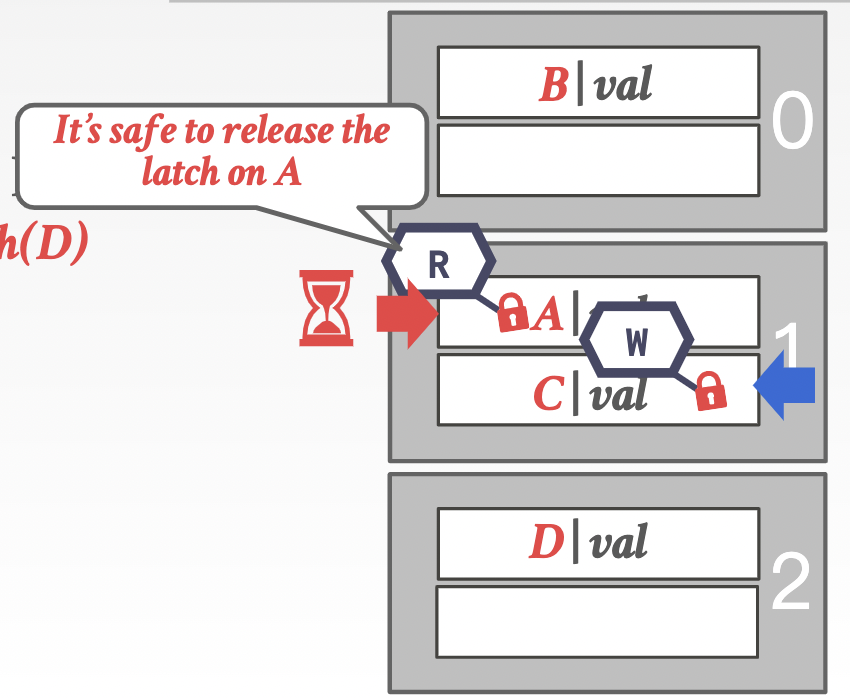

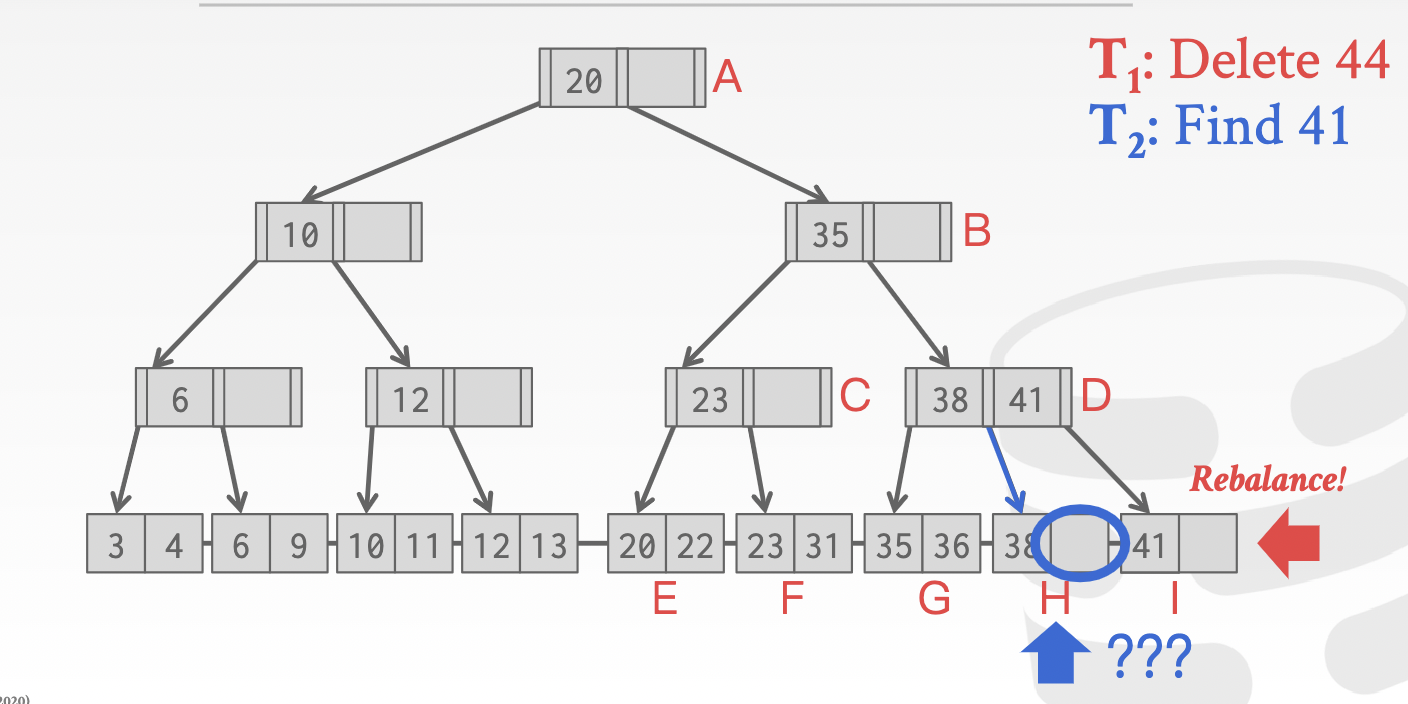

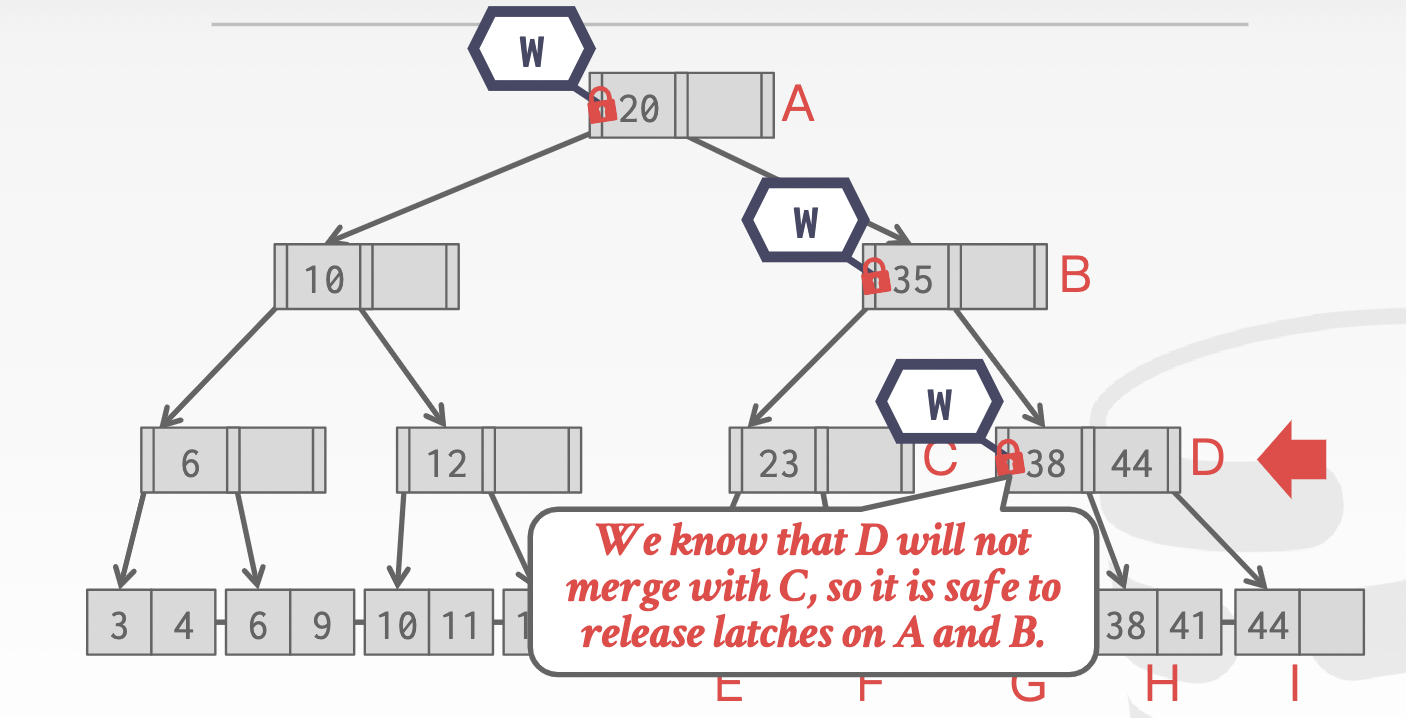

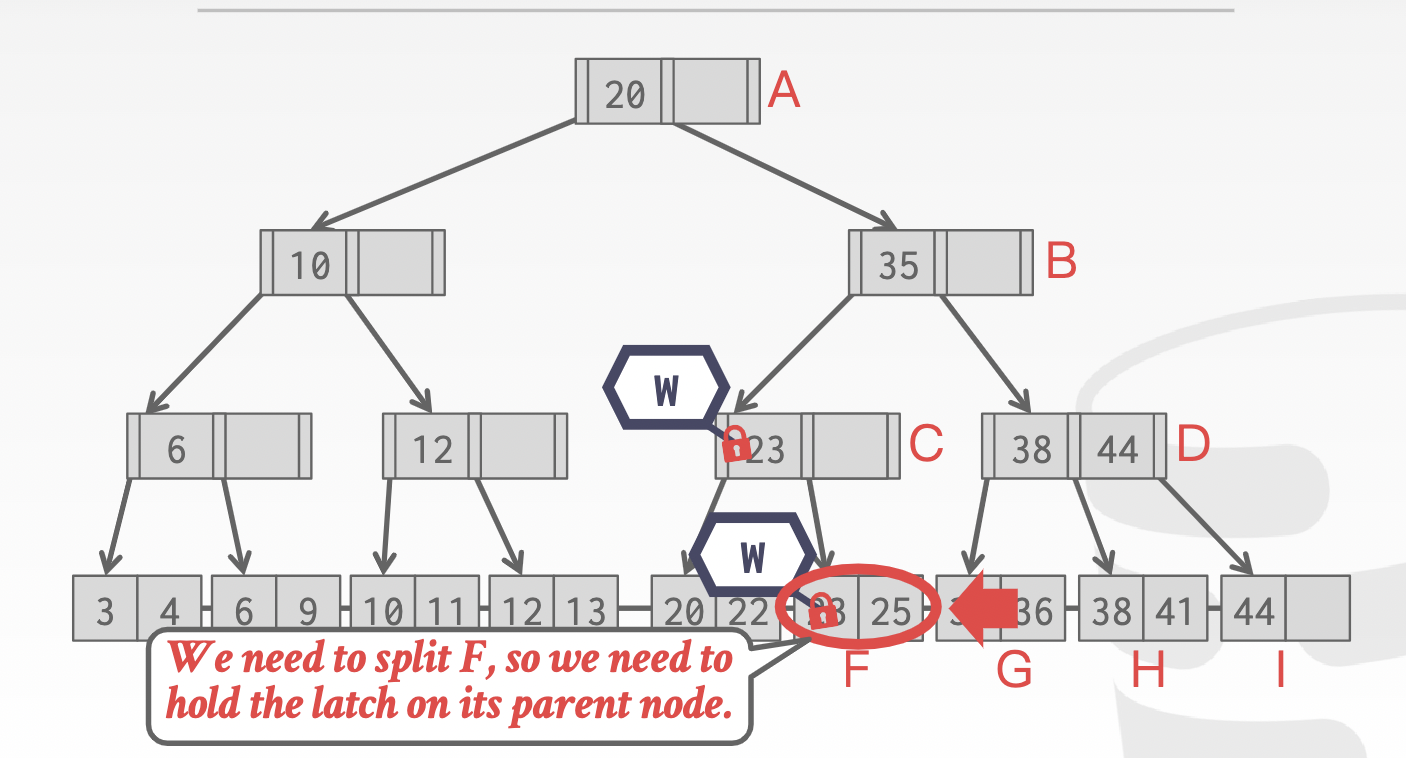

Solution: latch crabbing/coupling protocol -> top-down direction

- define safe node: A “safe” node is one that will not split or merge when updated (not full on insertion or more than half full on deletion -> write view(insert/delete)

- Procedures:

- get latch for the parent

- get latch for the child

- release latch for the parent if it is deemed “safe”

-

Basic latch crabbing protocol for operations

-

Search: top-down

-

Insert/delete: If latched child is safe, release latches on all its ancestors

Delete 38:

Insert 25:

-

-

observation 2: first step of all the updates on the b+tree is taking a write latch on the root. -> bottleneck with higher concurrency

- No need to acquire write latch on the safe node update.

-

Better latching algorithm: assume the target leaf node is safe, use READ latches on the way. If it the node is not safe, apply the old method(WRITE latch on the root)

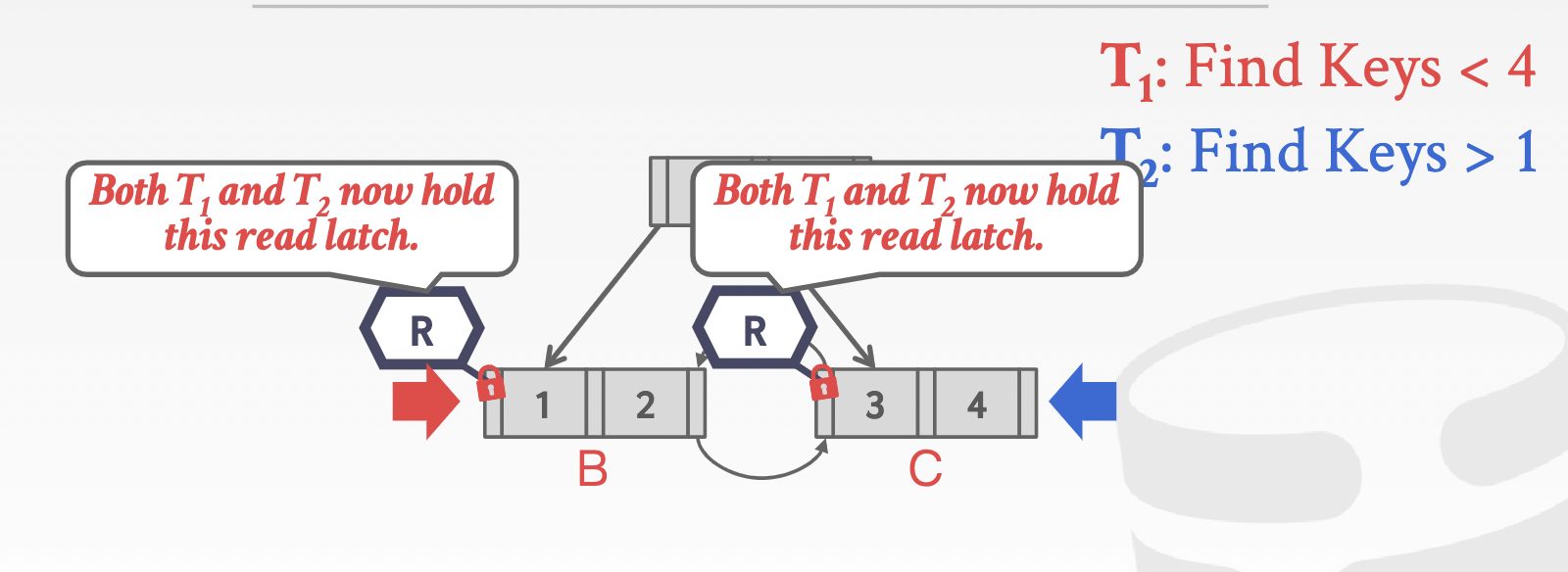

Problem 3: leaf node scan -> dead lock

-

observation: threads acquire locks in two directions at the same time

Problem 4: delayed parent updates

Problem 5: versioned latch coupling

-