4 virtual memory

17 virtual memory¶

Fact:DRAM accessing hard disk is much more slower(100,000) than cache accessing main main memory.

Problem: Enormous miss penalty of secondary storage

Solution/Goal: the miss rate should be very small compared to the rate of executing instructions.(decrease miss ratio)

Design policy:

-

high associativity: avoid collisions between accesses

-

large block size: amortize the cost of miss over many future hits

-

Write-back strategy: separation of hardware and software.only update the contents of disk when data that’s changed in main memory needs to be replaced by data from other blocks of secondary storage.

upside of long latencies: manage the organization of main memory and the accesses to secondary storage in software.

-> handle hits in hw, misses in sw

Virtual memory¶

Fact: we only need to ensure the current working set of a program is actually resident in main memory. Locations not currently being used could live in secondary storage until needed.

Goal:

-

Exploit locality on a large scale

- solution: MMU with single-level page map

- optimizations

- store in RAM

- TLB

- Cache/VM interactions

-

ease memory management, protect multiple contexts from each other.

> MMU:translate VA to PA¶

Solution: two part: VAtoPA + PageFault

- use hw(MMU) to implement VtoP, use sw(page fault exception handler of CPU) to handle exceptional cases

fact: MMU maps virtual pages to physical pages. Cause a page fault if virtual page is not resident in physical memory.

implementation: Paging/demand paging

Plan:

-

All virtual pages in secondary storage, MMU empty.

-

map VA to PA

2.1 RAM-resident page

2.2 non-resident page: page fault

CPU switches execution to page fault handler, the handler allocates a physical page for VA loaded from hard disk, then adjusts the page map entry. If all physical pages are unavailable, it chooses an existing page to replace. Finally return control to program.

-

Working set incrementally loaded via page faults

problem: thrashing: programs constantly generate page faults, which causes the program run very slowly.

Arithmetic: page map(v, m, p)

> Where to store page map:¶

-

store in RAM(main memory): RAM-resident page maps

use a register, called the page map pointer, to hold the address of the page map array in main memory

problem: each memory reference takes 2 accesses to physical memory

-

cache the page map entries: translation look-aside buffer: a special-purpose fully-associative cache

TLB(VA, PA)

If the PPN is found by using the TLB, the access to main memory for the page table entry can be avoided.

-

Variations:

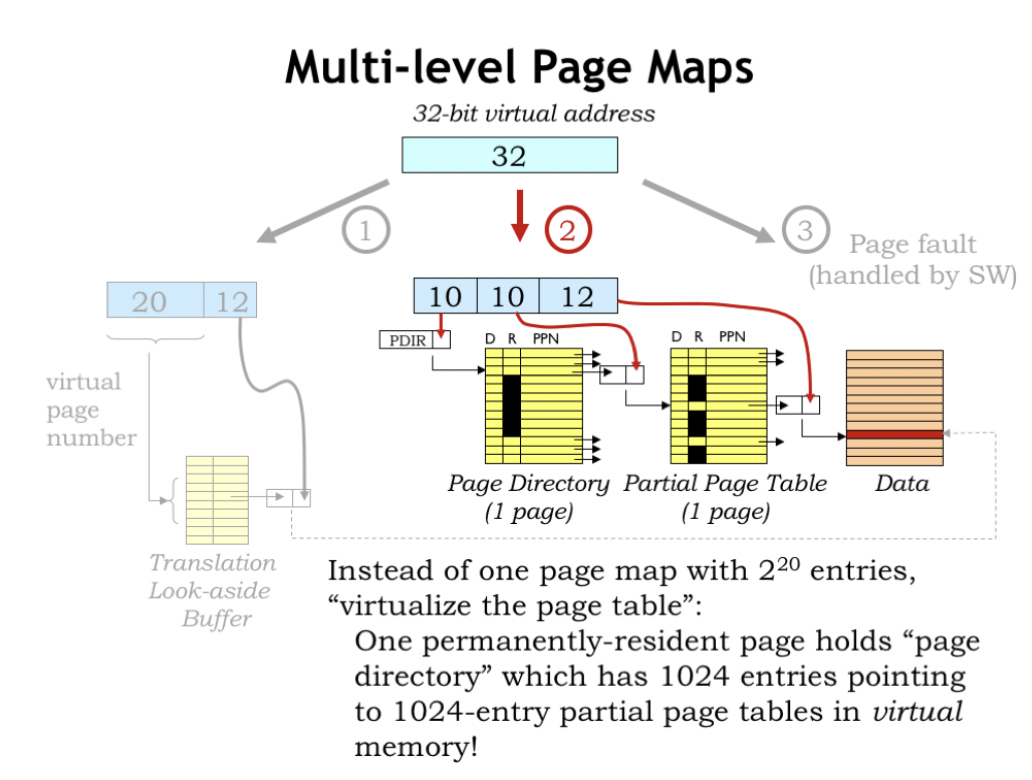

- Multi-level page map

The key idea is that the page map segments are in virtual memory, i.e., they don’t all have to be resident at any given time. If the running application is only actively using a small portion of its virtual address space, we may only need a handful of pages to hold the page directory and the necessary page map segments.

- paging the page map

-

> sum up: MMU address translation¶

Virtual machine¶

Fact:

context: a mapping of VA to PA

- problem: timesharing among several programs,When new program starts, the context should be updated by flushing TLB which costs a lot.

example: OS kernel/user

-

Solution: perform rapid context-switching

- add a register to hold index of current context.

- switch contexts: update context # and pageablePtr registers.

-

Problem: use caches with Virtual memory

disign choice:

- Virtually-addressed cache

- Physically-addressed cache

- Overlapped operation

several part to consider: MMU time on HIT, flush cache after context switch

…to be continued this part

18 virtualizing the processor¶

回顾一下虚拟内存,简单来说它提供给每个运行的程序一个拥有计算机所有内存地址空间的幻想。它通过内存控制单元实现从虚拟地址到物理地址的转换,用虚拟地址查询页表,而用页表来记录物理地址,完成查询。

由于访问硬盘的延迟比主存储器(main memory)慢的多,通过TLB(一种缓存器)来记录活跃的虚拟地址到物理地址的转换。

build a VM¶

把正在运行的程序抽象成进程,一个进程包含它运行所需要的所有资源(CPU,I/O device, MMU,virtual address space)…

有一个特别的优先的进程叫kernel。OS为各种进程提供服务,比如访问文件中的数据,建立网络连接,管理UI。从不同用户模式的进程切换,OS需要保存现在运行的进程状态,保存在主存储器中或二级存储器中。

Problem: share one physical machine between all the virtual machines. As if each process was running on its own “virtual machine” that works independently of other virtual machines for other processes.(OS’s job)

Fact: one VM for each process

- OS把外接设备都抽象成服务,为每个进程提供独立的虚拟机,周期性地从一个进程转换到下一个进程。

- 外接设备包含一个定时器触发周期性的CPU中断,提供非易失性存储的二级存储器,连接外部设备的USB接口,视频监控器,键盘,鼠标等用户界面服务。

- 抽象包括窗口,访问磁盘内的文件,网络传输等。OS通过supervisor calls(SVCs)配置和控制虚拟服务。

The process state includes

- the hardware state of the CPU, i.e., the values in the registers and program counter.

- the contents of the process’ virtual address space, including code, data values, the stack, and data objects dynamically allocated from the heap. Under the management of the MMU, this portion of the state can be resident in main memory or can reside in secondary storage.

- the hardware state of the MMU, which, as we saw earlier, depends on the context-number and page-directory registers. Also included are the pages allocated for the hierarchical page map.

- additional information about the process’ input and output activities, such as where it has reached in reading or writing files in the file system, the status and buffers associated with open network connections, pending events from the user interface (e.g., keyboard characters and mouse clicks), and so on.

进程如何复用cpu?¶

time-multiplexing of the cpu = timesharing

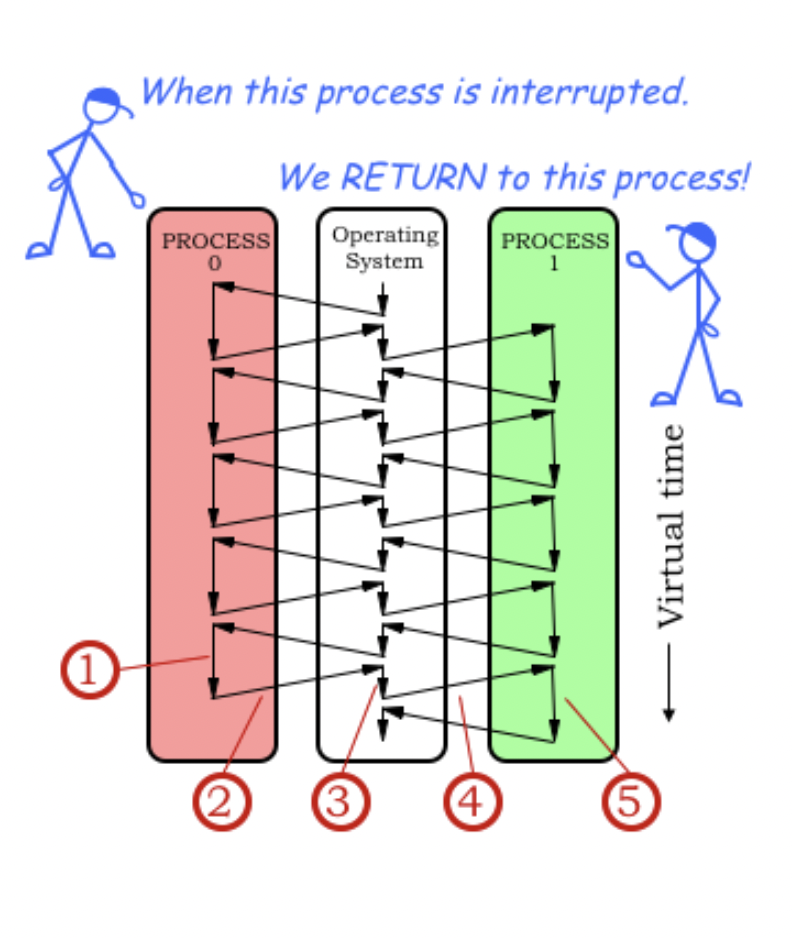

OS从运行进程0到运行进程1:

- 运行进程0 -> 停止运行0,将控制转移回OS kernel,保存当前地址(PC+4)-> 保存进程0的状态并且加载进程1的状态 -> 回到进程1 -> 运行进程1。

- 第2步和第4步是不同的trap handler。

interrupts to time-sharing¶

中断处理:handler

-

Hardware

Timer interrupts: the periodic interrupt from the external timer device.

how the interrupt hardware in the Beta works?

it saves the PC+4 of the interrupted user-mode program in the XP register and sets the program counter to some predetermined value that depends on which external interrupt happened.

-

software: beta interrupt handling

-

保存状态到寄存器中(在os是叫UserMState的数据结构,在main memory中存储CPU的寄存器)-> C程式处理exception ->重装寄存器内保存的状态 -> 回到原来的地址(Reg[XP]-4)

-

Where to find handlers

- Beta scheme: wire in a low-memory address for each exception handler entry point

- Table of handler addresses

-

问题:不是很懂中断对用户程序是透明的?或许是指用户程序能够访问中断改变的变量/状态。

Since the process state is saved and restored during an interrupt, interrupts are transparent to the running user-mode program.

-

-

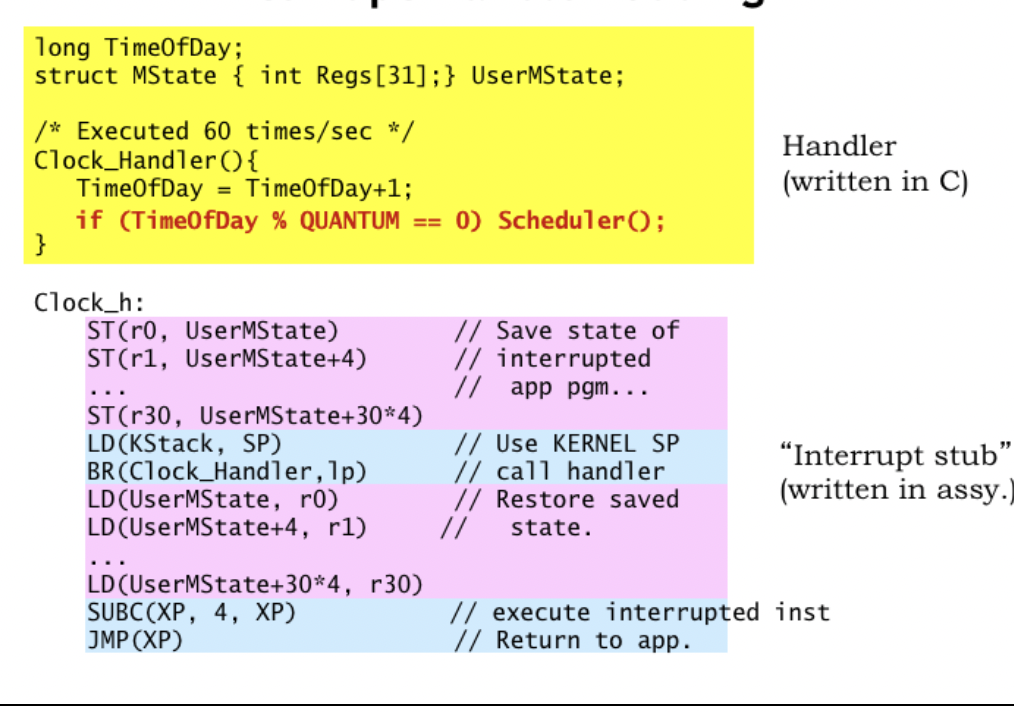

Example: timer interrupt handler

-

设定:os maintains current time of day count in response to clock events.There are program A and clock handler.

-

目标是用时间中断器来更新os中记录现在时间的值。定时器定期中断用户模式程序,以在操作系统中运行时钟中断处理程序代码,然后继续用户模式程序的运行。对A来说,中断仿佛没有发生。如果A需要访问时间,则需要向os做出提出相应的服务要求。

1

2

3

4

5

6

7

8

9

10

11

12

13//Handler(C)

long TimeOfDay;

Struct Mstate {} UserMstate;

Clock_Handler(){}

//Interrupt stub(assymbly)

Clock_h:

save the values of all registers into the UserMState;

set up the kernel-mode stack;

call clock_handler;

reload registers;

decrement the XP register value;

return to the app;

-

-

Scheduler: simple timesharing scheduler

-

设定:UserMState, process control block of each process(process table), index of current process

-

怎样运行一个新的时间共享的进程?

-

scheduler通过保存现在进程的状态,改变索引值为进入下一个进程准备

1

2

3

4

5

6scheduler(){

proctbl[cur].state = UserMState;

cur = (cur+1)%N;

UserMState = proctbl[cur].state; //install state

LoadUserContext(....PageMap); //install context

}

-

-

-

Time-sharing story in os

-

Timer 中断正在运行的用户模式程序并开始执行clock handler代码。clock handler先保存现在的进程状态到相关的数据结构中然后调用scheduler。scheduler将暂时保存的进程状态转移到process table中,而之后调用的scheduler会为下一个运行的进程保存相关的状态到暂时存放的地址,clock handler重新加载寄存器保存的状态继续运行下一个进程,这样循环下去。

-

设定: supervisor mode bit:1时不允许中断,0时允许。kernel mode is set 1; user mode is set 0.

- 中断不能同时运行,一次只能运行一次。设置监控位保证这一点。由于中断发生会改变进程状态,这样是为了确保不会覆盖进程的状态到寄存器(UserMState)中。

-

exception handling¶

problem: how to deal with “illegal” opcodes?

…跳过了硬件,汇编的部分

Communicate with os¶

Problem: how user-mode programs communicate with OS code?

当os在运行其他进程时,用户程序该如何将控制转移回os?

Solution:

- abstraction: Use supervisor calls with args in registers

- Implementation: use illegal instructions to cause an exception, and OS recognize the instruction as a user-mode SVCs

…跳过了汇编部分

19 devices and interrupts¶

Problem 1: how the OS interacts with the devices themselves?

Problem 2: how supervisor calls access the kernel buffers in response to requests from user-mode processes?

Interrupt-based asynch I/O¶

操作系统中有很多输入/输出设备,以键盘输入为例,用户在键盘上打字,引起键盘向cpu发起中断请求。中断停止当前程序的运行而执行这个特别的输入输出事件。当内核中的缓存用完后会发生什么?

早先的处理方式是丢弃收到的键盘输入的字符,提示用户之前输入的已作废。

后来用户模式的程序执行ReadKey() SVC,要求OS返回下一个字母到R0寄存器中,然后遵循SVC继续执行指令。

ReadKey() SVC: a blocking I/O request.

当没有字符返回时不执行直到有可用的结果返回。

the program assumes that when the SVC returns, the next character is in R0. If there isn’t (yet) a character to be returned, execution should be “blocked”, i.e., suspended, until such time that a character is available.

Non-blocking I/O request: return immediately with both a status flag and a result.

当没有字符返回时仍然执行指令,在之后重新发起请求。

-

Operation: no attention to keyboard during normal operation

-

Event-driven approach

用户模式程序和键盘并没有直接互动,而是通过事件驱动方法,设备当需要处理输入时通过中断向操作系统发出信号。这样把责任分离的好处是减少操作系统不停地检查是否有未完成的I/O操作

-

-

The interrupt-driven OS interactions with I/O devices are completely transparent to user programs.

-

Example: keyboard interrupt handler

- sketch: cpu needs access device status and data

- approach:Memory-mapper I/O: a portion of the kernel address space is devoted to servicing I/O devices.

1

2

3

4

5

6

7

8struct Device{

char Flag, Data;

}keyboard;

keyHit_h(){

Buffer[inptr] = keyboard.Data;

inptr = (...)...;

} -

现实中更复杂:

键盘处理还需要识别键的状态是按下还是释放,然后将字符转换成ASCII码,并且需要处理特殊的按键组合。

Implementations of ReadKey¶

Goal: the associated supervisor call that lets user programs read characters.

Attempt1: 直接写

1 | ReadKey_h(){ |

kbdnum:keyboard num;proctbl: process table

-

Problem:kernel/supervisor mode can’t be interrupted.

the SVC handler is running with the supervisor bit (PC[31]) set to 1, disabling interrupts.

-

such that the buffer never gets filled.

-

陷入死循环

-

Attempt2: fix the looping problem by adding code to subtract 4 from the saved value of the XP register before returning

1 | ReadKey_h(){ |

-

和Attempt1的区别是:

-

执行循环的方式:当中断推出时,操作系统通过跳到xp继续执行用户模式的指令。通过改变这一地址来循环发出中断。(汇编的常见循环方式?我认为)

When the handler exits, the OS will resume execution of the user-mode program by reloading the registers and then executing a JMP(XP), which would normally then execute the instruction following the SVC instruction.

-

ReadKey() SVC 进入用户模式

-

-

Problem: waste its time-slice waiting for next keystroke

Attempt3: 利用等待的时间执行别的程序,通过循环调度的方式最后回到ReadKey() SVC

The call to Scheduler() suspends execution of the current process and arranges for the next process to run when the handler exits. (Round-robin scheduling).

建立在以下事实上:

Fact: the time slices for each process are small enough that one round of process execution happens more quickly than the time between two typed characters

- Time-sharing 在此场景下的意义:

- 进行计算密集型的程序时由于分隔时间(cycles)过短,time-sharing可能会增加额外的成本在进程交换(context-switching, scheduling)上。

- 当有很多个程序需要执行时,分隔时间增加,time-sharing才增加了效率

- 进一步的提高效率:将进程的状态(活跃/等待)记录到进程的数据中,通过优先执行活跃的程序减少等待的时间。

- unix系统中sleep, wakeup可以改变进程的相应状态

Attemp4: “进一步”的实现:no CPU cycles are wasted on useless activity.

1 | ReadKey_h(){ |

The effect is that once a process goes to sleep() WAITING for an event, it’s not considered for execution again until the event occurs and wakeup() marks the process as ACTIVE.

Example: which handler & os? 像个解谜游戏。根据handler和os的特点来排除。

Time-sharing can’t meet with ddl¶

Problem: asynchronous world.

Solution: I/O: separate “event handling” from “event processing”.

- Downside: the need for real-time: can’t predict completion time, therefore can’t deal with real-time system

- Example: ESC on cars,需要实时计算各种因素才能准确刹车。而这是时间分享系统不能做到的

Time-sharing system: 在单个硬件系统上通过快速在进程间切换伪造并行的假象,为每个程序提供了在独立的虚拟机上运行的幻想。

downside: processing throughput is more variable

the need for real time¶

-

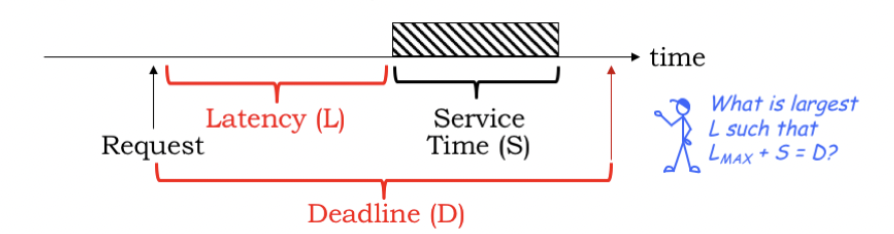

what’s the largest L such that Lmax+S=D?

Measure of performance in a real-time system: interrupt latency L,the amount of time that elapses between a request to run some code and when that code actually starts executing.

-

What factors contribute to interrupt latency?

-

关心这个问题的原因在于实现最小化中断延迟以在期限时间完成程序运行的目标。所以我们需要知道导致延迟的原因然后减小延迟。

-

state save, context switch

While handling an interrupt:it takes times to save the process state, switch to the kernel context, and dispatch to the correct interrupt handler.

-

periods of un-interruptability

- Long,uninterruptable instructions

- explicitly disabled periods: interrupt handler in kernel mode

-

to bound and minimize interrupt latency by all means

We’ll do this by optimizing the cost of taking an interrupt and dispatching to the correct handler code. We’ll avoid instructions whose execution time is data dependent. And we’ll work to minimize the time spent in kernel mode.

-

Real-time scenarios¶

scenario: scheduling of multiple devices: long-running handlers have a huge impact on the worst-case latency seen by the other devices.

> Stragegy0: first-come-first-served scenario.¶

Problems: reducing the worst-case latencies

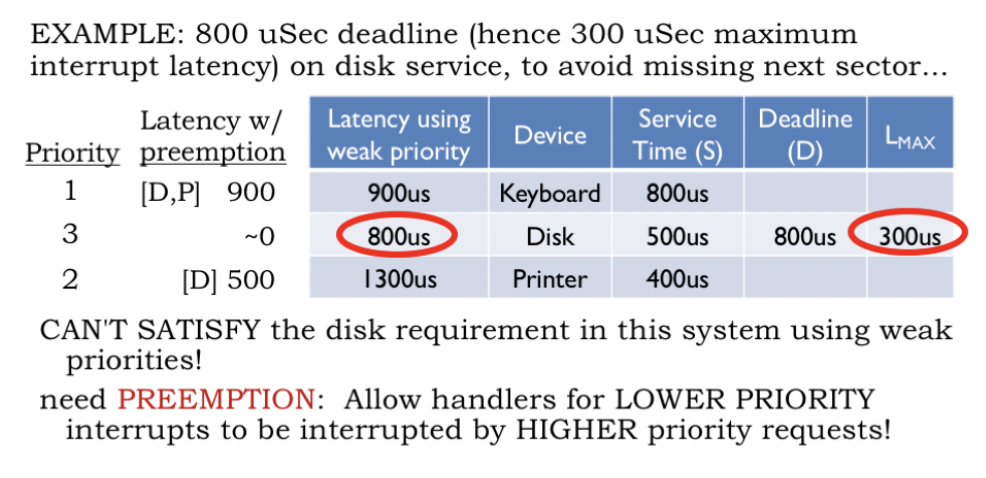

> Strategy1: “nonpreemptive” / “weak” priority system.¶

-

assign priorities to the pending requests and to serve the requests in priority order

-

Result: worsk-case latency = the worst-case service time of all the other devices + the service time of all higher-priority devices

当前运行的程序完成后才会运行下一个(在不支持中断的系统中)。

-

Subproblem: How should priorities be assigned given hard real-time constraints?

-

assume each device has a service deadline D after the arrival of its service request. assume D is the time until the next request for the same device.

-

Strategy: earliest deadline, therefore highest priority = earliest deadline

-

Sort the requests by their deadlines.

-

Assign the highest priority to the earliest deadline, second priority to the next deadline, and so on.

-

-

-

例子:机场候机排序,优先让飞机快要起飞的乘客候机虽然会延迟部分乘客的候机时间,但是会减少延误的次数。但是如果系统过载,让最先要起飞的乘客优先候机会导致所有人都延误,在这个场景中排序优先问题需要重新讨论,因为目标变成了能够使延误航班次数最少的排序优先。

-

downside:the worst-case latency for a device always includes the maximum time we have to wait for the currently-running task to complete.

Scenario: suppose disk requests have a 800 us deadline in order to guarantee the best throughput from the disk subsystem. Since the disk handler service time is 500 us, the maximum allowable latency between a disk request and starting to execute the disk service routine is 300 us.

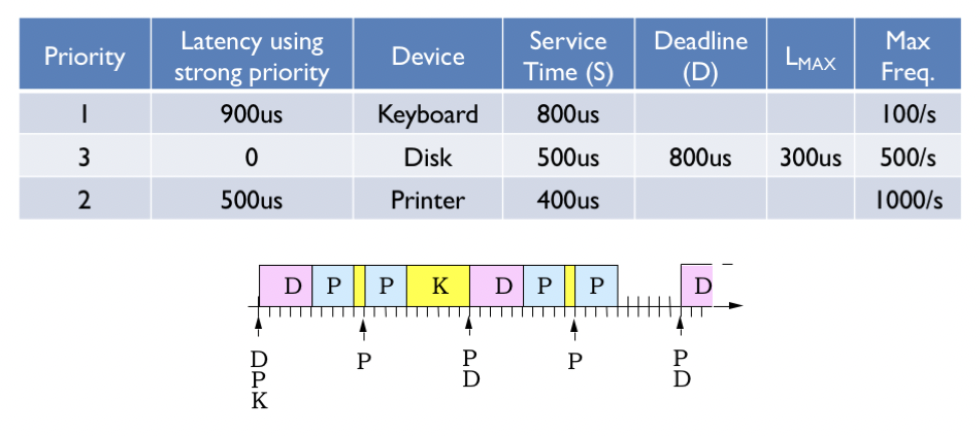

> Strategy2: the need for preemption¶

->a preemptive priority system/“strong” priority system

怎么处理strategy1中的场景问题?

设定新的优先级排序为:disk > printer> keyboard,改变的是当请求发出时立刻执行当前程序而不等待更低优先级程序的完成。在强优先级系统中,优先级和ddl非常相关,所以一定能在限期完成请求;而在弱优先系统中,优先级按照服务需要完成的时间划分,所以在考虑中断延迟时需要考虑其他服务的中断延迟时间。

…跳过了硬件实现部分…

> recurring interrupts¶

Consider interrupts which recur at bounded rates:

Keyboard handler doesn’t complete until 3 ms after its request was received.

由于只要有优先级高于键盘的请求,就必须先执行那个程序。所以在实时系统中用ddl作为约束而不是延迟时间的长短。

面临的问题:当键盘ddl在3ms之前时,强优先系统仍然无法达到实时条件的限制,这时说明在紧张的ddl下没有足够的cpu周期达到对服务的重复要求。

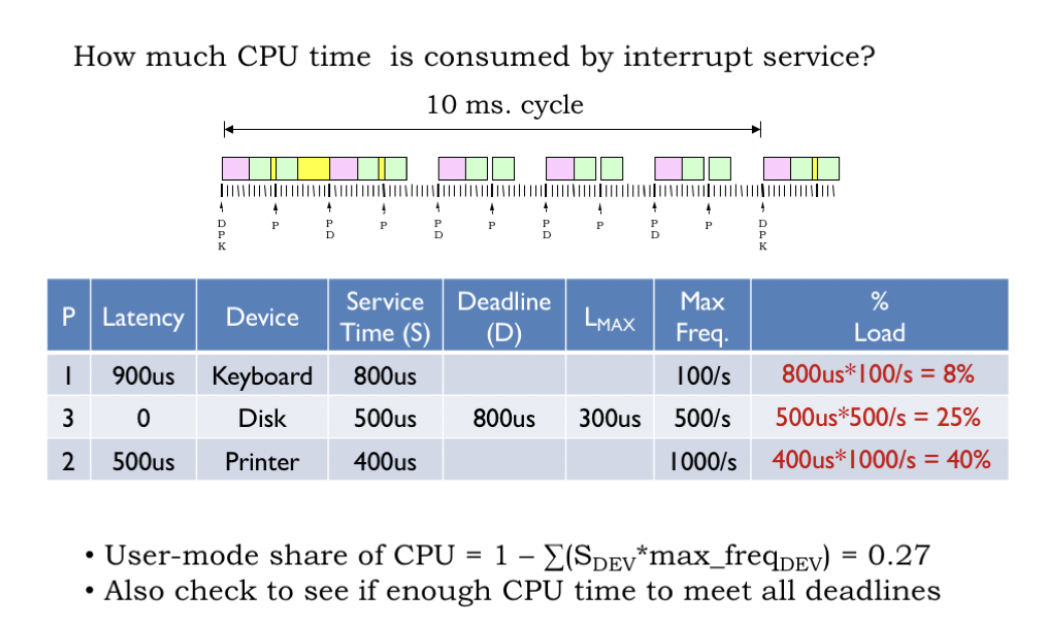

calculations of recurring interrupts

-

how much load each periodic request places on the system?

- Service time * max frequency = Load%

- User-mode share

-

whether have enough CPU cycles to meet each of the deadlines?

- example: for disk: 500/800=67.5% of the cycles to service the disk in the time between the disk request and disk deadline.